Vision AI Dev provisioning with Azure IoT Central

Summary

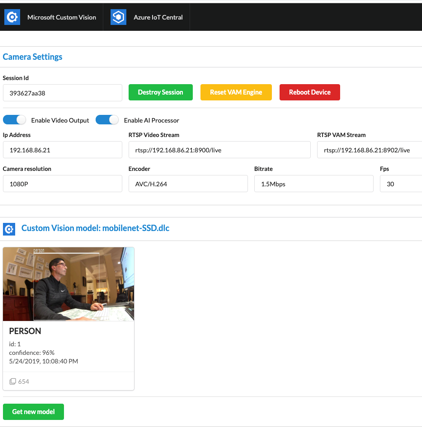

This project introduces a Vision AI module with a web client that lets the user interact directly with the device, both to control it and to experiment with Custom Vision AI models. It also demonstrates how a Vision AI DevKit device can provision itself with Azure IoT Central. Once provisioned, the device reports telemetry, state, events, and settings live, and the user can control the ML model manually.

While it is a fully working sample with detailed instructions in the README, it is also meant to be a resource to help you build your own custom implementation.

Implementation

This project is implemented as a Node.js microservice and a React web client. Because IoT Central support for module deployments was still in development, the project uses IoT Hub to deploy the module. The module then connects to IoT Central to interact directly with the device. The project showcases the capabilities of the intelligent edge camera, including telemetry and other built-in functions.
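The self-provisioning step can be illustrated with a minimal sketch. It assumes the device uses symmetric-key group enrollment, a common way for devices to connect to IoT Central through the Device Provisioning Service; the source does not say which attestation method this project uses. Under that scheme, each device's key is derived by computing an HMAC-SHA256 of the device ID, keyed with the base64-decoded group key. The function name `deriveDeviceKey` and the sample values are hypothetical.

```typescript
import { createHmac } from "crypto";

// Hypothetical helper: derive a per-device SAS key from an enrollment-group key.
// Assumes symmetric-key group enrollment; not taken from the project's code.
function deriveDeviceKey(groupKeyBase64: string, deviceId: string): string {
  // The group key is distributed as base64, so decode it to raw bytes first.
  const keyBytes = Buffer.from(groupKeyBase64, "base64");
  // HMAC-SHA256 over the device ID, re-encoded as base64 for use as a SAS key.
  return createHmac("sha256", keyBytes).update(deviceId, "utf8").digest("base64");
}

// Example with placeholder values (not real credentials).
const groupKey = Buffer.from("example-group-key").toString("base64");
console.log(deriveDeviceKey(groupKey, "vision-ai-devkit-01"));
```

The derived key, together with the application's ID scope and the device ID, would then be passed to the Azure IoT provisioning client for Node.js to register the device and obtain its connection details. Deriving keys on the device means only the group key has to be distributed, rather than one key per device.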

Software and Services Used

Hardware

Repository

Find more information and relevant code here.

Users are encouraged to innovate and continue to improve the functionality of current projects.

Future Improvements and Project Suggestions

Feel free to fork the project and contribute back any improvements or suggestions. Contributors and maintainers are welcome.

About the Creator

Scott Seiber is a long-time Microsoft software engineer who focuses on the intersection of hardware and software. He currently works in the Azure IoT organization, helping partners with their digital transformations.

You can learn more about what Scott is working on here.
