Running SLMs as Sidecar extensions on App Service for Linux

Introduction:

Natural language processing (NLP) is no longer limited to massive AI models requiring significant computational resources. With the rise of Small Language Models (SLMs), you can now integrate lightweight yet powerful AI capabilities into your applications without the cost and complexity of traditional Large Language Models (LLMs).

Phi-3 and Phi-4 are two such state-of-the-art SLMs optimized for efficiency and high-quality reasoning. Designed to operate with minimal resource overhead, these models are ideal for scenarios where responsiveness, security and cost-effectiveness are paramount.

- Phi-3-Mini-4K-Instruct is a compact 3.8B parameter model trained on a high-quality dataset, making it an excellent choice for inference tasks with limited infrastructure.

- Phi-4, built on a blend of synthetic and public datasets, is a quantized model optimized for enhanced performance in constrained environments.

By deploying these models as sidecars on App Service for Linux, you can seamlessly enhance applications with conversational AI, content generation, and advanced NLP features. Let's get started!

Building a Frontend for Phi-3 and Phi-4

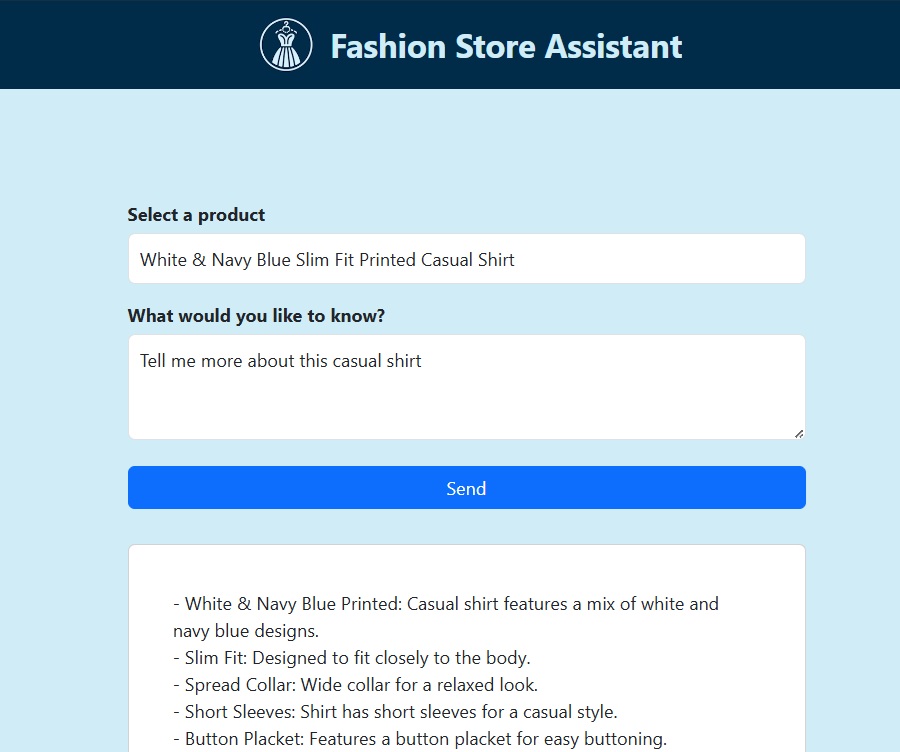

To showcase the capabilities of running Phi-3 and Phi-4 as sidecars, we have a sample application that acts as a frontend for these models: the Fashion Assistant App. This is a .NET Blazor application that implements chat functionality, allowing users to interact with an AI-powered assistant for on-demand product information and styling suggestions.

- Open the dotnet-slm-fashion-assistant-app project in VS Code.

- Open Program.cs. Here you can see how we have configured the endpoint for the model:

      // Register an HttpClient that points at the SLM endpoint.
      // Falls back to the local sidecar address when no URL is configured.
      builder.Services.AddScoped(sp => new HttpClient
      {
          BaseAddress = new Uri(builder.Configuration["FashionAssistantAPI:Url"] ?? "http://localhost:11434/v1/chat/completions")
      });
      builder.Services.AddHttpClient();

- Open SLMService.cs and navigate to the StreamChatCompletionsAsync function. This function calls the SLM endpoint using HttpRequestMessage:

      var content = new StringContent(JsonSerializer.Serialize(requestPayload), Encoding.UTF8, "application/json");
      var request = new HttpRequestMessage(HttpMethod.Post, _apiUrl)
      {
          Content = content
      };
      // ResponseHeadersRead returns as soon as headers arrive, so the body can be streamed.
      var response = await _httpClient.SendAsync(request, HttpCompletionOption.ResponseHeadersRead);
      response.EnsureSuccessStatusCode();

  The response that we get from the endpoint is displayed one token at a time:

      while (!reader.EndOfStream)
      {
          var line = await reader.ReadLineAsync();
          // Strip the "data: " prefix from each streamed line before parsing.
          line = line?.Replace("data: ", string.Empty).Trim();
          if (!string.IsNullOrEmpty(line) && line != "[DONE]")
          {
              var jsonObject = JsonNode.Parse(line);
              var responseContent = jsonObject?["choices"]?[0]?["delta"]?["content"]?.ToString();
              if (!string.IsNullOrEmpty(responseContent))
              {
                  yield return responseContent;
              }
          }
      }

- Open Home.razor. Here, we get the user input, which includes the product and the question. This input forms the prompt and is passed to the StreamChatCompletionsAsync function:

      Product selectedItem = new Product().GetProduct(int.Parse(selectedProduct));
      var queryData = new Dictionary<string, string>
      {
          { "user_message", message },
          { "product_name", selectedItem.Name },
          { "product_description", selectedItem.Description }
      };
      var prompt = JsonSerializer.Serialize(queryData);

      // Append each streamed token to the response and re-render the UI.
      await foreach (var token in slmService.StreamChatCompletionsAsync(prompt))
      {
          response += token;
          isLoading = false;
          StateHasChanged();
      }
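To get a feel for the streaming loop in SLMService.cs without a running model, the following is a minimal offline sketch. It assumes the endpoint emits OpenAI-style `data: {...}` lines terminated by `data: [DONE]`, which is what the parsing code in the sample suggests; the sample stream text and the `ExtractTokens` helper are hypothetical and not part of the sample app. As a small variation, this sketch strips only the leading `data:` prefix rather than replacing every occurrence in the line.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text.Json.Nodes;

// Hypothetical sample of the lines a streaming chat endpoint might return.
const string sampleStream =
    "data: {\"choices\":[{\"delta\":{\"role\":\"assistant\"}}]}\n" +
    "\n" +
    "data: {\"choices\":[{\"delta\":{\"content\":\"Pair it \"}}]}\n" +
    "\n" +
    "data: {\"choices\":[{\"delta\":{\"content\":\"with jeans.\"}}]}\n" +
    "\n" +
    "data: [DONE]\n";

// Hypothetical helper: yields the content fragment of each streamed chunk.
static IEnumerable<string> ExtractTokens(TextReader reader)
{
    string? line;
    while ((line = reader.ReadLine()) != null)
    {
        line = line.Trim();
        // Ignore blank separator lines and anything that is not a data line.
        if (!line.StartsWith("data:", StringComparison.Ordinal)) continue;

        var payload = line.Substring("data:".Length).Trim();
        // "[DONE]" marks the end of the stream and carries no JSON.
        if (payload.Length == 0 || payload == "[DONE]") continue;

        var token = JsonNode.Parse(payload)?["choices"]?[0]?["delta"]?["content"]?.ToString();
        // Chunks without content (for example, the role-only chunk) are skipped.
        if (!string.IsNullOrEmpty(token)) yield return token;
    }
}

// Concatenate the tokens the same way the UI accumulates the response.
var answer = string.Concat(ExtractTokens(new StringReader(sampleStream)));
Console.WriteLine(answer);
```

In the real service the reader wraps the HTTP response stream, so tokens arrive over time and the UI can render each one as soon as it is yielded.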

Deploying Your Web Application

Before adding the Phi sidecar extension, you need to deploy your application to Azure App Service. There are two ways to deploy applications: code-based deployment and container-based deployment.

Note: SLMs run alongside your web apps and share the available compute resources of the machine. Hence, it is recommended to choose a SKU with at least 4 vCPUs and 7 GB of memory.

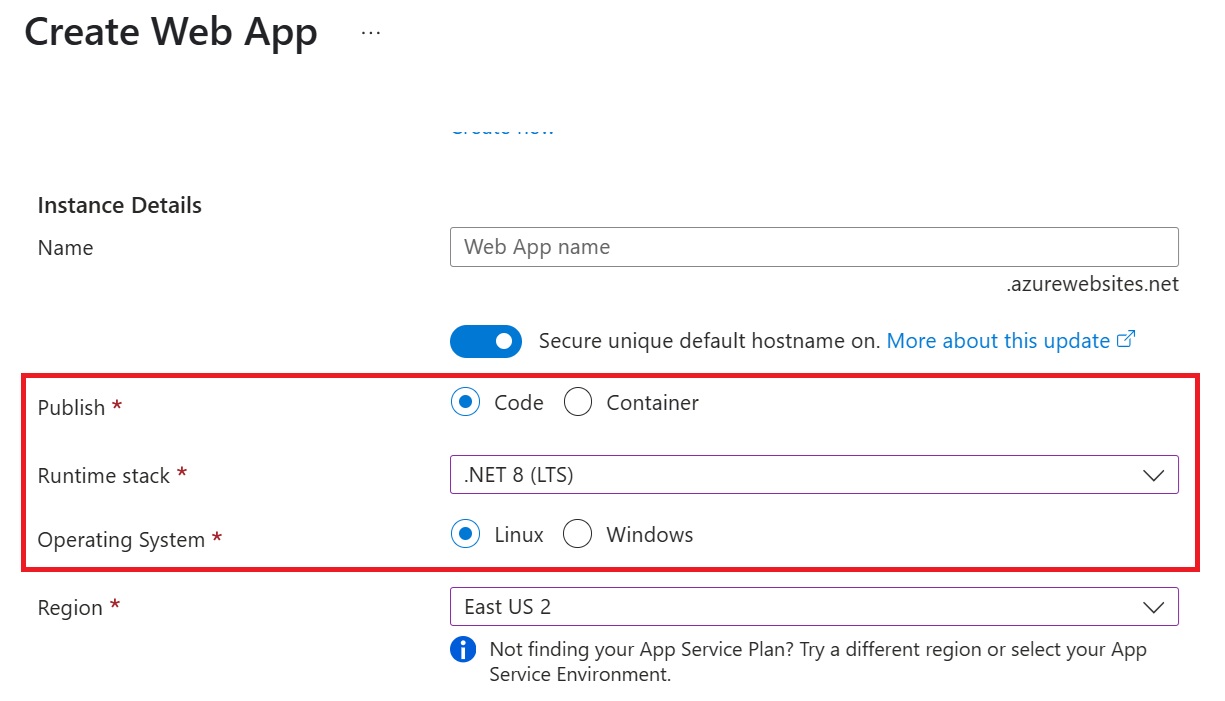

Code-Based Deployment

- Go to the Azure Portal and create a .NET 8 Linux App Service.

- Set up CI/CD with GitHub to automate deployments. See "Deploy to App Service using GitHub Actions".

  Note: Sidecars for code-based applications only support GitHub Actions right now. We are rolling out the experience for other deployment methods.

- Push your application code to your GitHub repository.

The deployment pipeline will automatically build and deploy your web application to Azure App Service.

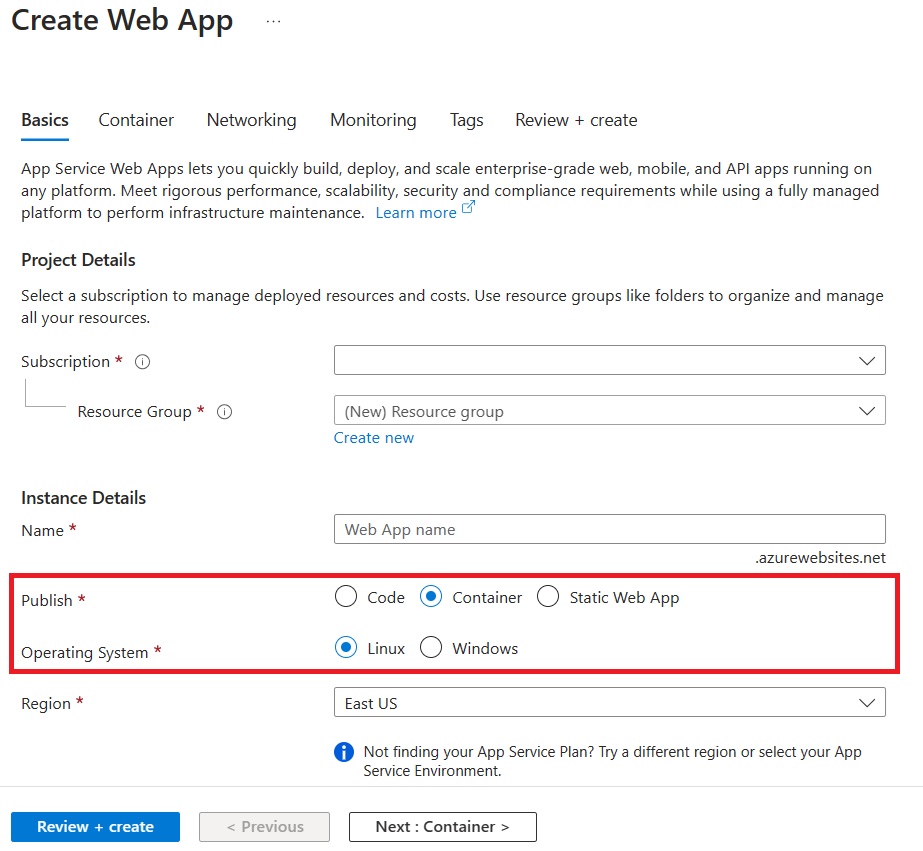

Container-Based Deployment

- Use the Dockerfile in your repository to build a container image of your application. We have a sample Dockerfile here.

- Build the image and push it to your preferred container registry, such as Azure Container Registry, Docker Hub, or a private registry.

- Go to the Azure Portal and create a container-based App Service.

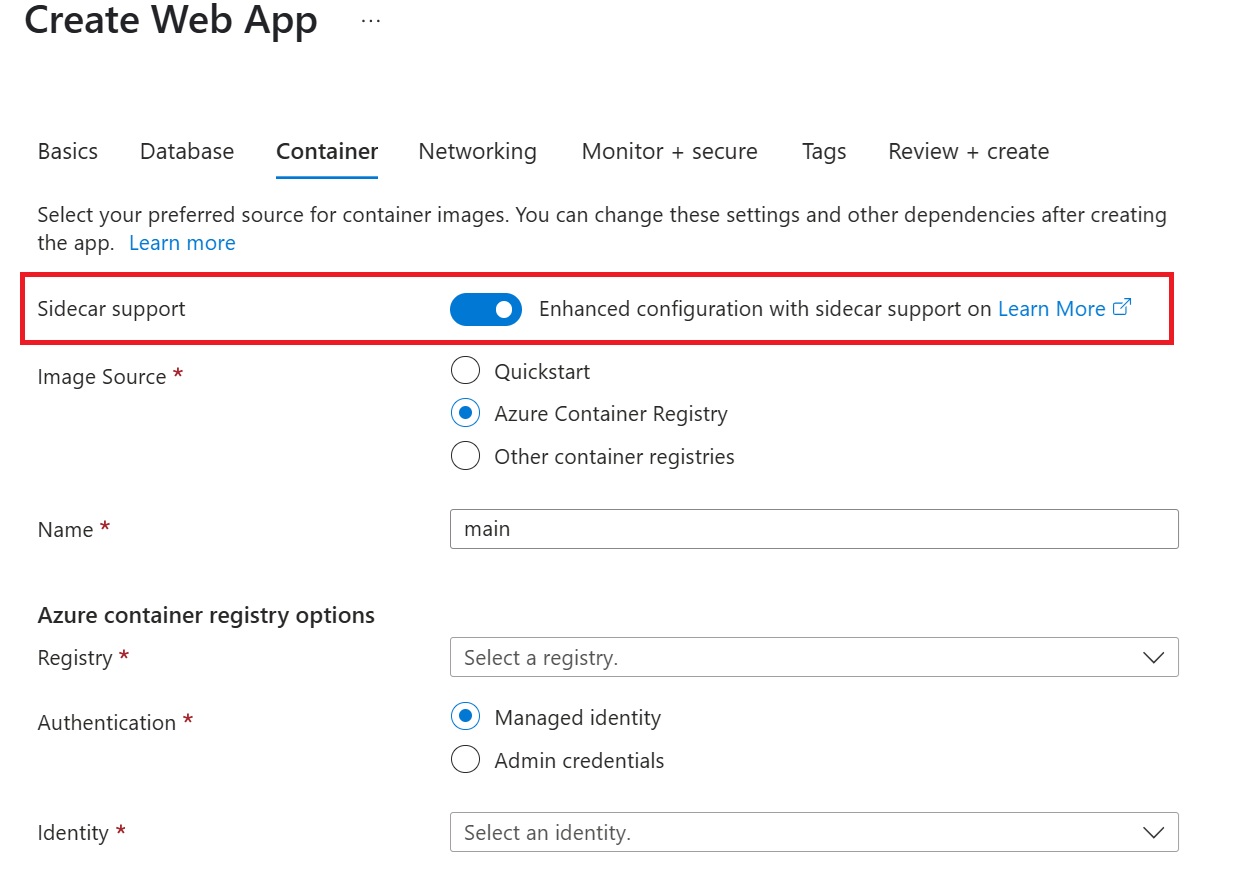

On the Container tab, make sure that Sidecar support is Enabled.

Specify the details of your application image.

Note: We strongly recommend enabling Managed Identity for your Azure resources.

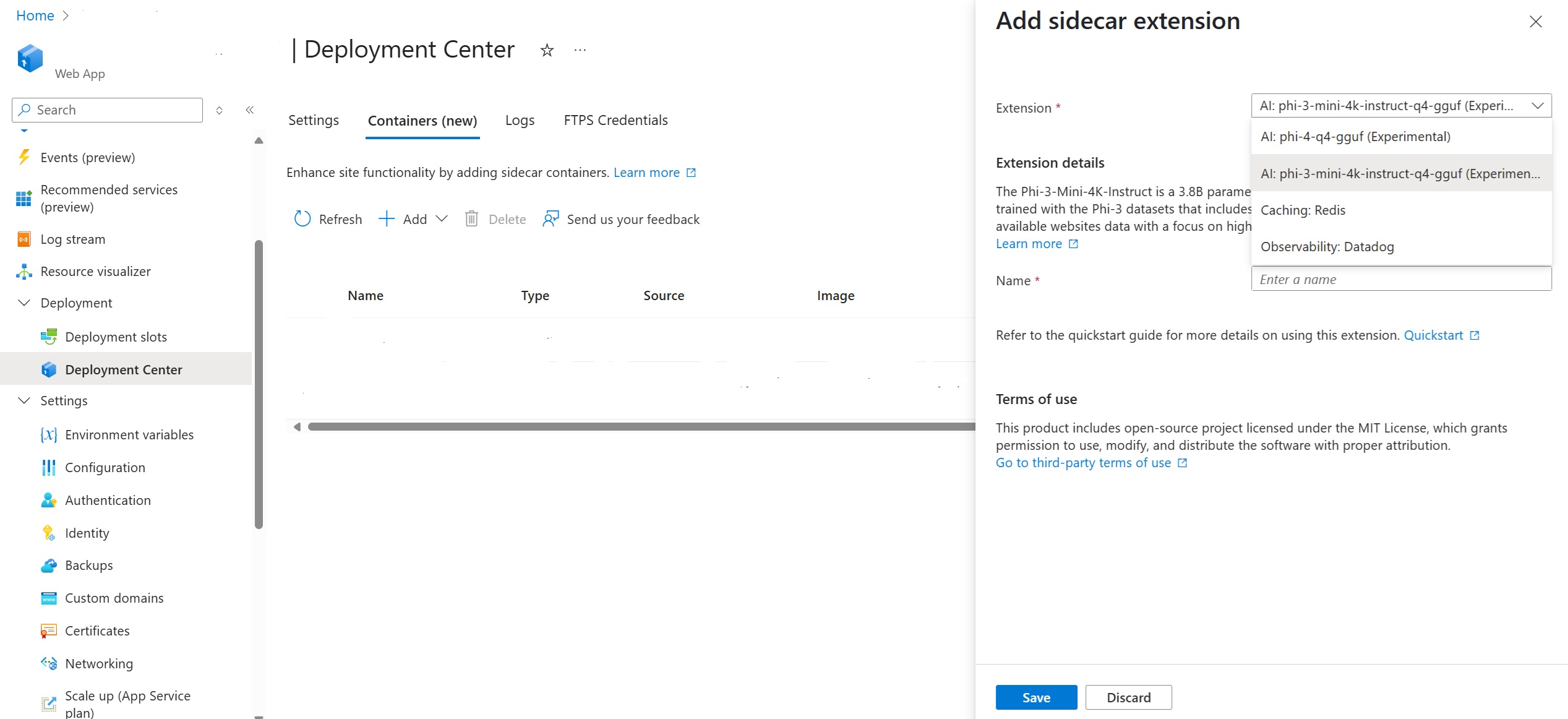

Adding the Phi Sidecar Extension

Once your application is deployed, follow these steps to enable the Phi sidecar extension:

- Navigate to the Azure Portal and open your App Service resource.

- Go to Deployment Center in the left-hand menu and navigate to the Containers tab.

  Note: You might see a banner which says *Interested in adding containers to run alongside your app? Click here to give it a try.* Clicking on the banner will enable the new Containers experience for you.

- Add the Phi sidecar extension.

Testing the Application

After adding the sidecar, wait a few minutes for the application to restart.

Note: Since we are deploying a language model, please be aware that the application might take a little longer to start up the first time. This delay is due to the initial setup and loading of the Phi model, which ensures that it is ready to handle requests efficiently. Subsequent startups should be faster once the model is properly initialized.

Once the application is live, navigate to it and try asking questions like "Tell me more about this shirt" or "How do I pair this shirt?"
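To make concrete what the app sends on your behalf when you ask one of these questions, here is a small sketch that rebuilds the prompt the way Home.razor does and wraps it in a request body. The body shape (a `messages` array plus `stream = true`) is an assumption based on the OpenAI-style `/v1/chat/completions` path; the actual `requestPayload` in SLMService.cs is not shown in this post and may contain other fields. The product name and description are made up for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Build the prompt as Home.razor does: a JSON-serialized dictionary.
var queryData = new Dictionary<string, string>
{
    { "user_message", "How do I pair this shirt?" },
    { "product_name", "Navy Formal Shirt" },            // hypothetical product
    { "product_description", "Slim-fit cotton shirt" }  // hypothetical description
};
var prompt = JsonSerializer.Serialize(queryData);

// Assumed request body: a single user message, with streaming turned on
// so the answer can be rendered token by token.
var requestPayload = new
{
    messages = new[]
    {
        new { role = "user", content = prompt }
    },
    stream = true
};

var body = JsonSerializer.Serialize(requestPayload);
Console.WriteLine(body);
```

Note that the prompt is itself a JSON string embedded in the message content, so the model receives the question together with the product name and description in one message.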

Conclusion

The integration of Phi models as sidecars on Azure App Service for Linux demonstrates the power of Small Language Models (SLMs) in delivering efficient, AI-driven experiences without the overhead of large-scale models. We are actively working on more AI scenarios for Azure App Service and would love to hear what you are building. Your feedback and ideas are invaluable as we continue to explore the possibilities of AI and cloud-based deployments.