1-Bit Brilliance: BitNet on Azure App Service with Just a CPU

In a world where running large language models typically demands GPUs and hefty cloud bills, Microsoft Research is reshaping the narrative with BitNet — a compact, 1-bit quantized transformer that delivers surprising capabilities even when deployed on modest hardware.

BitNet is part of a new wave of small language models (SLMs) designed for real-world applications where performance, latency, and cost are critical. Traditional transformer models store their weights as 16- or 32-bit floating-point numbers. BitNet instead quantizes each weight to one of three values (-1, 0, or +1), so many weights are exactly zero. This makes it remarkably lightweight while still retaining strong reasoning abilities.
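To make the quantization idea concrete: BitNet b1.58 stores each weight as one of three values (-1, 0, or +1) plus a shared scale, not as a floating-point number. Below is a minimal pure-Python sketch of the "absmean" weight quantization described in the BitNet b1.58 paper. It divides the weight matrix by the mean of its absolute values, then rounds and clips each entry to -1, 0, or +1. It is an illustration only. The helper name `absmean_ternarize` is hypothetical, and the real model applies this during training and uses optimized kernels at inference time.

```python
def absmean_ternarize(weights, eps=1e-6):
    """Quantize a 2-D list of floats to values in {-1, 0, +1} plus one scale.

    absmean recipe: gamma = mean(|W|), then
    W_q = clip(round(W / (gamma + eps)), -1, 1).
    """
    flat = [w for row in weights for w in row]
    gamma = sum(abs(w) for w in flat) / len(flat)  # mean absolute weight
    scale = gamma + eps  # eps avoids division by zero for an all-zero matrix

    def round_clip(x):
        # Round to the nearest integer, then clamp into [-1, 1]
        return max(-1, min(1, round(x)))

    quantized = [[round_clip(w / scale) for w in row] for row in weights]
    return quantized, gamma


W = [[0.9, -0.05, 0.4],
     [-1.2, 0.02, 0.6]]
Wq, gamma = absmean_ternarize(W)
print(Wq)  # [[1, 0, 1], [-1, 0, 1]]

# Each original weight is approximated by gamma * Wq[i][j]
approx = [[gamma * q for q in row] for row in Wq]
```

Small weights such as -0.05 and 0.02 collapse to 0. Large weights of either sign saturate at -1 or +1, and a single `gamma` per matrix restores the overall magnitude.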

In mid-April 2025, Microsoft Research unveiled BitNet b1.58 2B4T on Hugging Face: a 2-billion-parameter transformer with just 1.58-bit (ternary) weights, trained on a staggering 4 trillion tokens.
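The "1.58 bits" figure has a simple origin. A weight that can take three values carries log2(3) ≈ 1.585 bits of information. The back-of-the-envelope sketch below compares that theoretical storage for about 2 billion weights with 16-bit floating point. It assumes exactly 2 billion parameters, all stored at the ideal rate. Real model files pack ternary weights less tightly and typically keep some tensors (such as embeddings) at higher precision, so actual sizes are larger.

```python
import math

# Assumption: a round 2 billion weights, all ternary (real models keep some
# tensors, such as embeddings, at higher precision)
PARAMS = 2_000_000_000

bits_per_weight = math.log2(3)  # three states per weight: -1, 0, +1
ternary_gb = PARAMS * bits_per_weight / 8 / 1e9  # bits -> bytes -> GB
fp16_gb = PARAMS * 16 / 8 / 1e9

print(f"bits per ternary weight: {bits_per_weight:.3f}")  # 1.585
print(f"ternary weights: ~{ternary_gb:.2f} GB")            # ~0.40 GB
print(f"fp16 weights:    ~{fp16_gb:.2f} GB")               # ~4.00 GB
print(f"reduction:       ~{fp16_gb / ternary_gb:.1f}x")    # ~10.1x
```

A roughly tenfold smaller weight footprint, about 0.4 GB instead of 4 GB in this idealized case, is a large part of why a CPU-only App Service plan can host the model.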

In this blog, we’ll show you how you can run this model on Azure App Service for Linux, leveraging its Sidecar architecture to serve BitNet models alongside your web app — no GPU required. Whether you’re building intelligent chat interfaces, processing reviews, or enabling offline summarization, you’ll see how App Service enables you to add AI to your app stack — with simplicity, scalability, and efficiency.

Getting Started with BitNet on Azure App Service

To make it even easier to get hands-on with the BitNet model, we’ve published a ready-to-use Docker image:

👉 mcr.microsoft.com/appsvc/docs/sidecars/sample-experiment:bitnet-b1.58-2b-4t-gguf

You can try it in two simple ways:

1. Spin up a Container-Based App with BitNet (Quickest way)

The easiest way to get started is by creating a container-based app on Azure App Service and pointing it to the BitNet image.

Here’s how you can do it through the Azure Portal:

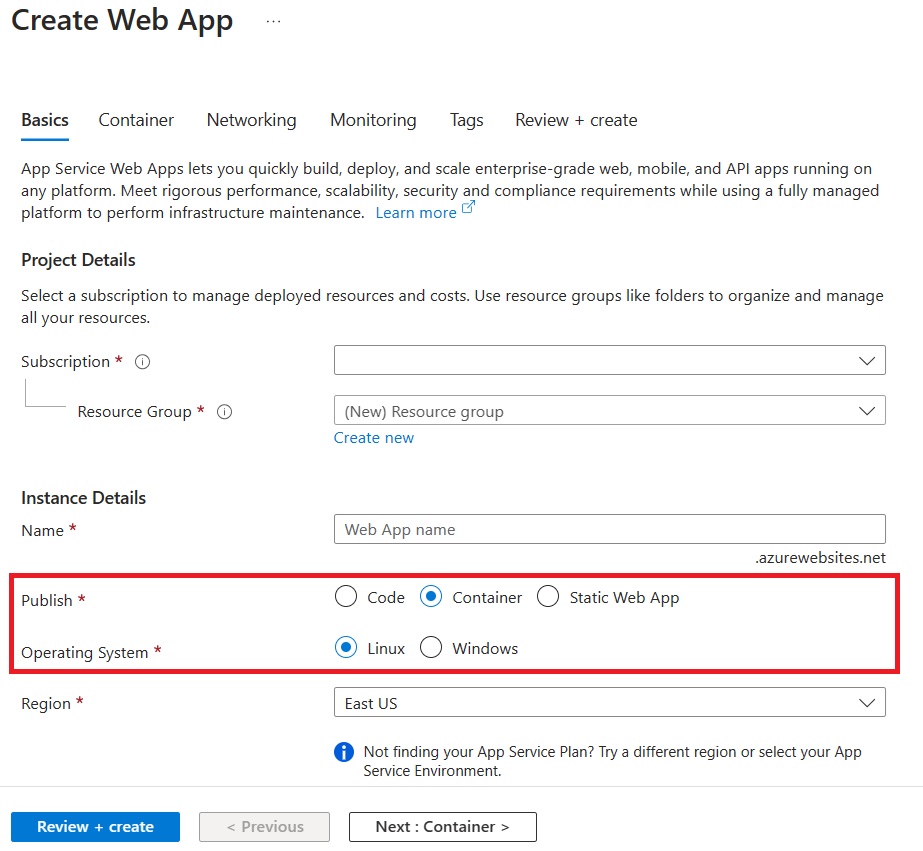

- In the Azure Portal, go to Create a resource > Web App.

- Under Publish, select Container.

-

Choose Linux as the Operating System.

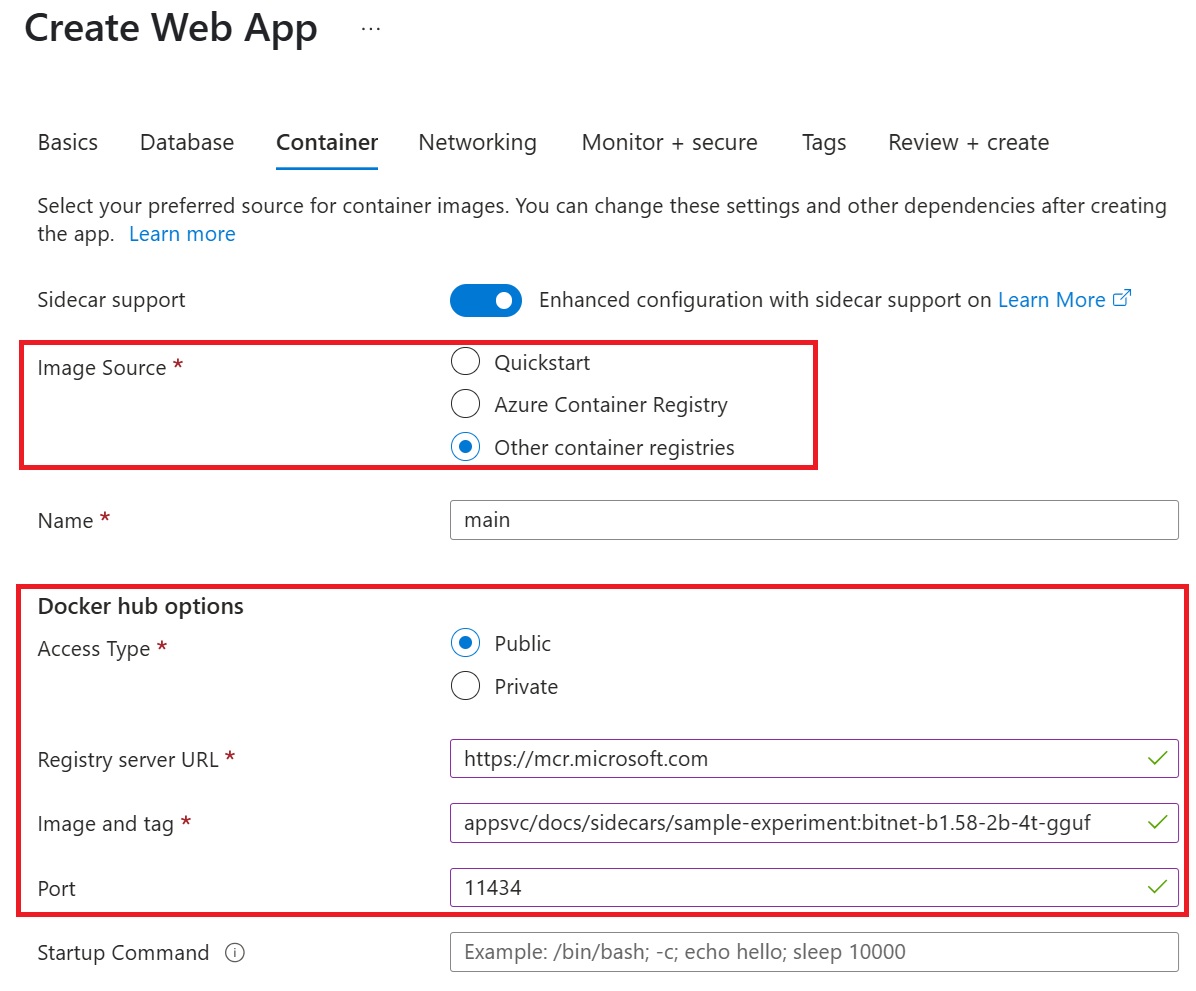

- In the Containers tab:

- Set Image source to Other Container registries.

-

Enter this Image and Tag:

mcr.microsoft.com/appsvc/docs/sidecars/sample-experiment:bitnet-b1.58-2b-4t-ggufSpecify the port as 11434

-

Review and Create the app.

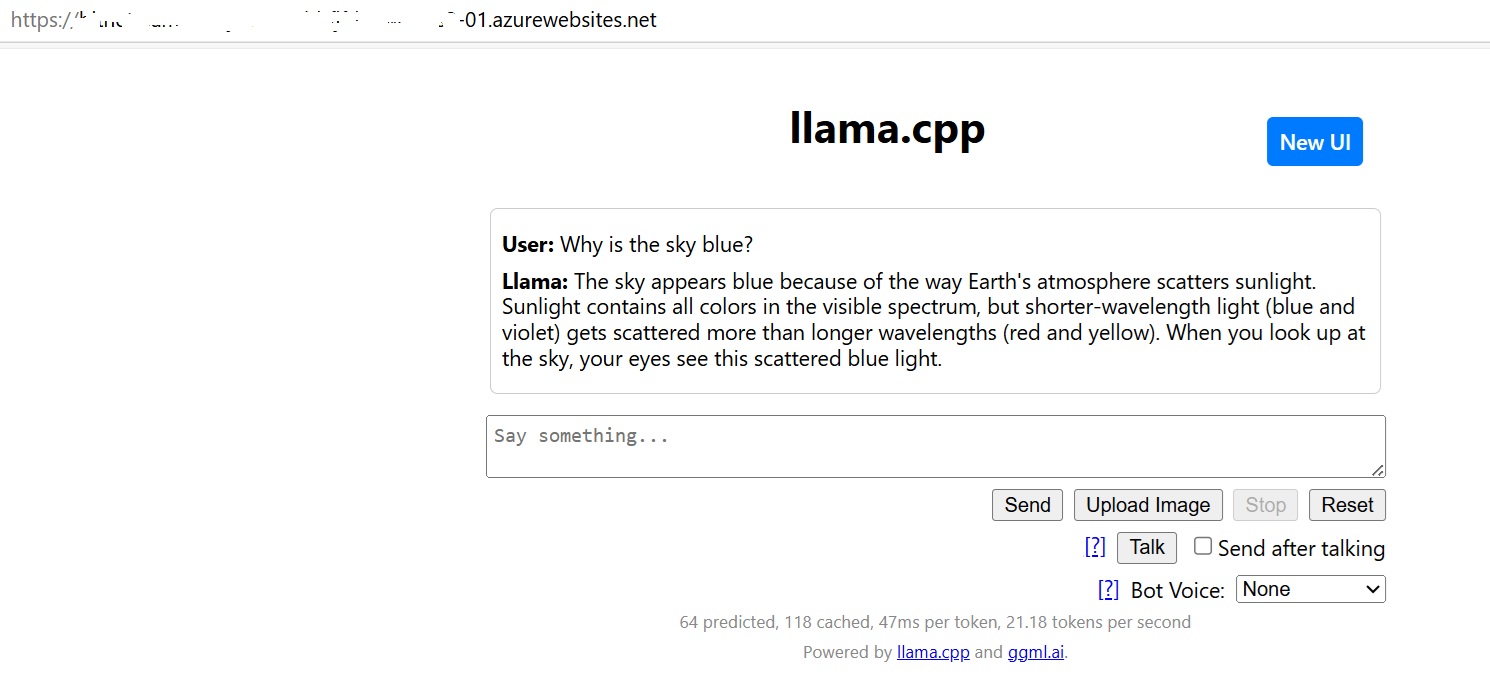

Once deployed, you can simply browse to your app’s URL.

Because the container serves the model with a llama.cpp-based server, it automatically provides llama.cpp's default chat interface in the browser, so no extra code is needed!
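The same server also answers API calls, not just browser visits. Here is a minimal sketch that uses only the Python standard library. It assumes the container exposes llama.cpp's OpenAI-compatible `/v1/chat/completions` route on your app's public URL, which is the same route the Flask sample calls over localhost. `APP_URL` is a placeholder for your own app's address, and `build_chat_request` and `ask` are hypothetical helper names.

```python
import json
import urllib.request

# Placeholder: replace with your own App Service URL
APP_URL = "https://<your-app-name>.azurewebsites.net"


def build_chat_request(base_url, message, n_predict=200):
    """Build a non-streaming chat completion request for the BitNet container."""
    payload = {
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": message},
        ],
        "stream": False,         # return the whole reply in one JSON response
        "n_predict": n_predict,  # llama.cpp option: cap the number of generated tokens
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_url, message):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(base_url, message), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Once `APP_URL` points at your deployed app, `print(ask(APP_URL, "Hello!"))` prints the model's reply. The first call after a cold start may be slow while the model loads.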

2. Customize Your Chat UI with a Python Flask App

If you want to build a more customized experience, we have you covered too!

You can use a simple Flask app that talks to our BitNet container running as a sidecar.

Here’s how it works:

The app calls the BitNet sidecar through its local endpoint. Sidecar containers run alongside the main app and are reachable from it on localhost:

```python
ENDPOINT = "http://localhost:11434/v1/chat/completions"
```

It sends a POST request with the user message and streams back the response.

Here’s the core Flask route:

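```python
import json

import requests
from flask import Flask, Response, request

app = Flask(__name__)

# The BitNet sidecar is reachable from the main app on localhost
ENDPOINT = "http://localhost:11434/v1/chat/completions"


@app.route('/chat', methods=['POST'])
def chat():
    user_message = request.json.get("message", "")

    payload = {
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_message}
        ],
        "stream": True,         # stream tokens back as they are generated
        "cache_prompt": False,  # llama.cpp option: don't reuse the KV cache from earlier requests
        "n_predict": 300        # llama.cpp option: generate at most 300 tokens
    }
    headers = {"Content-Type": "application/json"}

    def stream_response():
        with requests.post(ENDPOINT, headers=headers, json=payload, stream=True) as resp:
            # Each streamed chunk arrives as a "data: {...}" line
            for line in resp.iter_lines():
                if line:
                    text = line.decode("utf-8")
                    if text.startswith("data: "):
                        try:
                            data_str = text[len("data: "):]
                            data_json = json.loads(data_str)
                            # Forward only the newly generated text in each chunk
                            for choice in data_json.get("choices", []):
                                content = choice.get("delta", {}).get("content")
                                if content:
                                    yield content
                        except json.JSONDecodeError:
                            # Skip non-JSON events such as the final "data: [DONE]"
                            pass

    # Note: chunks are yielded as plain text, not re-framed as SSE "data:" lines
    return Response(stream_response(), content_type='text/event-stream')


if __name__ == '__main__':
    # Local development only; on App Service the app is typically served by Gunicorn
    app.run(debug=True)
```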
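To test the streaming logic without a running sidecar, you can factor the parsing inside `stream_response` into a pure function. The sketch below is a hypothetical refactor: `iter_delta_text` is not part of the sample repo. It applies the same rules as the route to any iterable of raw byte lines, such as `resp.iter_lines()`.

```python
import json


def iter_delta_text(lines, prefix="data: "):
    """Yield the text deltas from OpenAI-style streaming chunks.

    `lines` is any iterable of raw byte lines, e.g. resp.iter_lines().
    Blank keep-alive lines, non-data lines, and non-JSON payloads such
    as the final "[DONE]" marker are skipped.
    """
    for raw in lines:
        if not raw:
            continue
        text = raw.decode("utf-8")
        if not text.startswith(prefix):
            continue
        try:
            chunk = json.loads(text[len(prefix):])
        except json.JSONDecodeError:
            continue
        for choice in chunk.get("choices", []):
            content = choice.get("delta", {}).get("content")
            if content:
                yield content


sample = [
    b'data: {"choices":[{"delta":{"role":"assistant"}}]}',
    b'',
    b'data: {"choices":[{"delta":{"content":"Hello"}}]}',
    b'data: {"choices":[{"delta":{"content":", world"}}]}',
    b'data: [DONE]',
]
print("".join(iter_delta_text(sample)))  # Hello, world
```

Inside the route, `stream_response` could then reduce to `yield from iter_delta_text(resp.iter_lines())` within the same `with` block.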

Steps to deploy:

- Clone the sample Flask app from our GitHub repo.

- Deploy the Flask app to Azure App Service as a Python Web App (Linux).

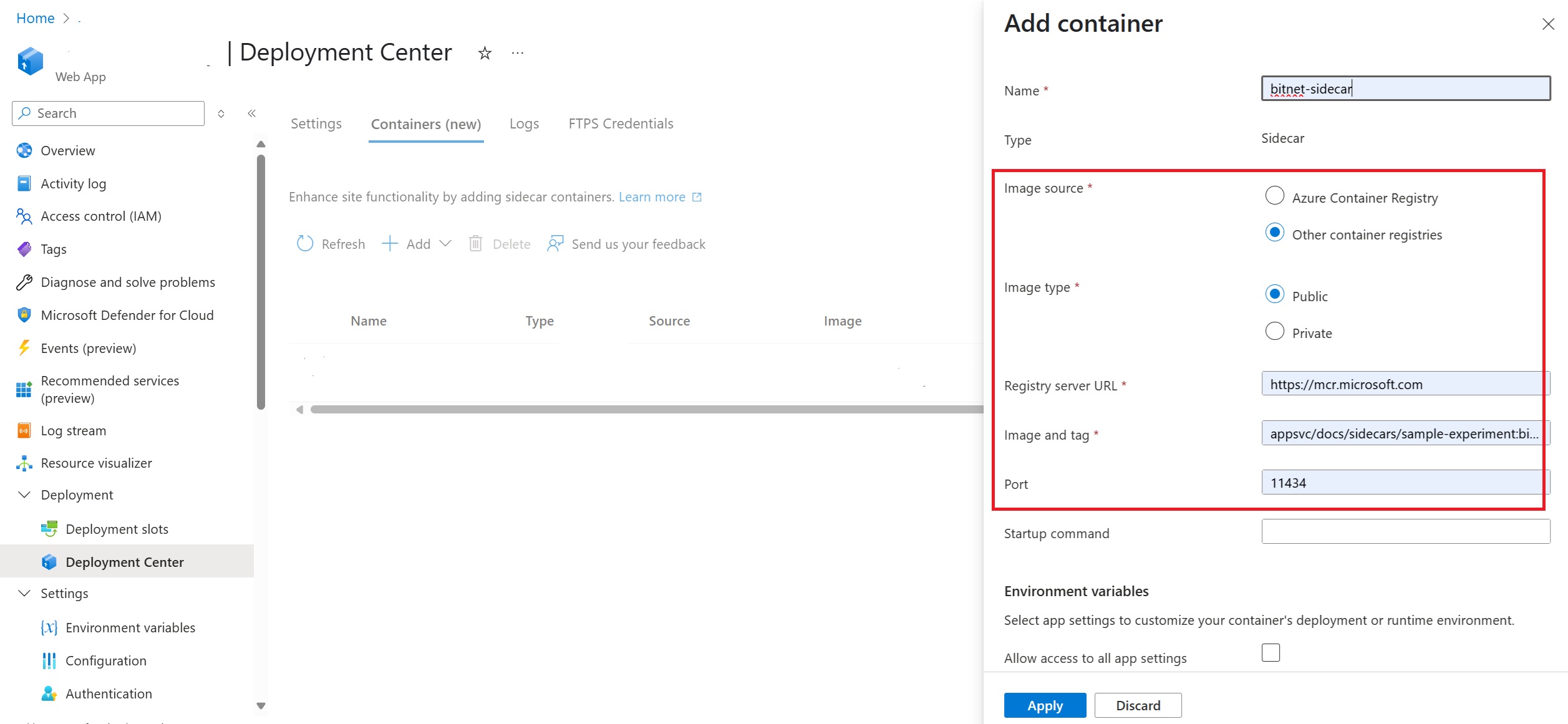

- After deployment, add a BitNet sidecar:

  - Go to your App Service in the Azure Portal.

  - Open the Deployment Center for your application and add the BitNet image as a sidecar container, using port 11434 to match the ENDPOINT the Flask app calls.

  - Save and restart the app.

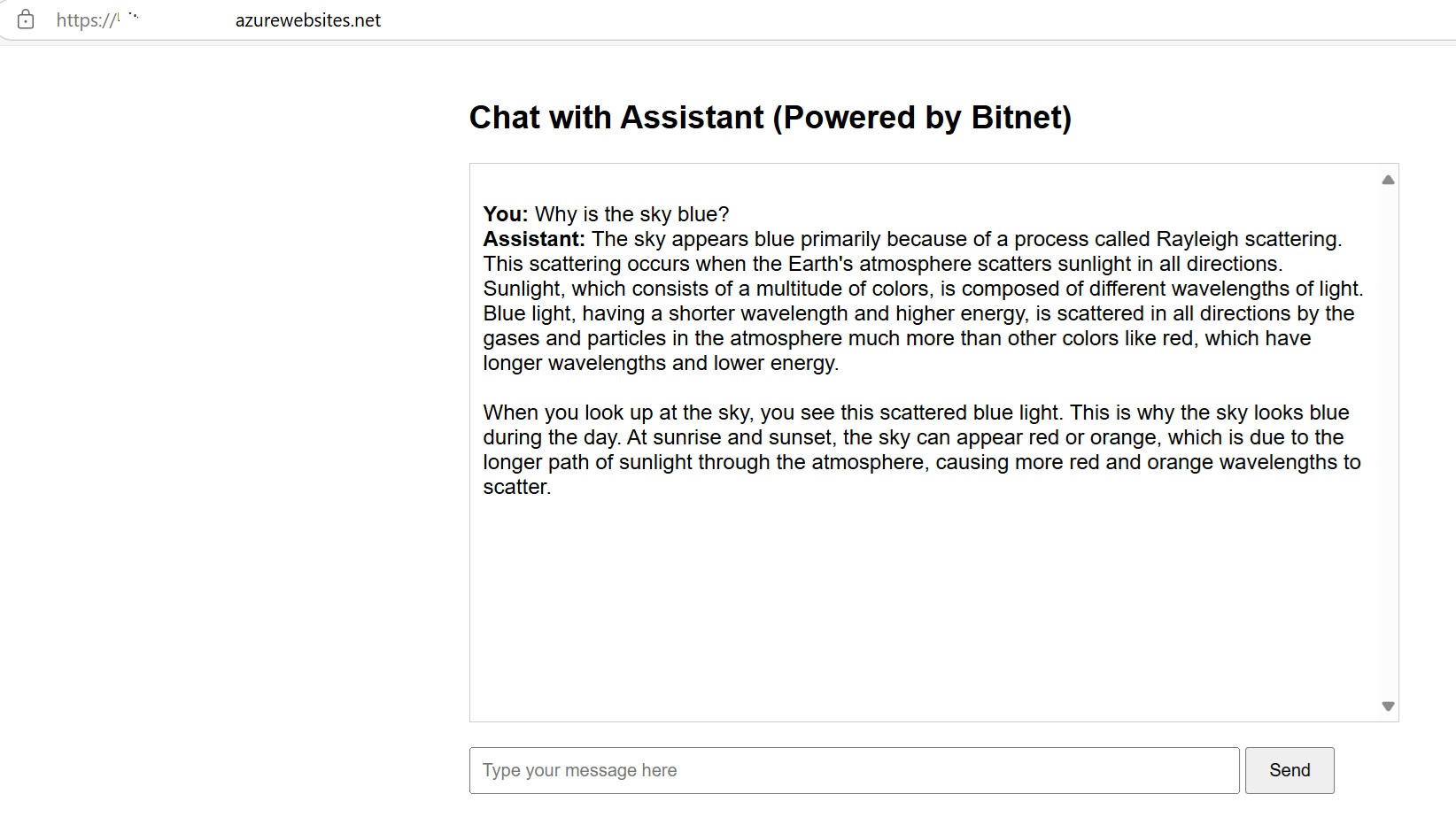
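After a restart, the sidecar needs some time to load the model, and chat requests sent before it is ready may fail. Here is a minimal sketch of a readiness check. It assumes the sidecar's llama.cpp-based server exposes the `/health` route that upstream llama.cpp's server provides, which returns HTTP 200 once the model is loaded. `wait_for_sidecar` and `HEALTH_URL` are hypothetical names and are not part of the sample.

```python
import http.client
import time
import urllib.request

# Assumption: the sidecar answers GET /health with HTTP 200 when ready
HEALTH_URL = "http://localhost:11434/health"


def wait_for_sidecar(url=HEALTH_URL, timeout=120.0, interval=2.0):
    """Poll `url` until it returns HTTP 200 or `timeout` seconds pass.

    Returns True if the sidecar became ready, False otherwise.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except (OSError, http.client.HTTPException):
            # Connection refused, HTTP errors such as 503 while loading
            # (urllib raises these as URLError, a subclass of OSError), timeouts
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

In the Flask app, you might call `wait_for_sidecar()` once at startup, or have `/chat` return a friendly "model is still loading" message when it returns False.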

Once complete, you can browse to your app URL — and you’ll see a simple, clean chat interface powered by BitNet!

Resources and Further Reading

- 🔗 BitNet GitHub Repository

  Explore the official BitNet project from Microsoft Research, including model details and technical documentation.

- 🔗 Azure App Service Documentation

  Learn more about Azure App Service and how to easily host web apps, APIs, and containers.

- 🔗 Azure App Service Sidecars Deep-Dive

  Understand how sidecars can run supporting services (like BitNet!) alongside your main app in App Service.

- 🔗 llama.cpp GitHub Repository

  Discover the lightweight C++ inference engine for LLMs that BitNet's server is built on.

- 🔗 Quickstart: Deploy a containerized app to App Service

  Step-by-step guide to deploying your own Docker container on Azure App Service.

- 🔗 BitNet model container image

  The ready-to-use BitNet container image you can deploy today on App Service.

Closing Thoughts

We’re entering an exciting new era where small, efficient language models like BitNet are making AI more accessible than ever — no massive infrastructure needed.

With Azure App Service, you can deploy these models quickly, scale effortlessly, and start adding real intelligence to your applications with just a few clicks.

We can’t wait to see what you build with BitNet and Azure App Service!

If you create something cool or have feedback, let us know — your experiments help shape the future of lightweight, powerful AI.