Welcome to Day 5️⃣ of our journey Building An AI App End-to-End On Azure!. It's time to wrap-up the week with a look at two key topics - deployment and responsible AI! Ready? Let's go!

What You'll Learn In This Post

- Deploying the chat AI (Contoso Chat)

- Deploying the chat UI (Contoso Web)

- Automate Deployments (CI/CD)

- Accelerate Solutions (Enterprise)

- Evaluate & Mitigate Harms (Responsible AI)

- Exercise: Explore training resources.

- Resources: Azure AI Studio Code-First Collection

1. Revisiting Contoso Chat

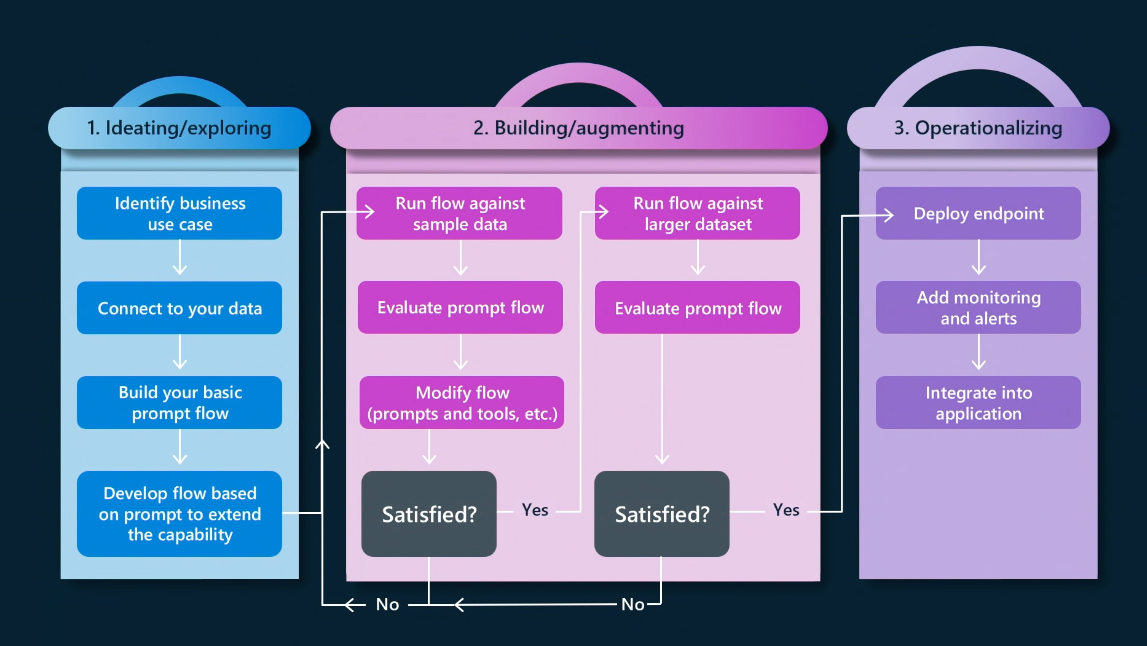

We started the week by talking about LLM Ops, and identifying the three core phases of the end-to-end lifecycle for a generative AI application. In the previous posts, we've mostly focused on the first two phases: ideating (building & validating a starter app) and augmenting (evaluating & iterating app for quality). In this post, we'll focus on phase 3: operationalizing the application to get it ready for real-world usage.

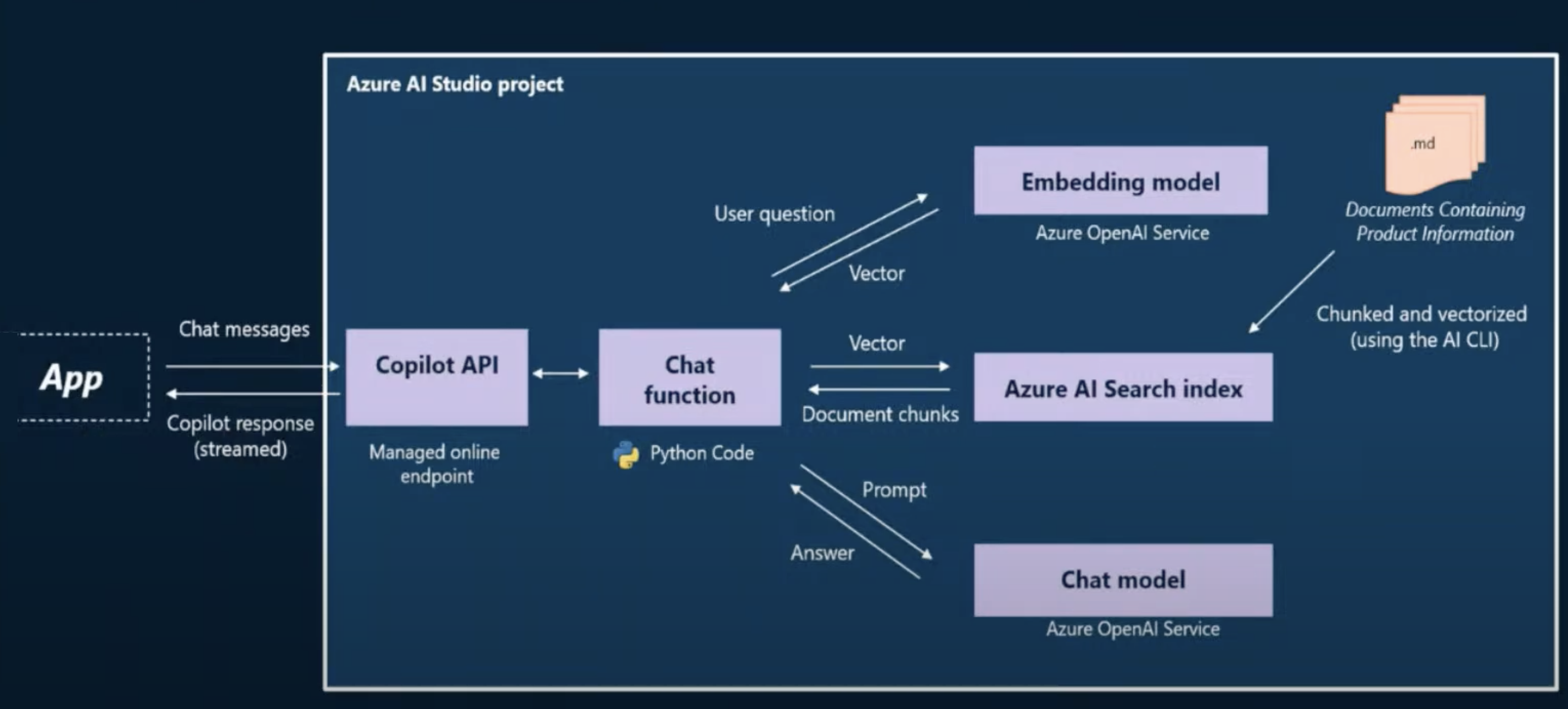

First, let's remind ourselves of the high-level architecture for a copilot application. Our solution has two components:

- Backend: The chat AI app that is deployed to provide a hosted API endpoint.

- Frontend: The chat UI app that is deployed to support user interactions with API.

Let's look at what deployment means in each case:

2. Deploy your chat AI app

In our example, the chat AI is implemented by the Contoso Chat sample. Deploying this chat AI solution involves three steps.

- Deploy the Models

- Deploy the Flows

- Deploy the Web App

Let's look at the first two in this section, starting with model deployment. Azure AI Studio has a rich model catalog from providers including OpenAI, HuggingFace, Meta and Microsoft Research. Some models can be deployed as a service (with a pay-as-you-go subscription) while others require hosted, managed infra (with a standard Azure subscription). Our chat AI uses three models, all of which used the hosted, managed option.

gpt-35-turbo- for chat completion (core function)text-embedding-ada-002- for embeddings (query vectorization)gpt-4- for chat evaluation (responsible AI)

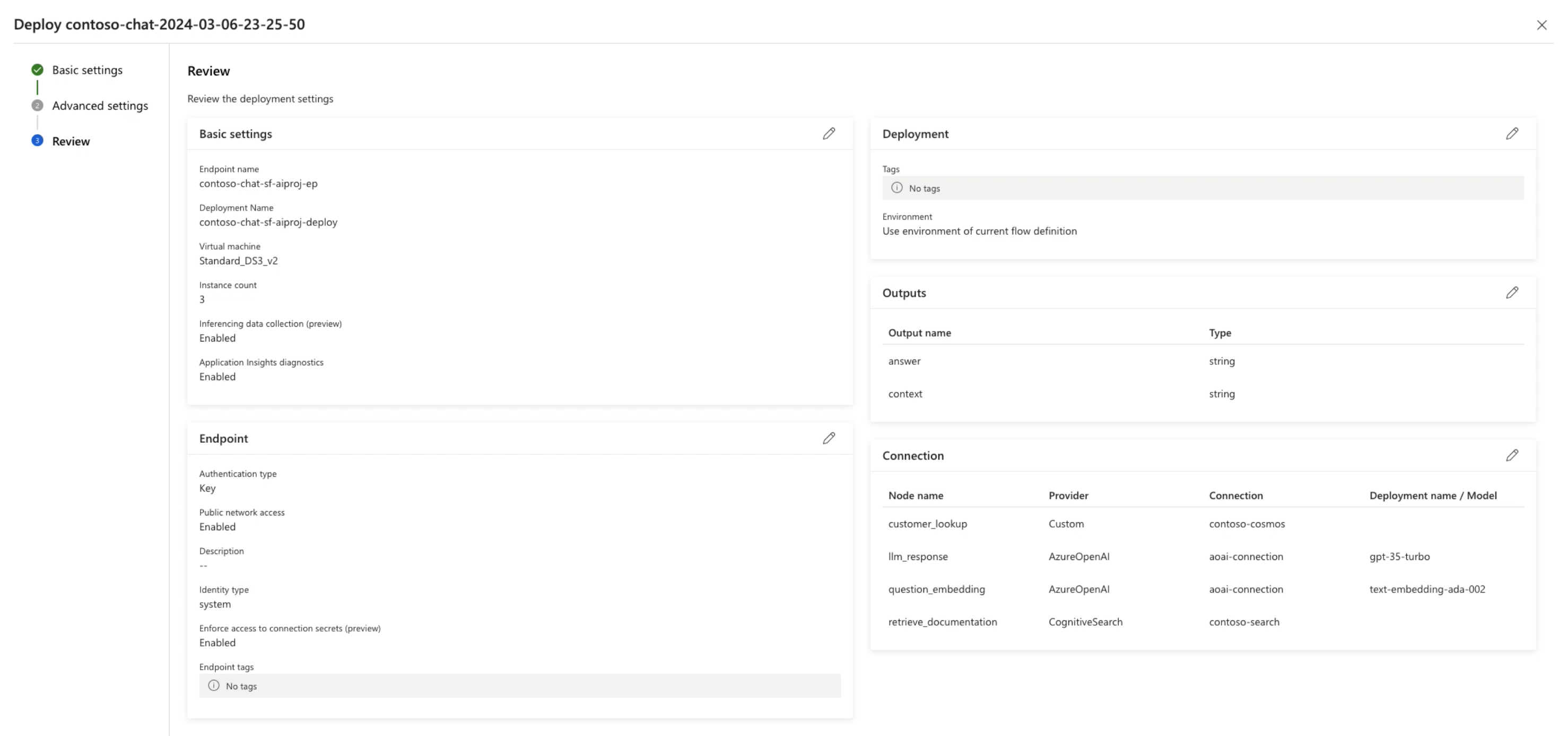

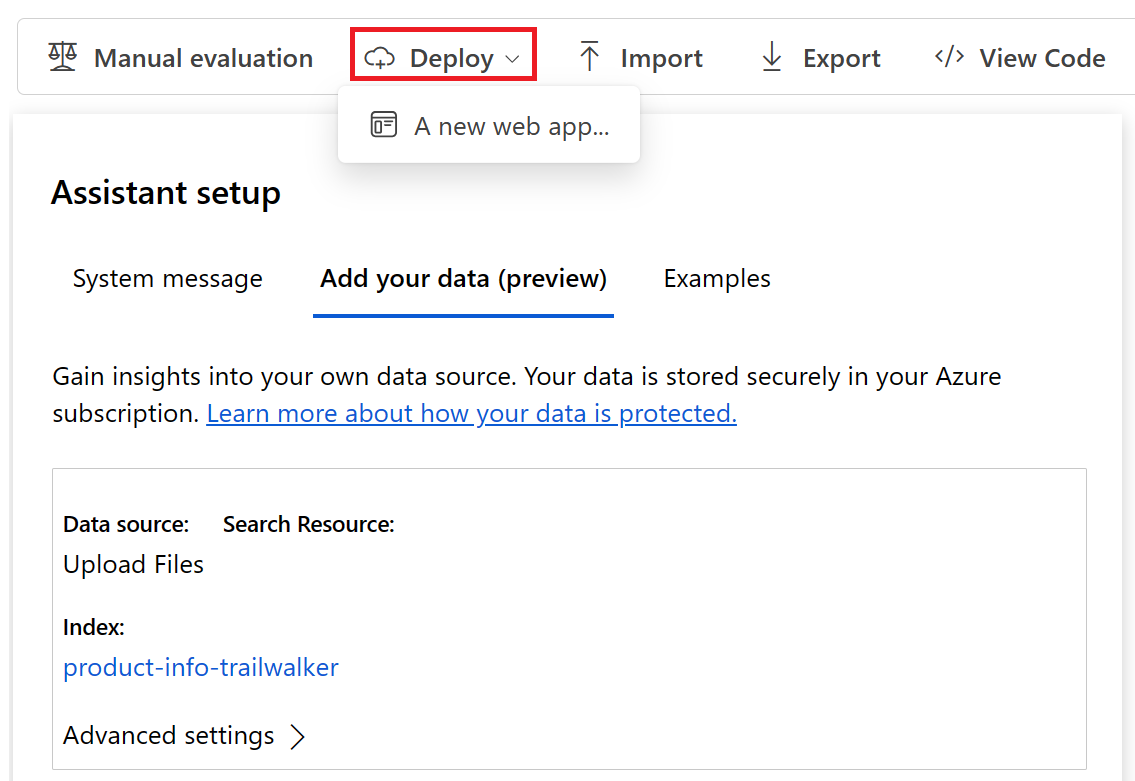

Next, let's talk about deploying flows. There are two kinds of flows we'll use in our chat AI - completion flows (that we'll use for real-time inference) and evaluation flows (that we'll use for quality assessment). Azure AI Studio provides low-code deployment via the UI and code-first deployment using the Azure AI SDK. In our Contoso Chat sample, we use the SDK to upload the flow to Azure, then deploy it using the UI as shown.

Finally, let's talk about deploying web apps. Here, the web app is a chat UI that can invoke requests on the deployed chat AI and validate the functionality in production. There are three options to consider:

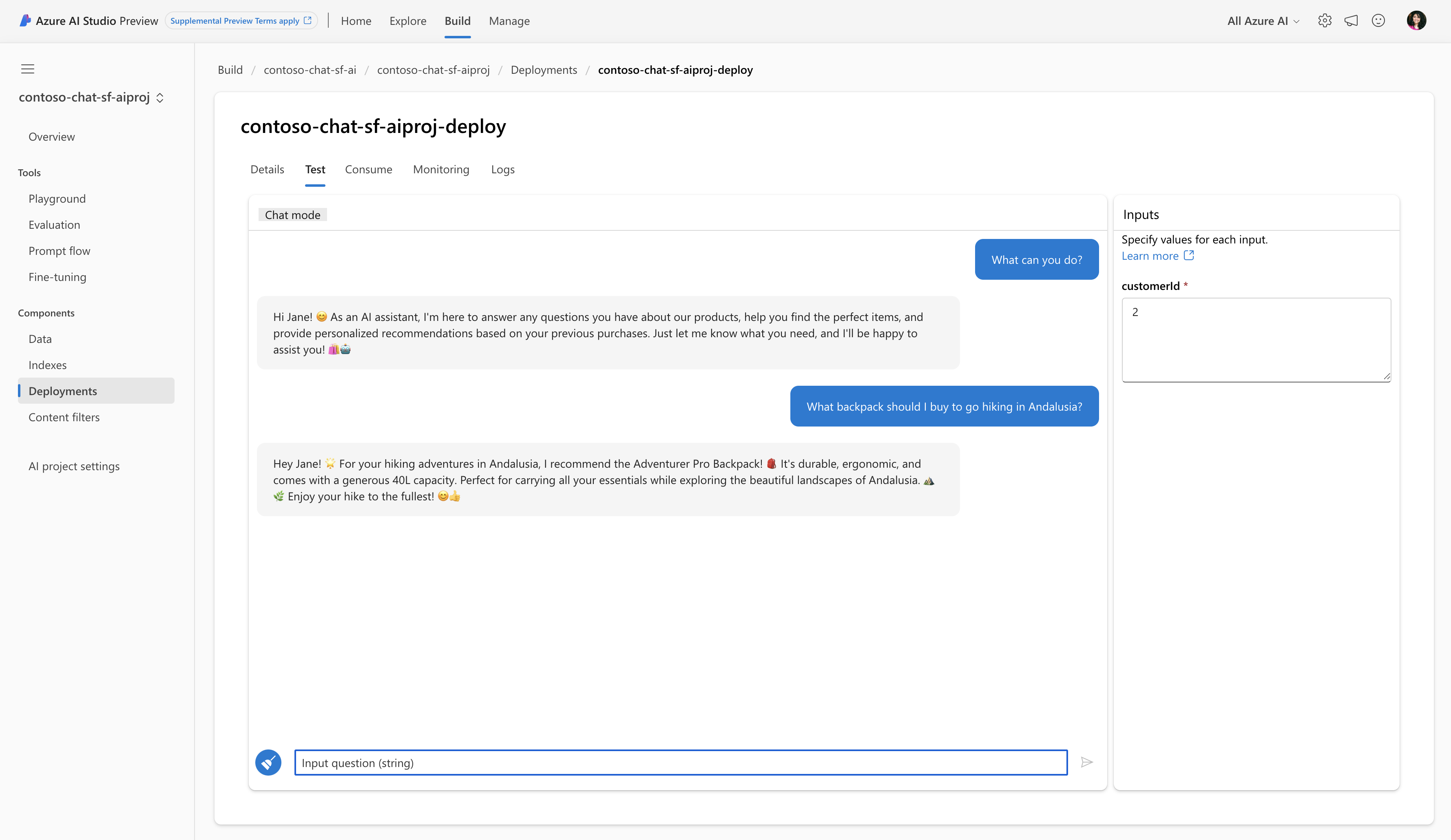

- Built-in Testing UI. When you deploy your flow via Azure AI Studio, you can visit the deployment details page and navigate to the Test tab, to get a built-in testing sandbox as shown. This provides a quick way to test prompts with each new iteration, in a manual (interactive) way.

- Deploy as Web App. Azure AI Studio also provides a Playground where you can deploy models directly (for chat completion) and add your data (preview) (for grounding responses) using Azure AI Search and Blob Storage resources, to customize that chat experience. Then deploy a new web app directly from that interface, to an Azure App Service resource.

- Dedicated Web App. This is the option we'll explore in the next section.

3. Deploy your chat UI app

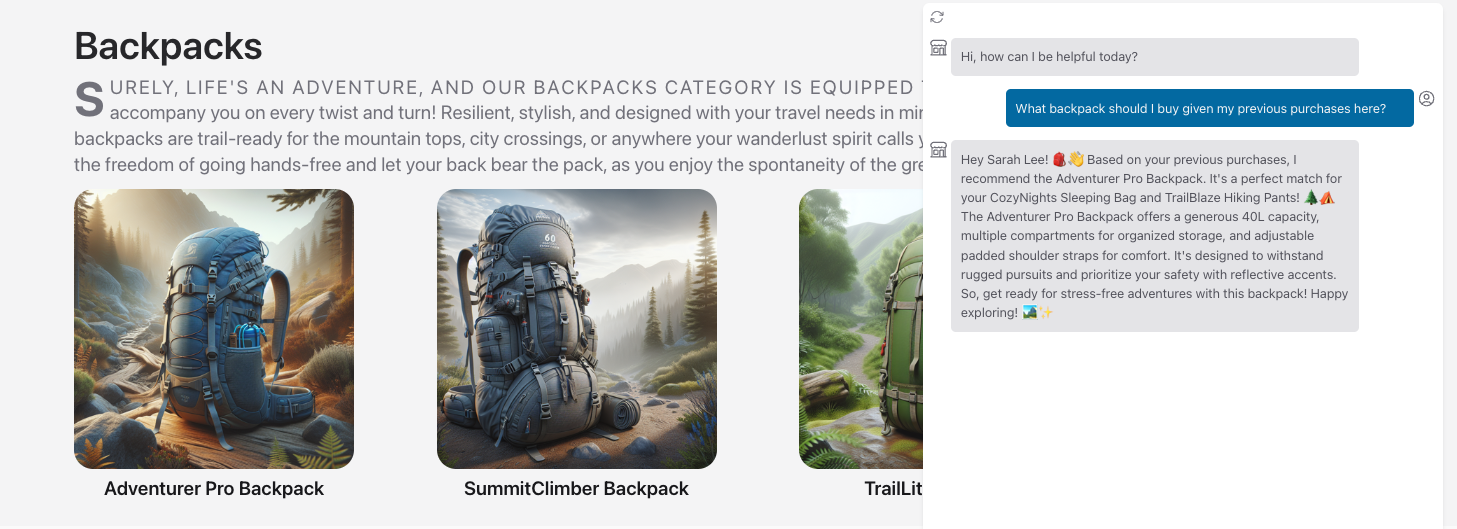

The Contoso Chat sample comes with a dedicated Contoso Web application that is implemented using the Next.js framework with support for static site generation. This provides a rich "Contoso Outdoors" website experience for users as shown below.

To use that application, simply setup the endpoint variables for Contoso Chat and deploy the app to Azure App Service. Alternatively, you can use this fork of the application to explore a version that can be run in GitHub Codespaces (for development) and deployed to Azure Static Web Apps (for production) using GitHub Actions for automated deploys. Once deployed, you can click the chat icon onscreen *bottom right) to see the chat dialog as shown in the screenshot above, and interact with the deployed Contoso chat AI.

4. Automate your chat AI deployment

The Contoso Chat sample is a constantly-evolving application sample that is updated regularly to reflect both the changes to Azure AI Studio (preview) and showcase new capabilities for end-to-end development workflows. You can currently explore two additional capabilities implemented in the codebase, to streamline your deployment process further.

- Using GitHub Actions. The sample has instructions to Deploy with GitHub Actions instead of the manual Azure AI Studio based deployment step we showed earlier. By setting up the actions workflow, you can automated deployments on every commit or PR, and get a baseline CI/CD pipeline for your chat AI, to build on later.

- Using Azure Developer CLI. The sample was just azd-enabled recently, making it possible to use the Azure Developer CLI as a unified tool to accelerate the end-to-end process from provisioning the resources to deploying the solution. The azd template adds support for infrastructure-as-code, allowing your application to have a consistent and repeatable deployment blueprint for all users. You can also browse the azd template gallery for other ChatGPT style application examples.

Note that the Contoso Chat sample is a demo application sample that is designed to showcase the capabilities of Azure AI Studio and Azure AI services. It is not a production-ready application, and should be used primarily as a learning tool and starting point for your own development.

5. Enterprise Architecture Options

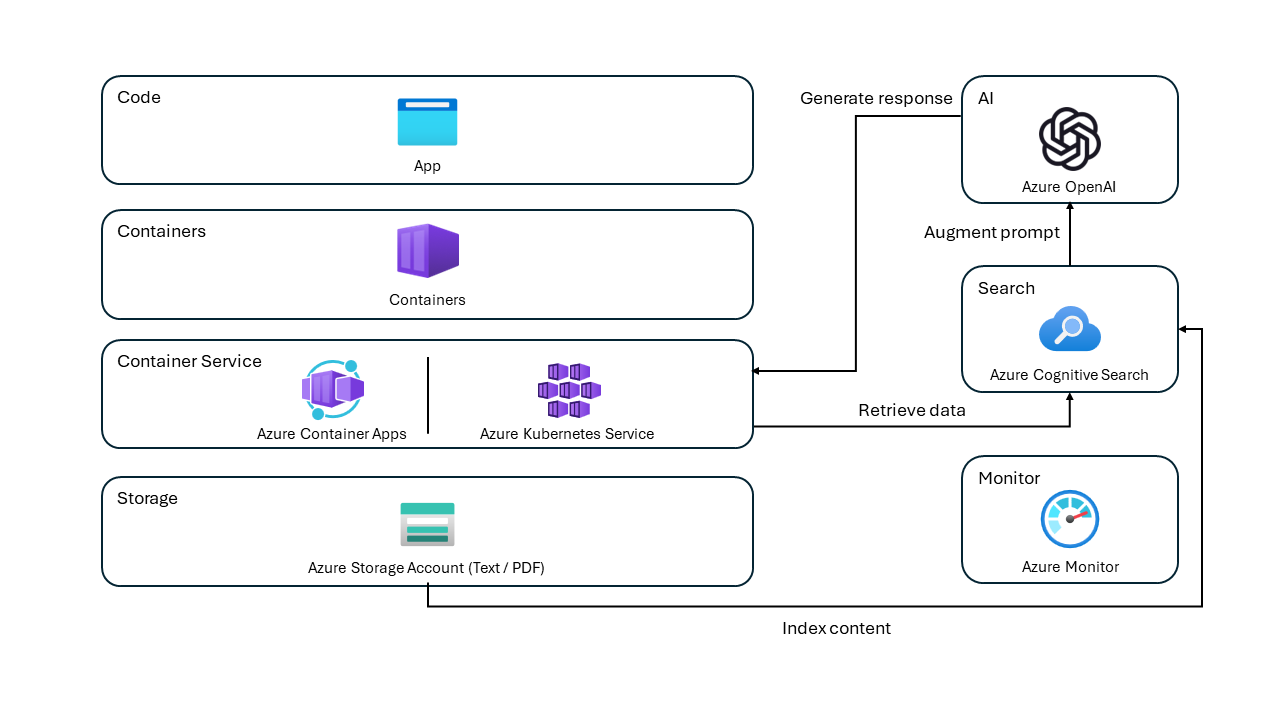

The objective of this series was to familiarize you with the Azure AI Studio (preview) platform and the capabilities it provides for building generative AI applications. And to give you a sense of how to build, run, test and deploy, your chat AI application for real-world use. But the platform is still in preview (and evolving rapidly). So what are your options if you want to build and deploy generative AI solutions at enterprise scale today? How can you design it using a well-architected cloud framework with cloud-native technologies like Azure Container Apps or Azure Kubernetes Service?

Here are some open-source samples and guidance you can explore to start with:

- ChatGPT + Enterprise data with Azure Open AI and AI Search (Python) - open-source sample that uses Azure App Service, Azure Open AI, Azure AI Search and Azure Blob Storage, for an enterprise-grade solution grounded in your (documents) data.

- ChatGPT + Enterprise data with Azure Open AI and AI Search (.NET) - open-source sample chat AI for a fictitious company called "Contoso Electronics" using the application architecture shown below. This blog post provides more details.

- Chat with your data Solution Accelerator - uses Azure App Service, Azure Open AI, Azure AI Search and Azure Blob Storage, for an end-to-end baseline RAG sample that goes beyond the Azure OpenAI Service On Your Data feature (GA in Feb 2024).''

- Built a private ChatGPT style app with enterprise-ready architecture - a blog post from the Microsoft Mechanics team that uses an open-source chat UI sample and discusses how to enhance the chat experience with Azure AI Studio and streamline setup by using Azure Landing Zones.

We covered a lot today - but there's one last thing we should talk about before we wrap up. Responsible AI.

6. Responsible AI In Practice

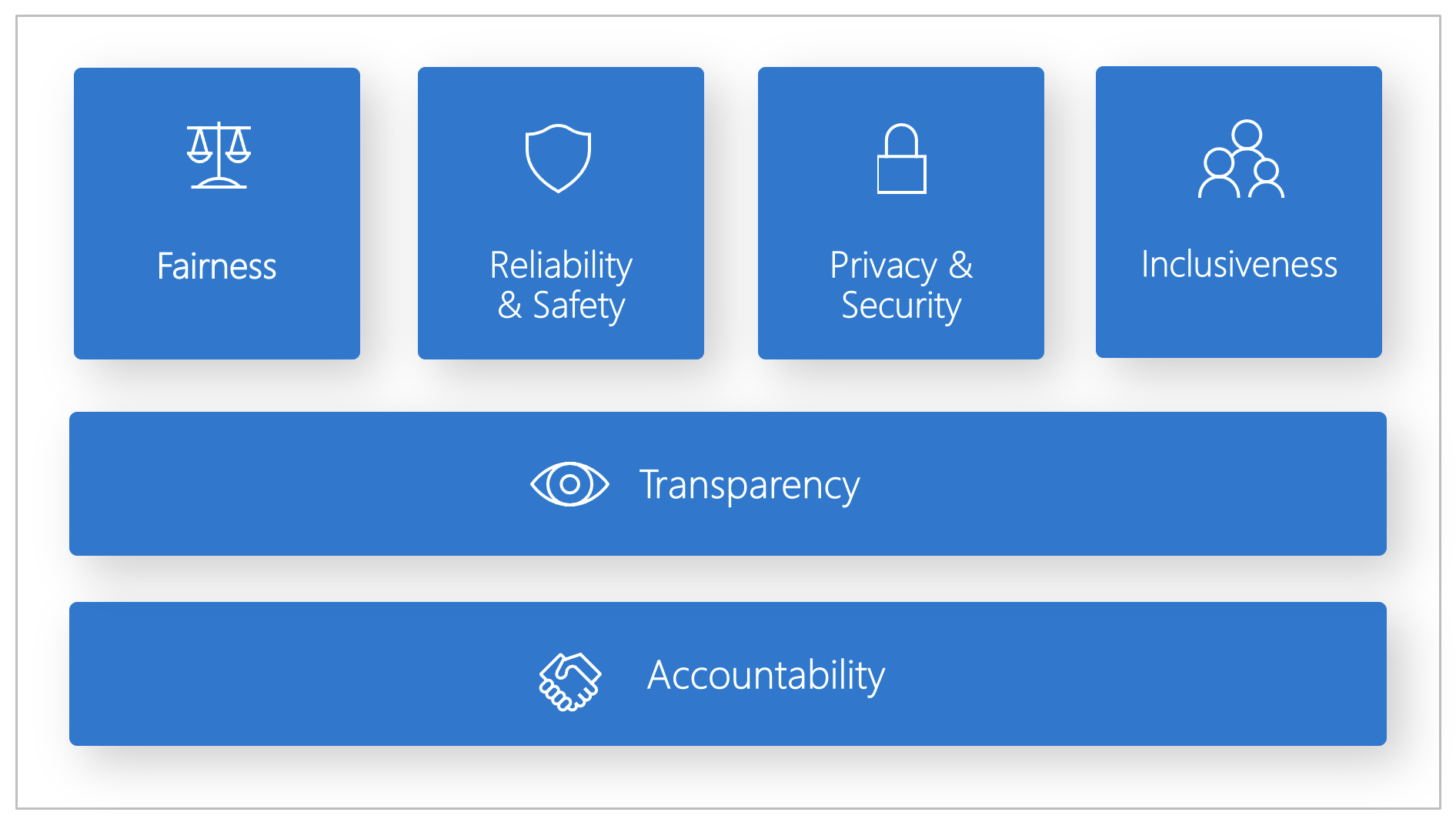

6.1 Principles of Responsible AI

By one definition, Responsible AI is approach to developing, assessing, and deploying AI systems in a safe, trustworthy, and ethical way. The Responsible AI standard was developed by Microsoft as a framework for building AI systems, using 6 principles to guide our design thinking.

| Principle | Description |

|---|---|

| Fairness | How might an AI system allocate opportunities, resources, or information in ways that are fair to the humans who use it? |

| Reliability & Safety | How might the system function well for people across different use conditions and contexts, including ones it was not originally intended for? |

| Privacy & Security | How might the system be designed to support privacy and security?. |

| Inclusiveness | How might the system be designed to be inclusive of people of all abilities? |

| Transparency | How might people misunderstand, misuse, or incorrectly estimate the capabilities of the system? |

| Accountability | How can we create oversight so that humans can be accountable and in control? |

6.2 Implications for Generative AI

The Fundamentals of Responsible Generative AI describes core guidelines for building generative AI solutions responsibly as a 4-step process:

- Identify potential harms relevant to your solution.

- Measure presence of these harms in outputs generated by your solution.

- Mitigate harms at multiple layers to minimize impact, and ensure transparent communication about potential risks to users.

- Operate your solution responsibly by defining and following a deployment and operational readiness plan.

6.3 Identify Potential Harms

The first step of the process is to identify potential harms in your application domain using a 4-step process:

- Identify potential harms (offensive, unethical, fabrication) that may occur in generated content.

- Assess likelihood of each occurrence, and severity of impact.

- Test and verify if harms occur, and under what conditions.

- Document and communicate potential harms to stakeholders.

6.4 Measure Presence of Harms

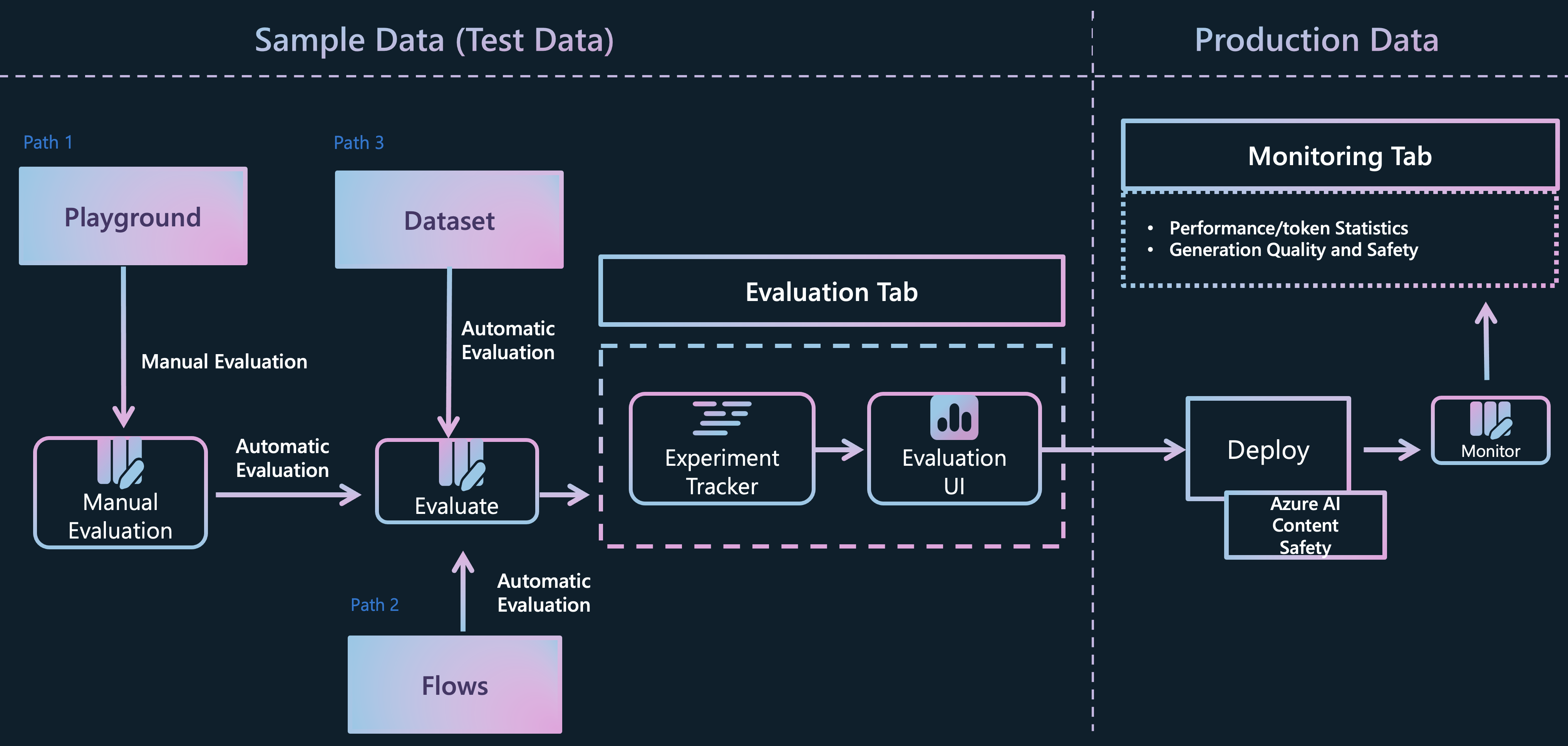

Evaluation of generative AI applications is the process of measuring the presence of identified harms in the generated output. Think of it as a 3-step process:

- Prepare a diverse selection of input prompts that may result in the potential harms documented.

- Submit prompts to your AI application and retrieve generated output

- Evaluate those responses using pre-defined criteria.

Azure AI Studio provides many features and pathways to support evaluation. Start with manual evaluation (small set of inputs, interactive) to ensure coverage and consistency. Then scale to automated evaluation (larger set of inputs, flows) for increased coverage and operationalization.

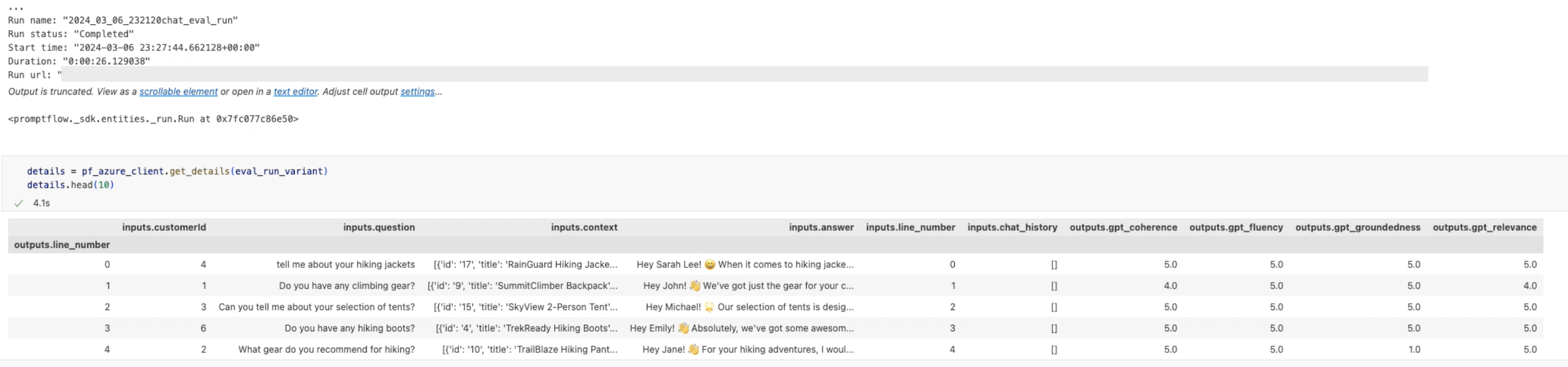

But what metrics can we use to quantify the quality of generated output? Quantifying accuracy is now complicated because we don't have access to a ground truth or deterministic answer that can serve as a baseline. Instead, we can use AI-assisted metrics - where we instruct another LLM to score your generated output for quality and safety using the guidelines and criteria you provide.

- Quality is measured using metrics like relevance, coherence and fluency.

- Safety is measured using metrics like groundedness and content harms.

In our Contoso Chat app sample, we show examples of local evaluation (with single and multiple metrics) and batch runs (for automated evaluation in the cloud). Here's an exmaple of what the output from the local evaluation looks like:

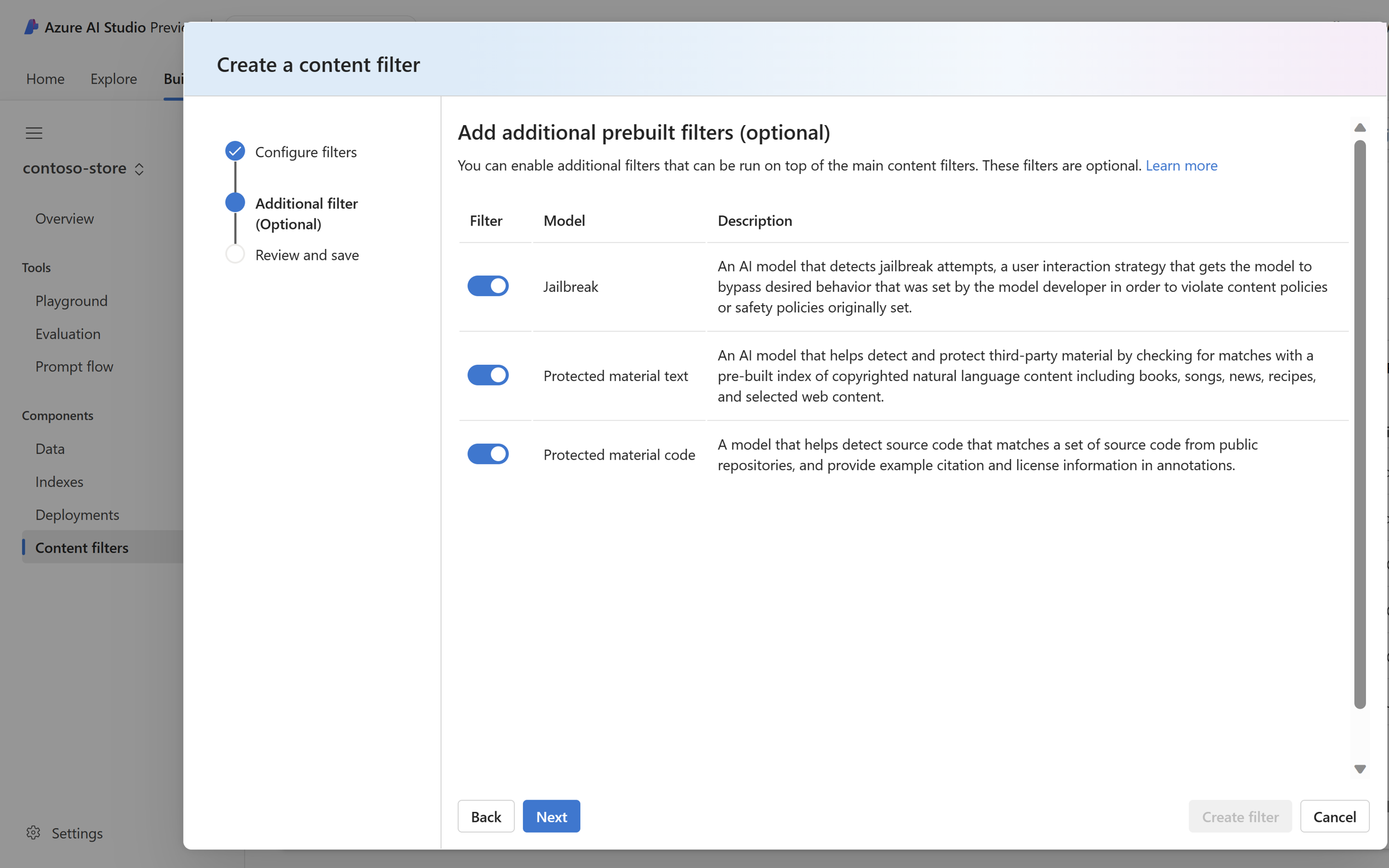

6.5 Content Safety for Mitigation

One of the most effective ways to mitigate harmful responses from generative AI models in Azure OpenAI is to use Content Filtering powered by the Azure AI Content Safety service. The service works by running the user input (prompt) and the generated output (completion) through an ensemble of classification models that are trained to detect, and act on, identified caegories of harmful content.

Azure AI Studio provides a default content safety filter, and allows you to create custom content filters with more tailored configurations if you opt-in to that capability first. These filters can then be applied to a model or app deployment to ensure that inputs and outputs are gated to meet your content safety requirements.

The screenshot shows the different content filtering categories and the level of configurability each provides. This allows us to identify and mitigate different categories of issues (Violence, Hate, Sexual and Self-harm) by automatically detecting these in both user prompts (input) and model completions (output). An additional filter (optional) lets you enable filters for more advanced usage scenarios including jailbreaks, protected content or code as described here.

Once the filters are applied, the deployment can be opened up in the Playground, or using an integrated web app, to validate that the filters work. Check out this #MSIgnite session from the Responsible AI team for strategies and examples for responsible AI practices with prompt engineering and retrieval augmented generation patterns in context.

7. Exercise:

We covered a lot today - and that also brings us to the end of our journey into Azure AI in this series. Want to get hands-on experience with some of these concepts? Here are some suggestions:

- Walk through the Contoso Chat sample end-to-end, and get familiar with the Azure AI Studio platform and the LLM Ops workflow for generative AI solutions.

- Explore the Responsible AI Developer Hub and try out the Content Safety and Prompt flow Evaluation workshops to get familiar with the Responsible AI principles and practices for generative AI.

8. Resources

We covered a lot this week!! But your learning journey with Generative AI development and Azure AI is just beginning. Want to keep going? Here are three resources to help you: