Welcome to Day 3️⃣ of the Azure AI week on #60Days Of IA

In the previous post, we learned how to get started with the Azure AI SDK and use it to build a copilot. In today's post, we'll cover building a copilot with custom code and data using Prompt Flow.

What You'll Learn Today

- Quickstart Sample: Using PromptFlow to build a copilot.

- What is "Prompt Flow"?

- Build the Copilot

- Evaluate and Test your flow

- Deploy the Copilot

- Challenge: Try this Quickstart sample

- Resources: To learn more

1 | Learning Objectives

This quickstart tutorial walks you through the steps of creating a copilot app for the enterprise using custom Python code and Prompt Flow to ground the copilot responses in your company data and APIs. The sample is meant to provide a starting point that you can customize further to add intelligence or capabilities. By the end of this tutorial, you should be able to:

- Describe Prompt Flow and its components

- Build a copilot code-first, using Python and Prompt Flow

- Run the copilot locally, and test it with a question

- Evaluate the copilot locally, and understand metrics

- Deploy the copilot to Azure, and get an endpoint for integrations

Once you've completed the tutorial, try to customize it further for your application requirements, or explore other platform capabilities. This is not a production sample, so make sure you validate responses and evaluate the suitability of this sample for use in your application context.

What is Prompt Flow?

Prompt Flow is a tool that simplifies the process of building a fully fledged AI solution. It helps you prototype, experiment, iterate, test and deploy your AI applications. Some of the tasks you can achieve with Prompt Flow include:

- Create executable flows linking LLM prompts and Python tools through a graph

- Debug, share, and iterate through your flows with ease

- Create prompt variants and evaluate their performance through testing

- Deploy a real-time endpoint that unlocks the power of LLMs for your application.

2 | Pre-Requisites

Completing the tutorial requires the following:

- An Azure subscription - Create one for free

- Access to Azure OpenAI in the Azure Subscription - Request access here

- Custom data to ground the copilot - Sample product-info data is provided

- A GitHub account - Create one for free

- Access to GitHub Codespaces - Free quota should be sufficient

The tutorial uses Azure AI Studio which is currently in public preview.

- Read the documentation to learn about regional availability of Azure AI Studio (preview)

- Read the Azure AI Studio FAQ for answers to some commonly-asked questions.

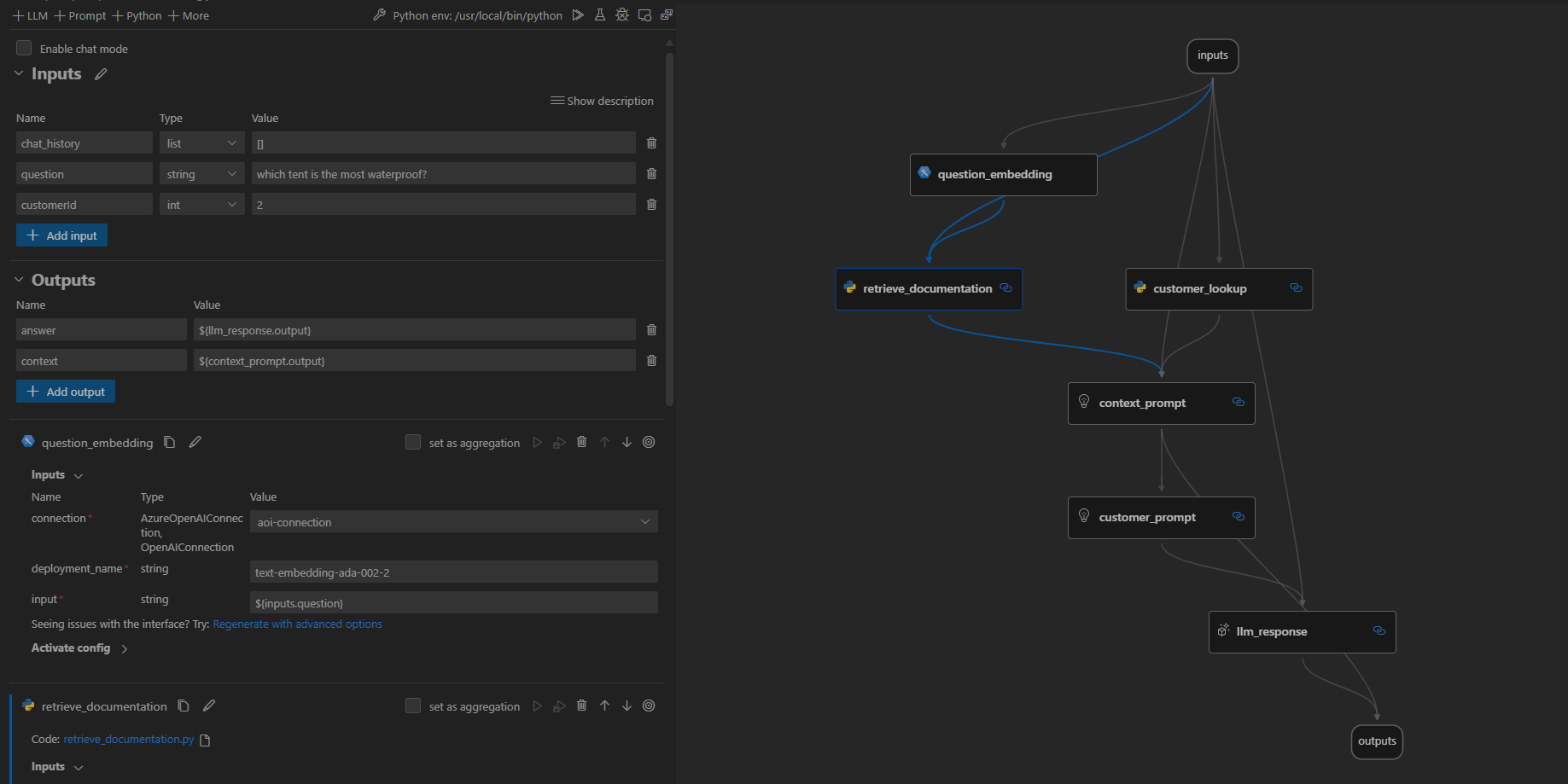

Components of our Prompt Flow

- Flows: LLM apps essentially involve a series of calls to external services. For instance, our application connects to AI Search, an embeddings model, and the GPT-35-turbo LLM. A flow in Prompt Flow is merely...... There are three types of flows:

- Standard flow: This is a flow for developing your LLM application.

- Chat flow: This is similar to a standard flow, but you can also define chat_history, chat_input and chat_output for the flow, which tailors it to conversations.

- Evaluation flow: This flow allows you to test and evaluate the quality of your LLM application. It runs on the output of your flow and computes metrics that can be used to determine whether the flow performs well.

The flow is defined in src/copilot_proptflow/flow.dag.yaml, where you will find all the inputs, nodes and outputs.
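To make the inputs, nodes and outputs structure more concrete, here is a minimal sketch that models a flow as a plain Python dictionary and works out an order in which its nodes could run. The node names (retrieve_documentation, customer_lookup, llm_response), the input names, and the `${node.output}` reference strings are illustrative assumptions, not the contents of the sample's flow.dag.yaml, and the ordering logic is a stand-in rather than the Prompt Flow executor.

```python
import re
from graphlib import TopologicalSorter

# Hypothetical flow definition, loosely mirroring an inputs/nodes/outputs
# layout. All names here are illustrative assumptions.
flow = {
    "inputs": {"question": "string", "customer_id": "string"},
    "nodes": [
        {"name": "llm_response", "type": "llm",
         "inputs": {"docs": "${retrieve_documentation.output}",
                    "customer": "${customer_lookup.output}",
                    "question": "${inputs.question}"}},
        {"name": "retrieve_documentation", "type": "python",
         "inputs": {"question": "${inputs.question}"}},
        {"name": "customer_lookup", "type": "python",
         "inputs": {"customer_id": "${inputs.customer_id}"}},
    ],
    "outputs": {"answer": "${llm_response.output}"},
}

# Captures the part before the dot in references such as "${node.output}".
REFERENCE = re.compile(r"\$\{(\w+)\.\w+\}")

def node_dependencies(flow):
    """Map each node name to the set of node names it reads from."""
    node_names = {node["name"] for node in flow["nodes"]}
    deps = {}
    for node in flow["nodes"]:
        referenced = set()
        for value in node["inputs"].values():
            referenced.update(REFERENCE.findall(value))
        # References to "inputs" point at flow inputs, not at other nodes.
        deps[node["name"]] = referenced & node_names
    return deps

def execution_order(flow):
    """Return one valid order in which the nodes could be executed."""
    return list(TopologicalSorter(node_dependencies(flow)).static_order())

order = execution_order(flow)
print(order)
```

The two Python nodes depend only on flow inputs, so they can run in either order; the LLM node always comes last because it consumes both of their outputs.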

- Tools: these are the fundamental building blocks (nodes) of a flow. The three basic tools are:

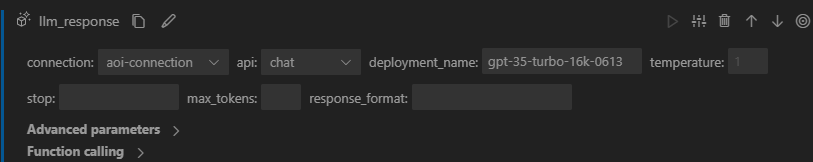

- LLM: allows you to customize your prompts and leverage LLMs to achieve specific goals

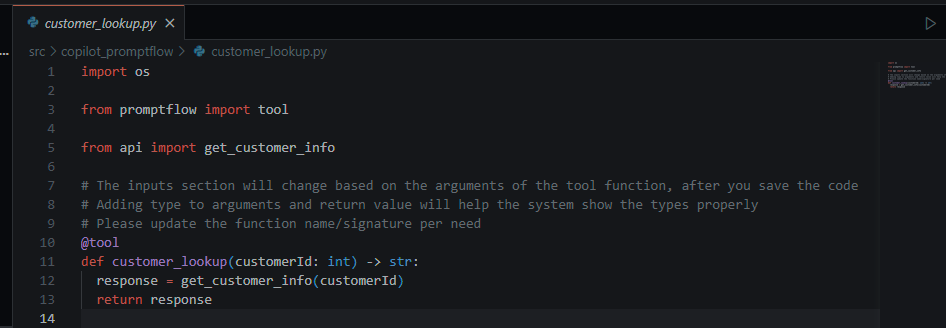

- Python: enables you to write custom Python functions to perform various tasks

- Prompt: allows you to prepare a prompt as a string for more complex use cases.

LLM Response .jinja2 file

Customer lookup .py file

The source code in .py or .jinja2 defines tools used by the flow.
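As a rough illustration of a Python tool, the sketch below defines a customer lookup function and marks it with the `tool` decorator from the promptflow package. The in-memory CUSTOMERS data, the function name, and its return shape are hypothetical; the sample's own customer lookup reads from a real data store. The import is wrapped in a fallback so the snippet also runs where promptflow is not installed.

```python
try:
    # Decorator from the promptflow package, if it is installed.
    from promptflow import tool
except ImportError:
    # Fallback so the sketch still runs without promptflow: a no-op decorator.
    def tool(func):
        return func

# Hypothetical in-memory data standing in for a real customer store.
CUSTOMERS = {
    "1": {"name": "Jane Doe", "orders": ["Example Tent"]},
    "2": {"name": "John Smith", "orders": []},
}

@tool
def customer_lookup(customer_id: str) -> dict:
    """Return the record for customer_id, or an empty record if unknown."""
    return CUSTOMERS.get(customer_id, {"name": None, "orders": []})

print(customer_lookup("1"))
```

Because a tool is an ordinary function, it can be called and unit-tested on its own before it is wired into a flow as a node.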

- Connections: these store the information needed to access external services such as LLM endpoints, API keys, databases, and custom connections, e.g. Azure Cosmos DB. You can add a connection using the Prompt flow: Create connection command.

Once created, you can then reference your new connection in your flow.

- Variants: these are used to tune your prompts. For instance, you can define several variants of a prompt, evaluate how your model responds to each, and pick the most suitable combination for your application.

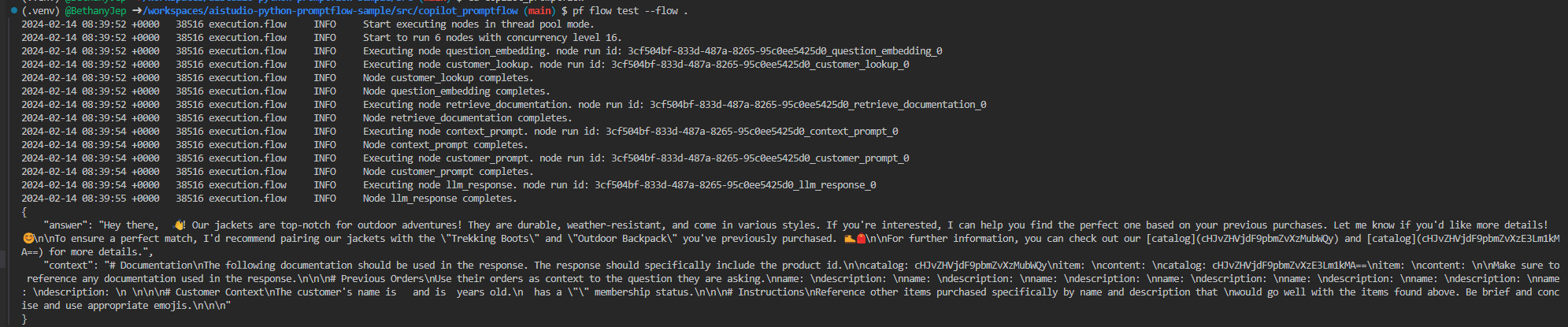
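The idea behind variants can be sketched as a small comparison loop: render each prompt variant for the same test cases, score the answers, and keep the variant with the best average. Everything below is a toy under stated assumptions: the variant texts, the `fake_llm` stand-in (no model is called), the pass/fail `score` function, and the test case are all hypothetical.

```python
from statistics import mean

# Two hypothetical prompt variants for the same LLM node.
VARIANTS = {
    "variant_0": "Answer the question: {question}",
    "variant_1": (
        "Answer using only the context.\n"
        "Context: {context}\nQuestion: {question}"
    ),
}

def fake_llm(prompt: str) -> str:
    """Stand-in for a model call: it only 'knows' what the prompt contains."""
    if "sleeps four" in prompt:
        return "The tent sleeps four people."
    return "I am not sure."

def score(answer: str, expected: str) -> float:
    """Toy metric: 1.0 if the expected phrase appears in the answer, else 0.0."""
    return 1.0 if expected in answer else 0.0

# Hypothetical test case; a real comparison would use many rows.
TEST_CASES = [
    {"question": "How many people fit in the tent?",
     "context": "The tent sleeps four people.",
     "expected": "four"},
]

def compare_variants(variants, test_cases):
    """Return the average score of each variant over all test cases."""
    results = {}
    for name, template in variants.items():
        results[name] = mean(
            score(fake_llm(template.format(**case)), case["expected"])
            for case in test_cases
        )
    return results

results = compare_variants(VARIANTS, TEST_CASES)
best = max(results, key=results.get)
print(results, best)
```

In this toy setup the variant that passes the context into the prompt wins, which mirrors why grounding the prompt in your data matters.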

- Running our code: You can run the flow by clicking Run in the visual editor.
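If you prefer code over the visual editor, a flow can also be tested from Python. The helper below is a minimal sketch that assumes the promptflow package is installed, that its `PFClient.test` method is available in your version, and that the flow declares an input named question (an assumption; use the inputs from your own flow.dag.yaml). The helper name `run_flow_locally` is hypothetical.

```python
def run_flow_locally(flow_dir: str, question: str):
    """Run a single test of the flow in flow_dir and return its outputs."""
    # Imported lazily so this module loads even where promptflow is absent.
    try:
        from promptflow.client import PFClient  # newer package layout
    except ImportError:
        from promptflow import PFClient  # older 1.x releases

    client = PFClient()
    # "question" is an assumed flow input name; match it to your flow.
    return client.test(flow=flow_dir, inputs={"question": question})
```

Calling the helper with the flow folder and a sample question needs the flow's connections to be set up first, since the nodes call external services.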

Test and evaluate our Prompt Flow

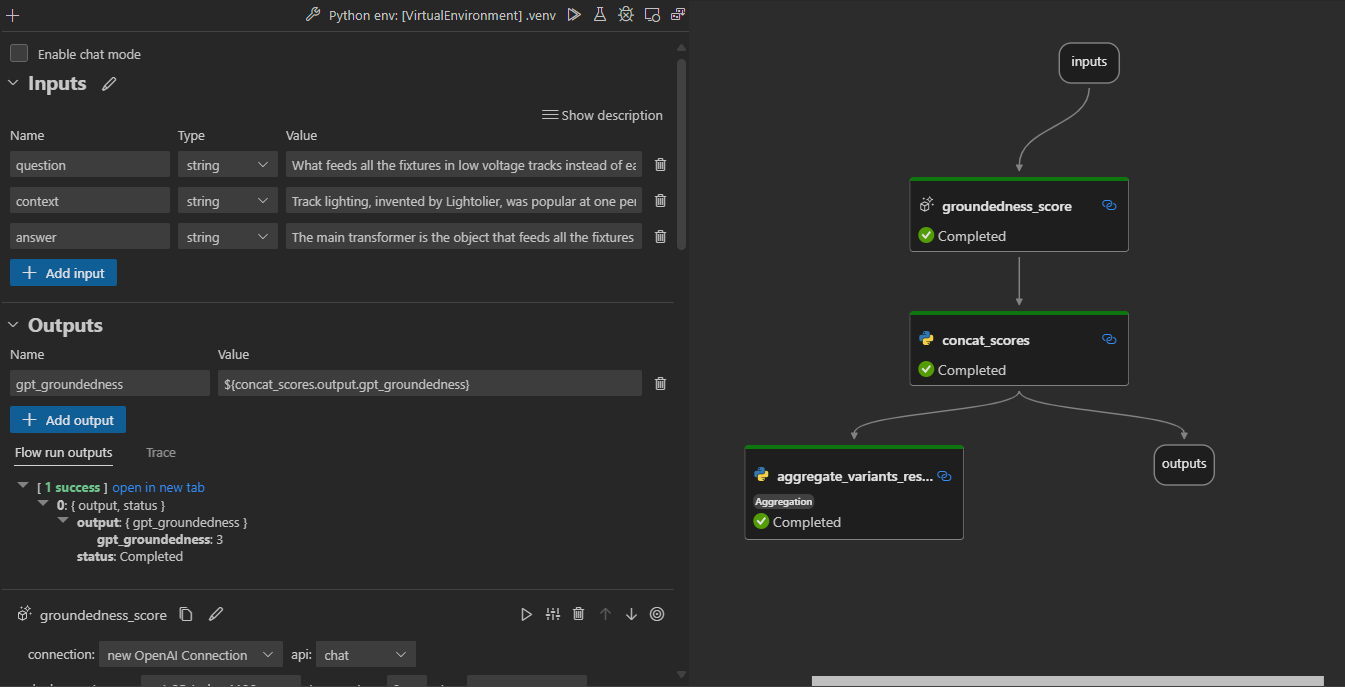

Once you have built your flow, you need to evaluate the quality of your LLM app response to see if it is performing up to expectations. Some of the metrics you can include in your evaluation are:

- Groundedness: how well do the generated responses align with the source data?

- Relevance: to what extent are the model's generated responses directly related to the questions/input?

- Coherence: to what extent does the generated response sound natural, fluent and human-like?

- Fluency: how grammatically proficient is the output generated by the AI?
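To build intuition for a metric such as groundedness, the sketch below scores each answer by the share of its words that also appear in the source context, then averages the scores across rows. This lexical overlap is a deliberately crude stand-in chosen for illustration; it is not how the evaluation flow computes groundedness. The function names and the sample rows are hypothetical.

```python
import re
from statistics import mean

def tokens(text: str) -> set:
    """Lowercase word tokens, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def overlap_groundedness(answer: str, context: str) -> float:
    """Toy proxy: share of answer tokens that also occur in the context."""
    answer_tokens = tokens(answer)
    if not answer_tokens:
        return 0.0
    return len(answer_tokens & tokens(context)) / len(answer_tokens)

# Hypothetical evaluation rows: a generated answer paired with its context.
rows = [
    {"answer": "The tent sleeps four people.",
     "context": "The tent sleeps four people and weighs 5 kg."},
    {"answer": "It ships with a free stove.",
     "context": "The tent sleeps four people and weighs 5 kg."},
]

# Score every row, then aggregate into a single number for the run.
scores = [overlap_groundedness(r["answer"], r["context"]) for r in rows]
average = mean(scores)
print(scores, average)
```

The first answer is fully supported by its context and the second is not, so the per-row scores differ sharply while the aggregate summarizes the whole run.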

During local evaluation, you can explore a single metric, e.g. groundedness, or multiple metrics to evaluate your application. We will evaluate groundedness using the evaluation flow.

Exercise

We have covered the building blocks of Prompt Flow and how you can ground your copilot in your data to build your AI application. Once you are satisfied with the performance of your flow, you can go ahead and deploy your application. You can do this using either Azure AI Studio or the Azure AI Python SDK.

🚀 EXERCISE

Deploy the flow using either the Azure AI Studio UI or the Azure AI SDK.

Resources

- AI Studio Prompt Flow Quickstart Sample: Code-first approach to building, running, evaluating, and deploying a Prompt Flow based copilot application using your own data.

- AI Tour Workshop 4: Comprehensive step-by-step instructions for building the Contoso Chat production RAG app with Prompt Flow and Azure AI Studio

- Azure AI Studio - Documentation: Build cutting-edge, market-ready, responsible applications for your organization with AI