Welcome to Day 2️⃣ of #30DaysOfServerless!

Today, we kickstart our journey into serverless on Azure with a look at Functions as a Service. We'll explore Azure Functions, from core concepts to usage patterns.

Ready? Let's Go!

What We'll Cover

- What is Functions-as-a-Service (FaaS)?

- What is Azure Functions?

- Triggers, Bindings and Custom Handlers

- What is Durable Functions?

- Orchestrators, Entity Functions and Application Patterns

- Exercise: Take the Cloud Skills Challenge!

- Resources: #30DaysOfServerless Collection.

1. What is FaaS?

FaaS stands for Functions as a Service. But what does that mean for us as application developers? We know that building and deploying modern applications at scale can get complicated, and it starts with deciding on compute. In other words, we need to answer this question: "where should I host my application given my resource dependencies and scaling requirements?"

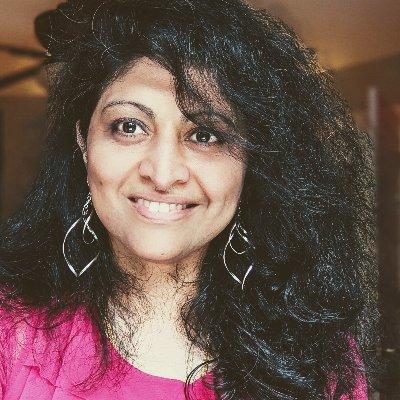

Azure provides a useful flowchart to guide this decision. You'll see that hosting options generally fall into three categories:

- Infrastructure as a Service (IaaS) - where you provision and manage virtual machines yourself (the cloud provider manages the underlying physical infrastructure).

- Platform as a Service (PaaS) - where you use a provider-managed hosting environment like Azure Container Apps.

- Functions as a Service (FaaS) - where you forget about hosting environments and simply deploy your code for the provider to run.

Here, "serverless" compute refers to hosting options where we (as developers) can focus on building apps without having to manage the infrastructure. See serverless compute options on Azure for more information.

2. Azure Functions

Azure Functions is the Functions-as-a-Service (FaaS) option on Azure. It is the ideal serverless solution if your application is event-driven with short-lived workloads. With Azure Functions, we develop applications as modular blocks of code (functions) that are executed on demand, in response to configured events (triggers). This approach brings us two advantages:

- It saves us money. On the Consumption plan, we pay only for the time our functions run.

- It scales with demand. There are three hosting plans (Consumption, Premium, and Dedicated) with different scaling behaviors.

Azure Functions can be programmed in many popular languages (C#, F#, Java, JavaScript, TypeScript, PowerShell or Python), with Azure providing language-specific handlers and default runtimes to execute them.

- What if we wanted to program in a non-supported language?

- Or we wanted to use a different runtime for a supported language?

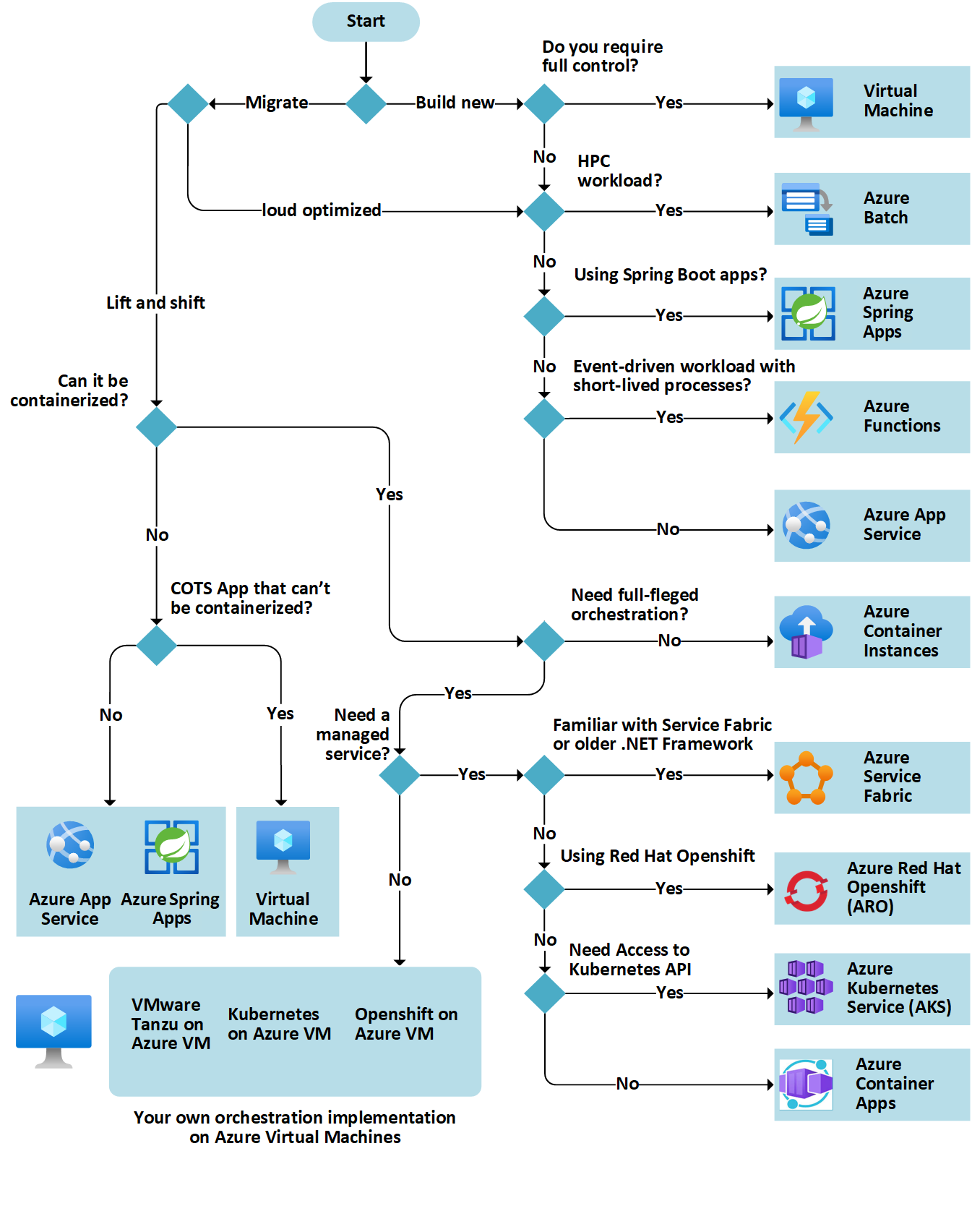

Custom Handlers have you covered! These are lightweight web servers that receive and process input events from the Functions host and return responses that can be delivered to any output targets. By this definition, custom handlers can be implemented in any language that can receive HTTP requests. Check out the quickstart for writing a custom handler in Rust or Go.
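To make the idea concrete, here is a minimal sketch of a custom handler written in plain Node.js (a supported language, used here only for readability; the same contract applies to Rust or Go). For a non-HTTP trigger, the host POSTs each invocation to `/<FunctionName>` with a JSON body containing `Data` and `Metadata`, and expects a JSON reply with `Outputs`, `Logs`, and `ReturnValue`; it passes the listening port in the `FUNCTIONS_CUSTOMHANDLER_PORT` environment variable. The function name `ProcessOrder` and the binding names `myQueueItem` and `outputBlob` are hypothetical, so check the custom handler docs for the exact payload of your trigger.

```javascript
// handler.js: a minimal custom handler sketch in plain Node.js (no frameworks).
// Assumes host.json points the Functions host at this file, e.g.
//   "customHandler": { "description": { "defaultExecutablePath": "node",
//                                       "arguments": ["handler.js"] } }
const http = require("http");

// Pure invocation logic: maps the host's request payload to a response payload.
// "ProcessOrder", "myQueueItem" and "outputBlob" are hypothetical names.
function handleInvocation(functionName, payload) {
  if (functionName !== "ProcessOrder") {
    return { status: 404, body: { Logs: [`Unknown function: ${functionName}`] } };
  }
  const item = (payload.Data || {}).myQueueItem; // trigger data, keyed by binding name
  return {
    status: 200,
    body: {
      Outputs: { outputBlob: `processed: ${item}` }, // values for output bindings
      Logs: [`ProcessOrder received ${item}`], // surfaced in the invocation logs
      ReturnValue: null,
    },
  };
}

// The host sets FUNCTIONS_CUSTOMHANDLER_PORT when it launches the handler,
// so the server only starts when running under the Functions host.
const port = process.env.FUNCTIONS_CUSTOMHANDLER_PORT;
if (port) {
  http
    .createServer((req, res) => {
      let raw = "";
      req.on("data", (chunk) => (raw += chunk));
      req.on("end", () => {
        const functionName = req.url.replace(/^\//, ""); // POST /<FunctionName>
        const { status, body } = handleInvocation(functionName, JSON.parse(raw || "{}"));
        res.writeHead(status, { "Content-Type": "application/json" });
        res.end(JSON.stringify(body));
      });
    })
    .listen(port);
}

module.exports = { handleInvocation };
```

Keeping the invocation logic in a pure function separate from the HTTP plumbing makes it easy to test without starting the Functions host.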

We talked about what functions are (code blocks). But when are they invoked or executed? And how do we provide inputs (arguments) and retrieve outputs (results) from this execution?

This is where triggers and bindings come in.

Triggers define how a function is invoked and what associated data it will provide. A function must have exactly one trigger. Bindings declaratively define how a resource is connected to the function. The resource or binding can be of type input, output, or both. Bindings are optional, and a function can have multiple input and output bindings.

Azure Functions comes with a number of supported bindings that can be used to integrate relevant services to power a specific scenario. For instance:

- HTTP Trigger: invokes the function in response to an HTTP request. Use this to implement serverless APIs for your application.

- Event Grid Trigger: invokes the function on receiving events from Event Grid. Use this to process events reactively, and potentially publish responses back to custom Event Grid topics.

- SignalR Service Trigger: invokes the function in response to messages from Azure SignalR Service, allowing your application to take actions with real-time contexts.

Triggers and bindings help you abstract your function's interfaces to the other components it interacts with, eliminating hardcoded integrations. They are configured differently based on the programming language you use. For example, JavaScript functions are configured in a function.json file. Here's an example of what that looks like:

{
  "disabled": false,
  "bindings": [
    // ... bindings here
    {
      "type": "bindingType",
      "direction": "in",
      "name": "myParamName",
      // ... more depending on binding
    }
  ]
}

The key thing to remember is that triggers and bindings have a direction property: triggers are always "in", input bindings are "in", and output bindings are "out". Some bindings also support a special "inout" direction.
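These direction rules, together with the earlier rule that a function has exactly one trigger, are easy to check mechanically. The helper below is a hypothetical sketch (not part of any Azure tooling) that inspects a parsed function.json object. It assumes trigger types can be recognized by their "Trigger" suffix (as in httpTrigger, queueTrigger, blobTrigger), which holds for the built-in bindings mentioned in this post but is a simplifying assumption.

```javascript
// Hypothetical validator for a parsed function.json object.
// Assumption: trigger binding types end in "Trigger" (httpTrigger, queueTrigger, ...).
function validateBindings(functionJson) {
  const errors = [];
  const bindings = functionJson.bindings || [];
  const isTrigger = (b) => /Trigger$/.test(b.type);

  // Rule 1: exactly one trigger per function.
  const triggerCount = bindings.filter(isTrigger).length;
  if (triggerCount !== 1) {
    errors.push(`expected exactly one trigger, found ${triggerCount}`);
  }

  for (const b of bindings) {
    if (isTrigger(b)) {
      // Rule 2: triggers are always "in".
      if (b.direction !== "in") {
        errors.push(`trigger "${b.name}" must have direction "in"`);
      }
    } else if (!["in", "out", "inout"].includes(b.direction)) {
      // Rule 3: other bindings are "in", "out", or (for some types) "inout".
      errors.push(`binding "${b.name}" has invalid direction "${b.direction}"`);
    }
  }
  return errors; // an empty array means the configuration passed these checks
}
```

For instance, the Blob Storage configuration in the next example passes with no errors, while a bindings list with no trigger, or with a trigger whose direction is "out", produces one error each.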

The documentation has code examples for bindings to popular Azure services. Here's an example of the trigger and binding configuration for a Blob Storage use case, combining a queue trigger with blob input and output bindings:

// function.json configuration
{
  "bindings": [
    {
      "queueName": "myqueue-items",
      "connection": "MyStorageConnectionAppSetting",
      "name": "myQueueItem",
      "type": "queueTrigger",
      "direction": "in"
    },
    {
      "name": "myInputBlob",
      "type": "blob",
      "path": "samples-workitems/{queueTrigger}",
      "connection": "MyStorageConnectionAppSetting",
      "direction": "in"
    },
    {
      "name": "myOutputBlob",
      "type": "blob",
      "path": "samples-workitems/{queueTrigger}-Copy",
      "connection": "MyStorageConnectionAppSetting",
      "direction": "out"
    }
  ],
  "disabled": false
}

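The code below shows the function implementation. In this scenario, the function is triggered by a queue message whose payload is a blob name. The {queueTrigger} expression in both blob paths resolves to that name, so the input binding reads samples-workitems/<name>, and the function copies that data to the output binding, which writes it to samples-workitems/<name>-Copy.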

// function implementation
module.exports = async function (context) {
  context.log('Node.js Queue trigger function processed', context.bindings.myQueueItem);
  // Copy the input blob's contents to the output blob binding.
  context.bindings.myOutputBlob = context.bindings.myInputBlob;
};
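Because bindings surface as plain properties on context.bindings, the function above can be exercised without any storage account. The sketch below repeats the implementation under the name copyBlob and drives it with a hand-built mock context. The helper invokeWithBindings is hypothetical, and the mock's shape (a bindings object plus a log function) mirrors only what this function touches, not the full runtime context.

```javascript
// The queue-triggered copy function from above, assigned to a name for reuse.
const copyBlob = async function (context) {
  context.log("Node.js Queue trigger function processed", context.bindings.myQueueItem);
  context.bindings.myOutputBlob = context.bindings.myInputBlob;
};

// Hypothetical test helper: builds a minimal mock context from input binding
// values, runs the function, and returns what it wrote to context.bindings.
async function invokeWithBindings(fn, inputBindings) {
  const logs = [];
  const context = {
    bindings: { ...inputBindings },
    log: (...args) => logs.push(args.join(" ")),
  };
  await fn(context);
  return { bindings: context.bindings, logs };
}

module.exports = { copyBlob, invokeWithBindings };
```

In the real runtime, the host resolves {queueTrigger} to the message text before binding, so for a message "report.txt" the myInputBlob value would be the contents of samples-workitems/report.txt; the mock simply supplies that value directly.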

What if we have a more complex scenario that requires bindings for non-supported resources?

You can also create custom bindings if necessary. We don't have time to dive into the details here, but definitely check out the documentation.

3. Durable Functions

This sounds great, right? But now let's talk about one challenge for Azure Functions. In the use cases so far, the functions are stateless: they take inputs at runtime if necessary and return output results if required. But they are otherwise self-contained, which is great for scalability!

But what if I needed to build more complex workflows that store and transfer state, and complete operations reliably? Durable Functions is an extension of Azure Functions that makes stateful workflows possible.

How can I create workflows that coordinate functions?

Durable Functions uses orchestrator functions to coordinate the execution of other functions (such as activity functions) within a given function app. Orchestrations are durable and reliable: their progress is checkpointed, so they can resume where they left off. Later in this post, we'll talk briefly about some application patterns that showcase popular orchestration scenarios.

How do I persist and manage state across workflows?

Entity functions provide explicit state management for Durable Functions, defining operations to read and write the state of durable entities. They are invoked through a special entity trigger. Entity functions are currently available only for a subset of programming languages, so check whether they are supported for your language of choice.
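For a feel of the programming model, here is a counter entity in the shape used by the durable-functions npm package, where an entity reads its state with context.df.getState, writes it with context.df.setState, and dispatches on context.df.operationName. To keep the snippet runnable without that package, the entity body is a plain function, and createMockEntity is a hypothetical in-memory stand-in for the runtime; in a real app you would export df.entity(counterEntity) instead.

```javascript
// Entity body for a hypothetical "Counter" entity. In an app it would be
// registered as: module.exports = df.entity(counterEntity);
// (with const df = require("durable-functions")).
function counterEntity(context) {
  const current = context.df.getState(() => 0); // initial state is 0
  switch (context.df.operationName) {
    case "add":
      context.df.setState(current + context.df.getInput());
      break;
    case "reset":
      context.df.setState(0);
      break;
    case "get":
      context.df.return(current);
      break;
  }
}

// Hypothetical stand-in for the runtime: keeps state in memory and applies
// one operation at a time, as entity operations are processed serially.
function createMockEntity(entityFn) {
  let state; // undefined until first write, so getState's initializer applies
  return function signal(operationName, input) {
    let result;
    entityFn({
      df: {
        operationName,
        getInput: () => input,
        getState: (init) => (state === undefined ? init() : state),
        setState: (value) => { state = value; },
        return: (value) => { result = value; },
      },
    });
    return result;
  };
}

module.exports = { counterEntity, createMockEntity };
```

The entity never touches storage directly; it only reads and writes state through the context, which is what lets the runtime persist that state durably between operations.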

Durable Functions is a fascinating topic that deserves a separate, longer post. For now, let's look at some application patterns that showcase its value, starting with the simplest one: Function Chaining.

Here, we want to execute a sequence of named functions in a specific order. As shown in the snippet below, the orchestrator function invokes the given activity functions in the desired sequence, "chaining" each output into the next input to establish the workflow. Take note of the yield keyword: each yield creates a checkpoint, preserving the orchestrator's progress for reliable operation.

const df = require("durable-functions");

module.exports = df.orchestrator(function* (context) {
  try {
    const x = yield context.df.callActivity("F1");
    const y = yield context.df.callActivity("F2", x);
    const z = yield context.df.callActivity("F3", y);
    return yield context.df.callActivity("F4", z);
  } catch (error) {
    // Error handling or compensation goes here.
  }
});
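To see what the yield keyword does in a chain like this, the sketch below runs the same shape of generator with a tiny in-memory driver. This is a hypothetical simulation, not how the Durable Functions runtime works internally (the real runtime checkpoints to storage and replays the orchestrator from its history); the driver simply runs each requested activity and feeds the result back into the generator. The activity names F1 to F4 come from the snippet above, but their toy implementations and the runOrchestration helper are assumptions for illustration.

```javascript
// Toy activity implementations standing in for the F1..F4 activity functions.
const activities = {
  F1: async () => 1,
  F2: async (x) => x + 1,
  F3: async (y) => y * 10,
  F4: async (z) => `result: ${z}`,
};

// Same chaining shape as the orchestrator above, minus the df.orchestrator wrapper.
function* chain(context) {
  const x = yield context.df.callActivity("F1");
  const y = yield context.df.callActivity("F2", x);
  const z = yield context.df.callActivity("F3", y);
  return yield context.df.callActivity("F4", z);
}

// Hypothetical driver: each yield hands back a plain object describing the
// activity call; the driver runs it and resumes the generator with the result.
async function runOrchestration(orchestratorFn) {
  const context = {
    df: { callActivity: (name, input) => ({ name, input }) },
  };
  const gen = orchestratorFn(context);
  let step = gen.next();
  while (!step.done) {
    const { name, input } = step.value;
    const output = await activities[name](input);
    step = gen.next(output); // output becomes the value of the yield expression
  }
  return step.value;
}

module.exports = { chain, runOrchestration };
```

Running runOrchestration(chain) resolves to "result: 20" (1, then 2, then 20, then the formatted string). Because the orchestrator only yields descriptions of work and never performs it directly, a runtime is free to pause between yields, record each result, and later replay the generator to rebuild its local variables.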

Other application patterns for Durable Functions include:

- Fan-out/fan-in

- Async HTTP APIs

- Monitor

- Human interaction

- Aggregator (stateful entities)

There's a lot more to explore, but we won't have time for that today. Definitely check the documentation, and take a minute to read the comparison with Azure Logic Apps to understand what each technology provides for serverless workflow automation.

4. Exercise

That was a lot of information to absorb! Thankfully, the documentation has plenty of examples that can help put these concepts in context. Here are a couple of exercises you can do to reinforce your understanding.

- Explore the supported bindings for Azure Functions.

- Look at code examples, think of usage scenarios.

5. What's Next?

The goal for today was to give you a quick tour of key terminology and concepts related to Azure Functions. Tomorrow, we dive into the developer experience, starting with core tools for local development and ending by deploying our first Functions app.

Want to do some prep work? Here are a few useful links:

- Azure Functions Quickstart

- Durable Functions Quickstart

- Azure Functions VS Code Extension

- Azure Functions Core Tools

6. Resources

- Developer Guide: Azure Functions

- Azure Functions: Tutorials and Samples

- Durable Functions: Tutorials and Samples

- Self-Paced Learning: MS Learn Modules

- Video Playlists: Azure Functions on YouTube