Lab 3.4 Content Safety with Azure AI Foundry before production

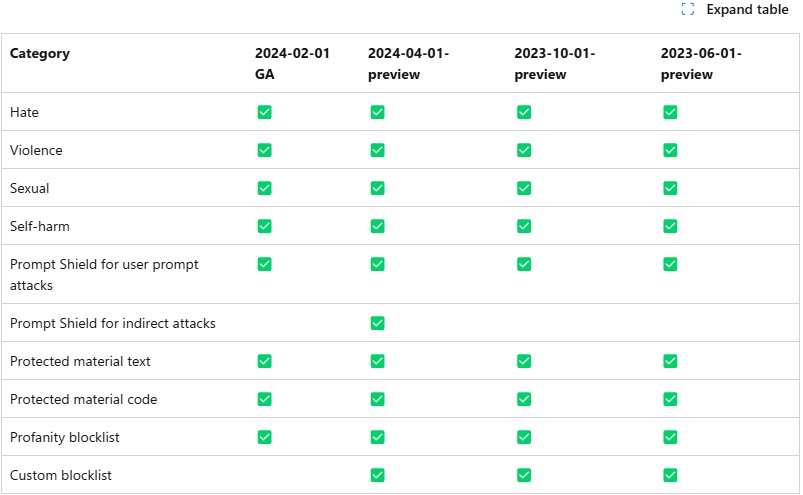

Annotation availability in each API version

Prerequisites

- An Azure subscription where you can create an AI hub and an AI project resource

- A registered fine-tuned model and deployed LLMs in Azure AI Foundry

Task

- I want to filter problematic prompts from end users

- I want to rewrite harmful keywords in the prompt before calling the LLMs

- I want to monitor the service with metrics for risk and cost management

TOC

- 1️⃣ Test your training dataset using Content Safety

- 2️⃣ Configure Content Safety to filter harmful content in your orchestration flows

- 3️⃣ Create a custom blocklist to manage inappropriate keywords in your prompts

- 4️⃣ Monitor the deployed application with metrics

Query rates

- Content Safety features have query rate limits expressed in requests per second (RPS) or requests per 10 seconds (RP10S). The following table lists the rate limits for each feature. Link: Content Safety query rates

| Pricing tier | Moderation APIs (text and image) | Prompt Shields | Protected material detection | Groundedness detection (preview) | Custom categories (rapid) (preview) | Custom categories (standard) (preview) | Multimodal |
|---|---|---|---|---|---|---|---|
| F0 | 5 RPS | 5 RPS | 5 RPS | N/A | 5 RPS | 5 RPS | 5 RPS |
| S0 | 1000 RP10S | 1000 RP10S | 1000 RP10S | 50 RPS | 1000 RP10S | 5 RPS | 10 RPS |
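
One way to stay under these limits from client code is to throttle calls before they are sent. The following is a minimal sketch in plain Python, not part of the Content Safety SDK: the `SlidingWindowLimiter` class and its parameters are hypothetical names introduced for illustration. It allows at most `max_calls` calls in any `window_seconds` interval, which maps directly onto both RPS (a 1-second window) and RP10S (a 10-second window).

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `max_calls` calls in any `window_seconds` interval.

    Hypothetical helper for illustration; not part of any Azure SDK.
    """

    def __init__(self, max_calls, window_seconds,
                 clock=time.monotonic, sleep=time.sleep):
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self._clock = clock
        self._sleep = sleep
        self._calls = deque()  # timestamps of calls still inside the window

    def acquire(self):
        """Block until one more call fits in the window, then record it."""
        while True:
            now = self._clock()
            # Drop timestamps that have left the window.
            while self._calls and now - self._calls[0] >= self.window_seconds:
                self._calls.popleft()
            if len(self._calls) < self.max_calls:
                self._calls.append(now)
                return
            # Wait until the oldest recorded call leaves the window.
            self._sleep(self.window_seconds - (now - self._calls[0]))


# Limits copied from the table above (text and image moderation APIs).
f0_limiter = SlidingWindowLimiter(max_calls=5, window_seconds=1)      # F0: 5 RPS
s0_limiter = SlidingWindowLimiter(max_calls=1000, window_seconds=10)  # S0: 1000 RP10S
```

Call `f0_limiter.acquire()` (or `s0_limiter.acquire()`) immediately before each request. A client-side limiter like this only reduces the chance of throttling; the service still enforces its own limits, so handling throttled responses remains necessary.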

Walkthrough Jupyter Notebook

- Let’s create and run Content Safety in a Jupyter notebook using the Python SDK. You will learn how to filter harmful content: contentsafety_with_code_en.ipynb
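
As a rough preview of what a text check with the Python SDK can look like, here is a minimal sketch that assumes the `azure-ai-contentsafety` package is installed. It is not taken from the notebook. The environment variable names `CONTENT_SAFETY_ENDPOINT` and `CONTENT_SAFETY_KEY`, the helper names, and the blocking threshold of 2 are assumptions chosen for illustration; pick a threshold that matches your own risk tolerance.

```python
import os


def max_severity(categories_analysis):
    """Return the highest severity among the analysed categories (0 if none)."""
    return max((item["severity"] or 0 for item in categories_analysis), default=0)


def should_block(categories_analysis, threshold=2):
    """Block when any category reaches the (assumed) severity threshold."""
    return max_severity(categories_analysis) >= threshold


def analyze_prompt(text):
    """Send `text` to Content Safety and return category/severity pairs."""
    # Imported lazily so the helpers above work without the SDK installed.
    from azure.ai.contentsafety import ContentSafetyClient
    from azure.ai.contentsafety.models import AnalyzeTextOptions
    from azure.core.credentials import AzureKeyCredential

    # Assumed environment variable names; adjust to your own configuration.
    client = ContentSafetyClient(
        os.environ["CONTENT_SAFETY_ENDPOINT"],
        AzureKeyCredential(os.environ["CONTENT_SAFETY_KEY"]),
    )
    response = client.analyze_text(AnalyzeTextOptions(text=text))
    return [
        {"category": item.category, "severity": item.severity}
        for item in response.categories_analysis
    ]
```

With credentials in place, `should_block(analyze_prompt(user_prompt))` returns `True` when the prompt should be rejected before it reaches the LLM. Keeping the decision logic in `should_block` separate from the network call makes the threshold easy to unit test.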