Lab 0. Requirements (Skip if you have already set up)

1. Requirements

Checklist

Customers who participate in this hands-on lab need to go through the checklist with their Microsoft sales representative. The sales representative ultimately completes the checklist, but we ask for your cooperation so that the right scenario and the right people are involved.

Please create your Azure AI Document Intelligence resource in one of the following regions: East-US, West-US2, West-Europe. Otherwise, you may encounter a 404 error when trying to access the resource. (Source)

Hands-on Requirements

- Ensure you have access to Azure OpenAI Service.

- Set up your Azure ML workspace and get your <WORKSPACE_NAME>, <RESOURCE_GROUP> and <SUBSCRIPTION_ID>.

- Create a project in Azure AI Foundry.

- For LLM training, we recommend a single NVIDIA A100 GPU (Standard_NC24ads_A100_v4). Opt for low-priority VMs if you are on a budget or do not have dedicated quota. We recommend requesting the quota in Azure ML.

- For LLM serving, we recommend two NVIDIA V100 GPUs (Standard_NC6s_v3). Please refer to the note on managed online endpoints under Cautions for why two GPUs are required instead of one for Azure ML serving. We recommend requesting the quota in Azure ML.

- For LLMOps, grant the Storage File Data Privileged Contributor and Storage Blob Data Contributor roles on the Azure AI Foundry storage account to the user, group, service principal, or managed identity that will access the data.
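The role grant in the last requirement can be scripted. The sketch below only builds the `az role assignment create` command lines for the two roles named above and does not run them. The principal ID and the storage account scope are hypothetical placeholders that you would replace with your own values.

```python
import shlex

# Built-in role names taken from the LLMOps requirement above.
ROLES = [
    "Storage File Data Privileged Contributor",
    "Storage Blob Data Contributor",
]


def build_role_assignment_commands(principal_id: str, storage_scope: str) -> list[list[str]]:
    """Return one `az role assignment create` argv list per required role."""
    return [
        [
            "az", "role", "assignment", "create",
            "--assignee", principal_id,
            "--role", role,
            "--scope", storage_scope,
        ]
        for role in ROLES
    ]


if __name__ == "__main__":
    # Hypothetical placeholders: substitute your own principal and storage account.
    scope = (
        "/subscriptions/<SUBSCRIPTION_ID>/resourceGroups/<RESOURCE_GROUP>"
        "/providers/Microsoft.Storage/storageAccounts/<STORAGE_ACCOUNT_NAME>"
    )
    for argv in build_role_assignment_commands("<PRINCIPAL_OBJECT_ID>", scope):
        # Print the commands for review instead of executing them.
        print(shlex.join(argv))
```

Printing the commands first lets you review the scope before anything is changed in your subscription.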

Deploying Azure resources with Azure Developer CLI (azd) and Azure CLI (az)

If you are looking for the easiest way to get started, this lab provides Bicep templates, and the steps below use them to provision the required Azure resources. If you do not have the CLIs installed yet, install them by following "What is the Azure Developer CLI?" and "Install the Azure CLI on Linux".

Log in to your Azure account:

azd auth login

For GitHub Codespaces users, if the previous command fails, try:

azd auth login --use-device-code

Then run the command below:

az ad signed-in-user show --query id --output tsv

This returns the object ID of the current user. Keep it at hand, because azd up asks for it as the value of the input parameter ‘currentUserObjectId’.

Run azd up. This provisions Azure resources including an Azure ML workspace, Azure OpenAI resources, Application Insights, and Azure Document Intelligence.

azd up

When you see the message “Enter a value for the ‘currentUserObjectId’ infrastructure parameter”, paste the output of the command az ad signed-in-user show --query id --output tsv.
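Before pasting, it can help to confirm that the copied value really is an object ID and not an error message or an empty line. The check below is a minimal sketch that relies on object IDs being GUIDs. The function name is hypothetical and not part of the lab repository.

```python
import uuid


def looks_like_object_id(value: str) -> bool:
    """Return True if value is a GUID in the canonical hyphenated form."""
    candidate = value.strip()
    try:
        parsed = uuid.UUID(candidate)
    except ValueError:
        return False
    # uuid.UUID also accepts braces and unhyphenated hex, so compare
    # against the canonical rendering to reject those variants.
    return str(parsed) == candidate.lower()
```

For example, `looks_like_object_id("not-a-guid")` returns `False`, so a failed `az` call whose error text was copied by mistake is caught before `azd up` consumes it.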

After you execute the azd up command, an .azure folder is generated under the 0_lab_preparation directory. Within the subfolders of the .azure folder, you can find the .env file required to access the resources created by the azd up command. Copy this .env file to the root directory of this workshop project, and replace the dummy KEY values with your actual keys.
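The copy step above can be sketched in a few lines of Python. This assumes only what the paragraph states: the generated .env file sits somewhere below 0_lab_preparation/.azure. The helper name is hypothetical, and it overwrites an existing .env in the target directory.

```python
import shutil
from pathlib import Path


def copy_generated_env(lab_dir: Path, project_root: Path) -> Path:
    """Copy the first .env found under <lab_dir>/.azure to <project_root>/.env."""
    azure_dir = lab_dir / ".azure"
    # The exact subfolder name depends on your azd environment, so search recursively.
    candidates = sorted(azure_dir.rglob(".env"))
    if not candidates:
        raise FileNotFoundError(f"No .env file found under {azure_dir}")
    target = project_root / ".env"
    shutil.copyfile(candidates[0], target)  # overwrites an existing .env
    return target
```

From the workshop root, this could be called as `copy_generated_env(Path("0_lab_preparation"), Path("."))`. The dummy key values still need to be replaced by hand afterwards.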

Important: Beware that the resources created by this command will incur immediate costs, primarily from the AI Search resource. These resources may accrue costs even if you interrupt the command before it is fully executed. You can run azd down or delete the resources manually to avoid unnecessary spending.

You will be prompted to select two locations, one for the majority of resources and one for the OpenAI resource, which is currently a short list. That location list is based on the OpenAI model availability table and may become outdated as availability changes.

After the application has been successfully deployed you will see a URL printed to the console. Click that URL to interact with the application in your browser. It will look like the following:

Cautions

- Request a Standard_NC6s_v3 GPU quota increase (12 cores) for serving.

- If configuring in a private environment, set up a private network or VPN to access services.

- Low-priority VM availability may vary by region.

- Set up connections for any blob storage used to store data and models within the Azure ML workspace.

- Request a quota increase if necessary for VMs or GPUs.

- Network config in Azure ML workspace cannot be changed post-setup; create a new workspace if required.

- Ensure compute instances are in the same region as the Azure ML workspace; otherwise, set up a VPN or private link.

- If using Azure AI Foundry compute instances, note that training jobs cannot be executed on them.

For managed online endpoints, Azure ML reserves 20% of the quota for the deployment. [1] If you request a given number of instances of a VM SKU in a deployment, you must have quota available for ceil(1.2 × number of instances requested for deployment) × number of cores for the VM SKU to avoid getting an error. For example, if you request 1 instance of a Standard_NC6s_v3 VM (which comes with six cores) in a deployment, you need quota for 12 cores (ceil(1.2 × 1 instance) = 2, and 2 × 6 cores = 12).
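The quota rule above is easy to check for other instance counts. The function below is a small sketch of the stated formula. Its name is hypothetical, and it uses integer arithmetic for the ceiling so that floating-point rounding of 1.2 cannot change the result.

```python
def required_quota_cores(instances: int, cores_per_instance: int) -> int:
    """Cores of quota needed: ceil(1.2 * instances) * cores per instance."""
    # ceil(1.2 * n) computed exactly as ceil(12 * n / 10) with integers.
    reserved_instances = (12 * instances + 9) // 10
    return reserved_instances * cores_per_instance


# The example from the text: 1 instance of Standard_NC6s_v3 (6 cores).
print(required_quota_cores(1, 6))  # 12
```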

Please ensure these points are followed to avoid common issues during the workshop.

2. Setup Compute Instance and the configuration file

- 1️⃣ Prepare your compute resource

- 2️⃣ Clone the repository

- 3️⃣ Create .env file and Add Azure OpenAI and Azure Document Intelligence details

- 4️⃣ Setup config.yml

- 🚀 Get started to validate the setup

1️⃣ Prepare your compute resource

-

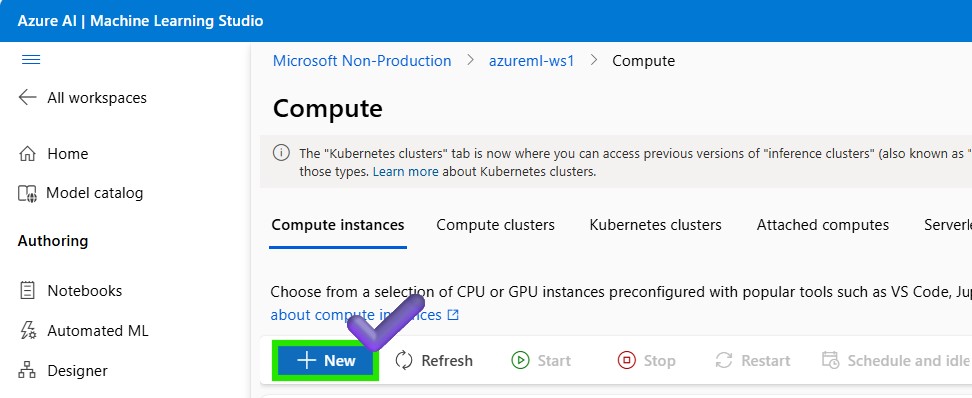

Create your compute instance in Azure ML Studio. Navigate to the Azure ML Studio > Compute > Compute instances and create a new compute instance.

-

For code development, we recommend Standard_E2as_v4 (AMD 2 cores, 16GB RAM, 32GB storage) or **Standard_DS11_v2 (Intel 2 cores, 14GB RAM, 28GB storage, No GPUs) Click on the

Review+Createbutton to create the compute instance. -

Once the compute instance is created and change the status as Running, click on the

JupyterorVS Code(Desktop)to open the Jupyter notebook and terminal.

2️⃣ Clone the repository and install the required packages

- Go to the terminal in your environment and clone the repository.

git clone https://github.com/Azure/slm-innovator-lab.git

- Install all Python modules and packages listed in the requirements.txt file using the below command.

cd slm-innovator-lab # Change to the directory where the repository is cloned

ENVIRONMENT=azureml_py310_sdkv2

conda activate "$ENVIRONMENT"

pip install -r requirements.txt

- If you want to use the Unstructured toolkit for processing complex PDFs, be sure to execute startup_unstructured.sh and include it in your instance startup script.

./startup_unstructured.sh
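After the install step above, you may want to confirm that the packages landed in the active conda environment. The sketch below parses requirements.txt content and reports names that `importlib.metadata` cannot find. It handles only simple requirement lines (a name followed by optional version specifiers). Both function names are hypothetical.

```python
import re
from importlib import metadata


def requirement_names(requirements_text: str) -> list[str]:
    """Extract distribution names from requirements.txt content (simple lines only)."""
    names = []
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line or line.startswith("-"):
            continue  # skip blanks, comments, and pip options such as -r / -e
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match:
            names.append(match.group(0))
    return names


def missing_packages(names: list[str]) -> list[str]:
    """Return the names that are not installed in the active environment."""
    missing = []
    for name in names:
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing
```

Pass the text of requirements.txt to `requirement_names` and the result to `missing_packages`. An empty list suggests the install completed in the environment you activated.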

3️⃣ Create .env file and Add Azure OpenAI and Azure Document Intelligence details

- Please do not forget to modify the .env file to match your account. Rename .env.sample to .env, or copy it and use the copy.

# .env

# this is a sample for keys used in this code repo.

# Please rename it to .env before you can use it

AZURE_OPENAI_ENDPOINT=xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

AZURE_OPENAI_API_KEY=xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

# https://learn.microsoft.com/en-us/azure/ai-services/openai/api-version-deprecation

AZURE_OPENAI_API_VERSION=2024-08-01-preview

AZURE_OPENAI_DEPLOYMENT_NAME=gpt-4o-mini

AZURE_DOC_INTELLIGENCE_ENDPOINT=xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

AZURE_DOC_INTELLIGENCE_KEY=xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

-

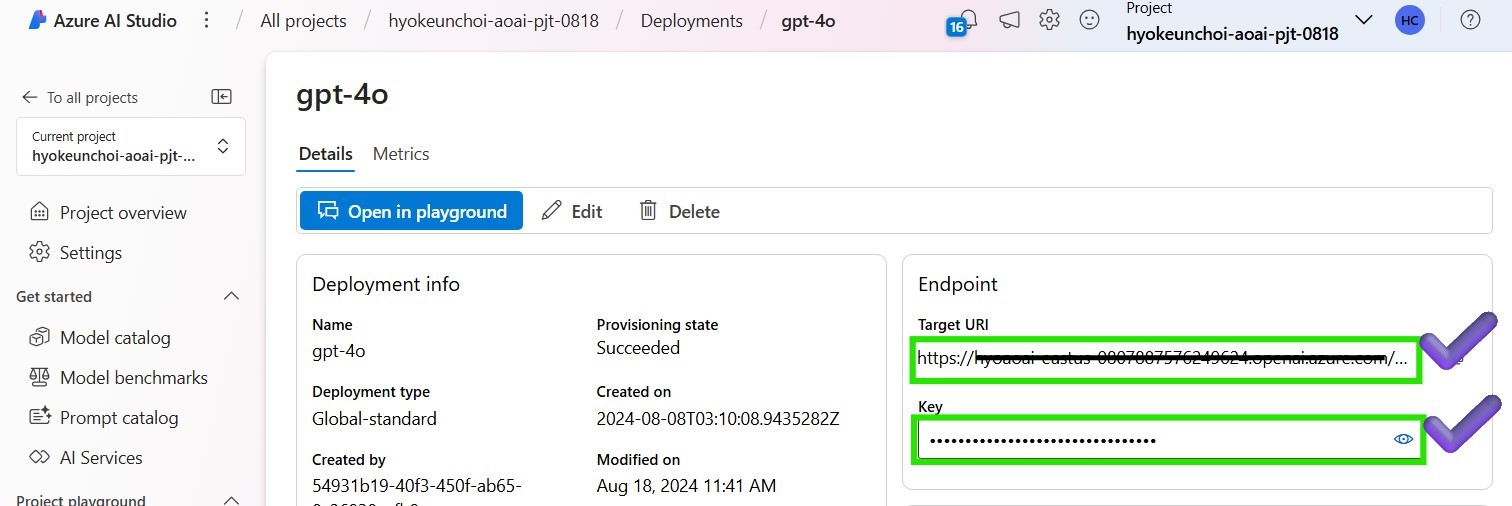

In order to match your Azure OpenAI authentication information in the

.envfile, please navigate to Azure AI Foundry > Deployments > your model deployment to get the Azure OpenAI endpoint and API key after deploying the Azure Open AI models. If you want to understand the LLM deployment process, please refer to the How to deploy Azure OpenAI models with AzureAI Foundryo.

-

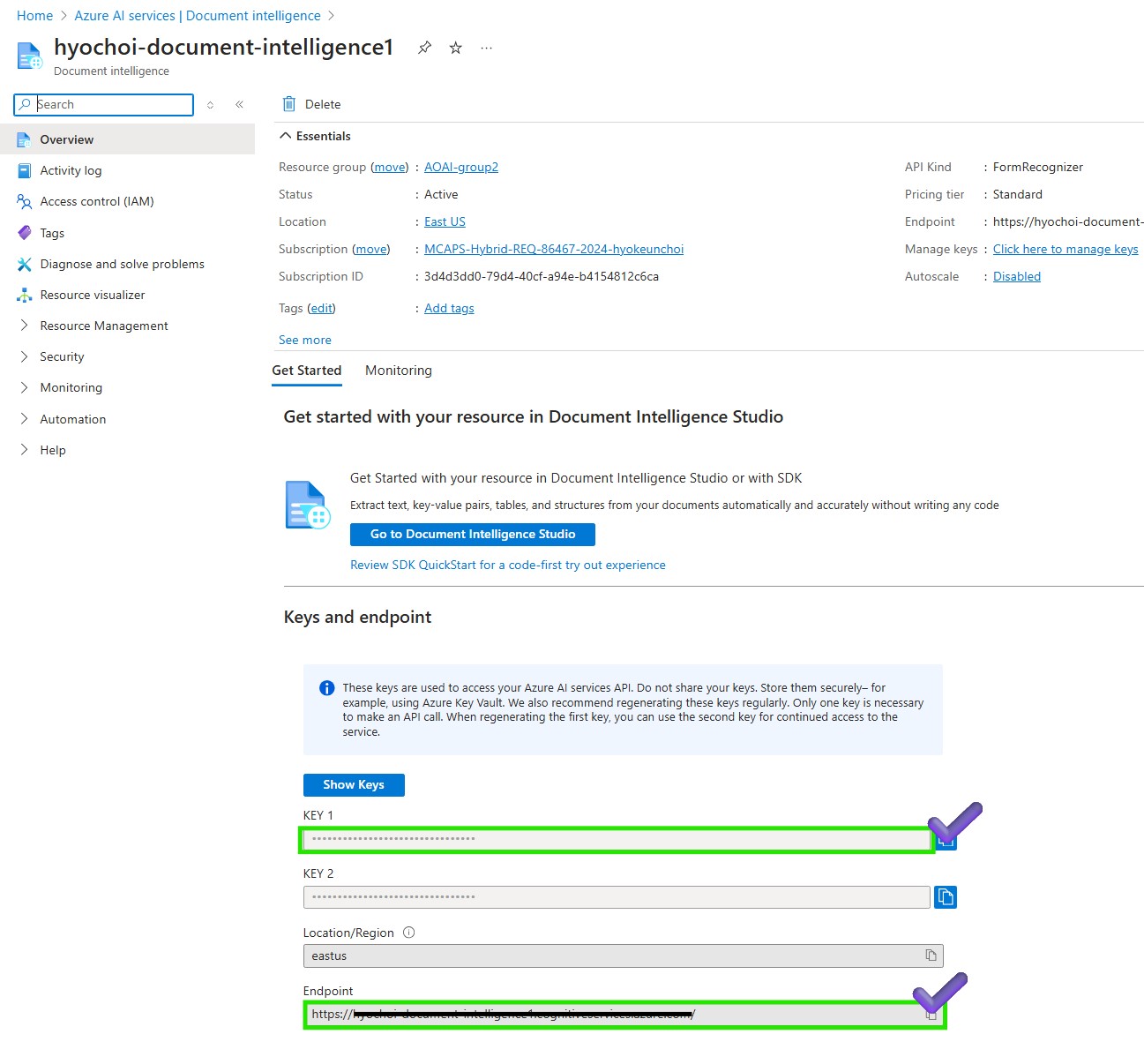

If you want to read and preprocess pdf files during this lab, you need to add the Azure Document Intelligence endpoint and API key to the

.envfile. In order to match your Azure Document Intelligence authentication. Please navigate to Azure AI services Document Intelligence > your model deployment to get the Azure Document Intelligence endpoint and API key after deploying the Azure Document Intelligence models.If you want to understand the Document Intelligence creation process, please refer to the Create a Document Intelligence resource.

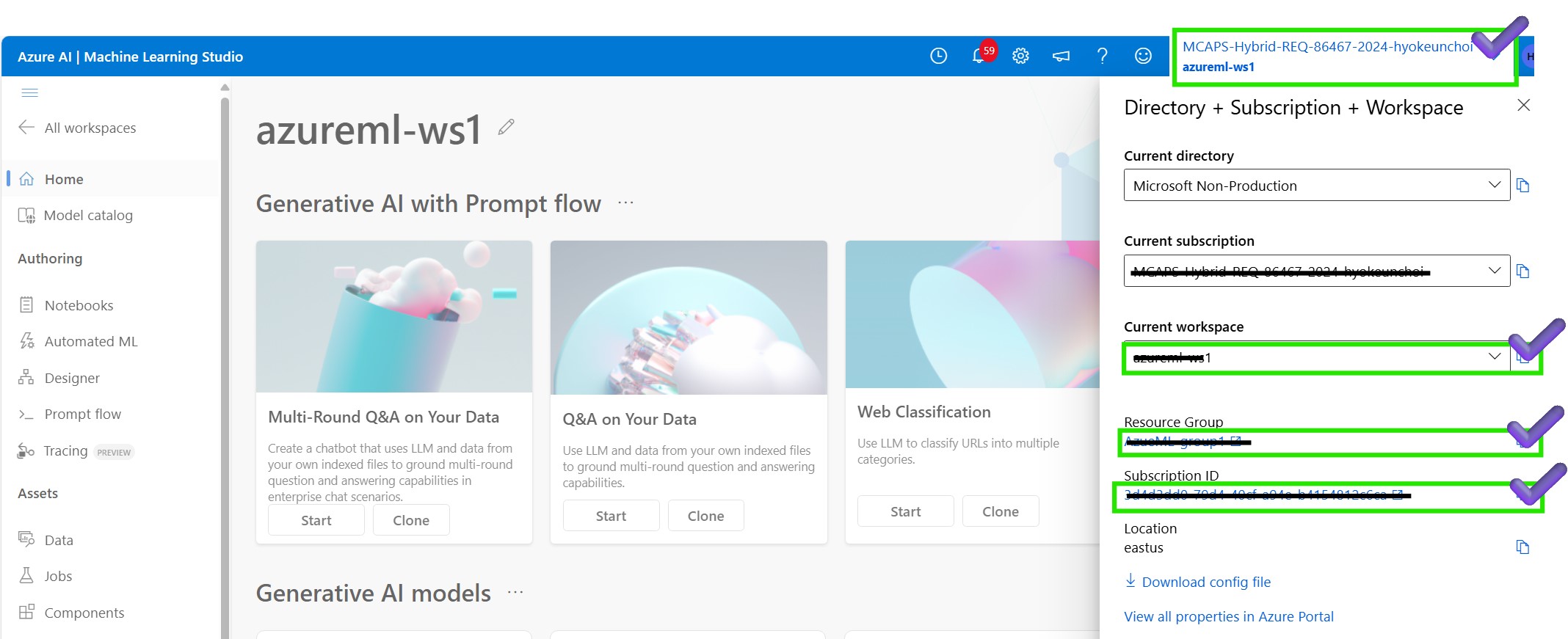
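Once the .env file is filled in, a quick check can catch values that were left as the `xxxx` placeholder from the sample. The sketch below parses the file with the standard library only. The function names are hypothetical, and the Document Intelligence keys matter only if you plan to process PDF files.

```python
# Keys listed in the .env sample above.
EXPECTED_KEYS = [
    "AZURE_OPENAI_ENDPOINT",
    "AZURE_OPENAI_API_KEY",
    "AZURE_OPENAI_API_VERSION",
    "AZURE_OPENAI_DEPLOYMENT_NAME",
    "AZURE_DOC_INTELLIGENCE_ENDPOINT",
    "AZURE_DOC_INTELLIGENCE_KEY",
]


def parse_env(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, ignoring blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def unset_keys(env: dict[str, str]) -> list[str]:
    """Return expected keys that are missing, empty, or still all-'x' placeholders."""
    problems = []
    for key in EXPECTED_KEYS:
        value = env.get(key, "")
        if not value or set(value.lower()) == {"x"}:
            problems.append(key)
    return problems
```

Calling `unset_keys(parse_env(text))` on the contents of your .env file returns the keys that still need attention.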

4️⃣ Setup config.yml

To get your subscription ID, resource group, and workspace name, go to Azure ML Studio and open your profile. Modify the 2_slm-fine-tuning-mlstudio/phi3/config.yml file to match your Azure subscription, resource group, workspace, and data name.

config:

  AZURE_SUBSCRIPTION_ID: "<YOUR-SUBSCRIPTION-ID>" # Please modify to your subscription

  AZURE_RESOURCE_GROUP: "<YOUR-RESOURCE-GROUP>" # Please modify to your Azure resource group

  AZURE_WORKSPACE: "<YOUR-AZURE-WORKSPACE>" # Please modify to your Azure workspace

  AZURE_DATA_NAME: "hf-ultrachat" # Please modify to your AzureML data name

  DATA_DIR: "./dataset"

  CLOUD_DIR: "./cloud"

  HF_MODEL_NAME_OR_PATH: "microsoft/Phi-3.5-mini-instruct"

  IS_DEBUG: true

  USE_LOWPRIORITY_VM: true

...
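A common slip is to leave one of the `<YOUR-...>` placeholders in config.yml. The sketch below scans the file text for them without needing a YAML parser. The function name is hypothetical, and it recognizes only the placeholder style shown in the sample above.

```python
import re

# Matches placeholders such as <YOUR-SUBSCRIPTION-ID> from the sample config.
PLACEHOLDER = re.compile(r"<YOUR-[A-Z-]+>")


def unfilled_config_keys(config_text: str) -> list[str]:
    """Return keys whose value still contains a <YOUR-...> placeholder."""
    keys = []
    for line in config_text.splitlines():
        content = line.split("#", 1)[0]  # drop trailing comments
        if ":" not in content:
            continue
        key, value = content.split(":", 1)
        if PLACEHOLDER.search(value):
            keys.append(key.strip())
    return keys
```

Run it on the text of 2_slm-fine-tuning-mlstudio/phi3/config.yml. An empty list means the three Azure identifiers have been replaced.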

🚀 Get started to validate the setup

Proceed by opening the Jupyter notebook, and follow the steps provided.

[1] This extra quota is reserved for system-initiated operations such as OS upgrades and VM recovery, and it won’t incur cost unless such operations run.