Lab 3.3.1 Evaluate your models using Prompt Flow (UI)

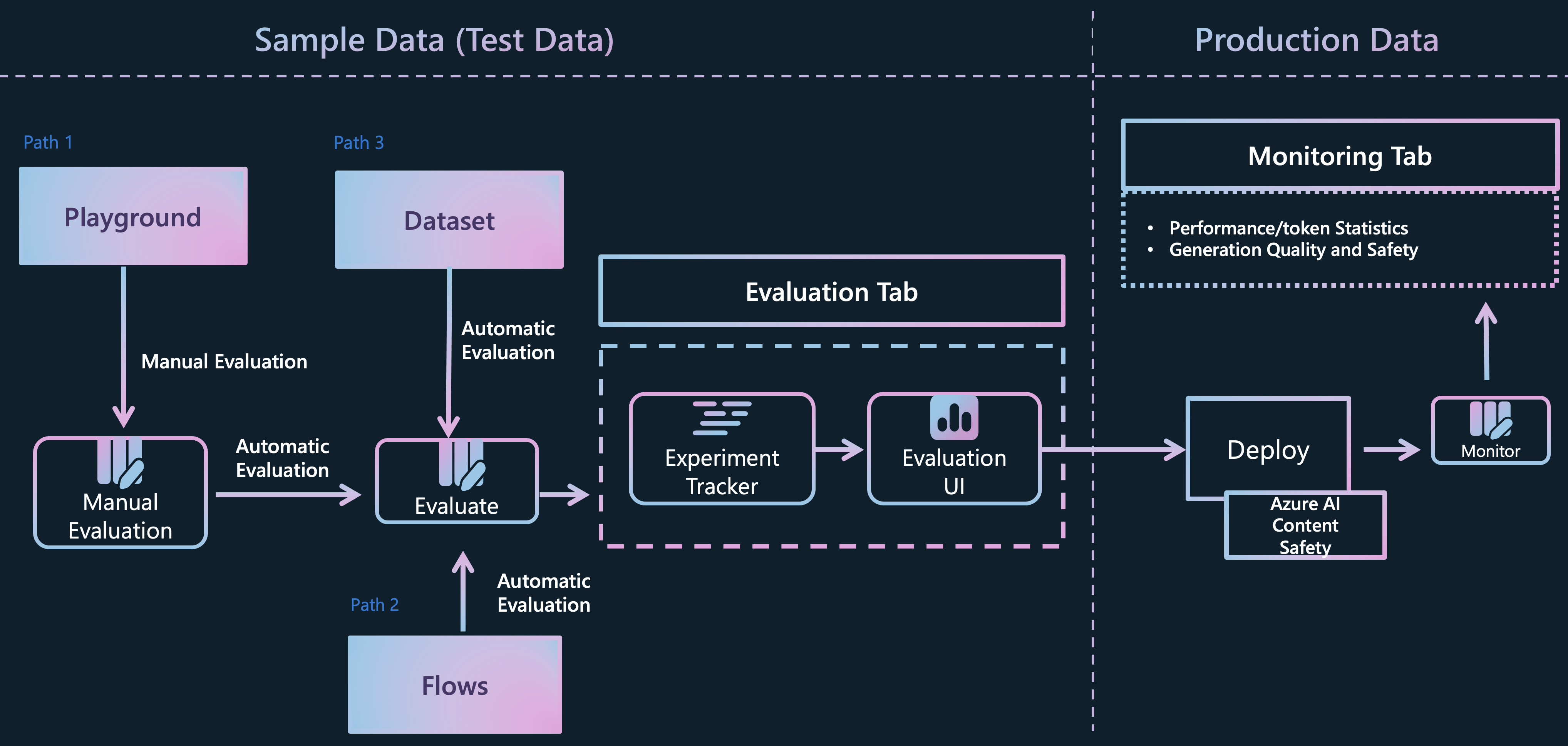

Evaluating and monitoring of generative AI applications

Prerequisites

- An Azure subscription where you can create an AI hub and an AI project resource

- A deployed gpt-4o model in Azure AI Foundry

Task

- I want to quantitatively verify how well the model and RAG answer questions

- I want to benchmark on bulk data before production to find bottlenecks and make improvements

TOC

- 1️⃣ Manual evaluations to review outputs of the selected model

- 2️⃣ Conduct A/B testing with your LLM variants

- 3️⃣ Create Automated Evaluation with variants

- 4️⃣ Create Custom Evaluation flow on Prompt flow

1️⃣ Manual evaluations to review outputs of the selected model

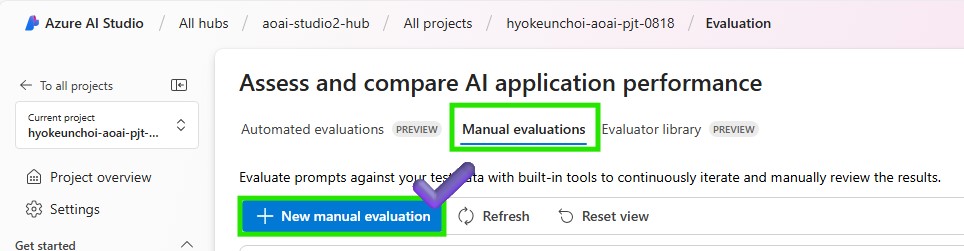

- Go to the Azure AI Foundry > Tools > Evaluation

-

Click on the “Manual Evaluation” tab to create an manual evaluation to assess and compare AI application performance.

- Select model you are going to test on the configurations and update the system message below.

You are a math assistant, and you are going to read the context which includes simple math questions and answer with numbers only. -

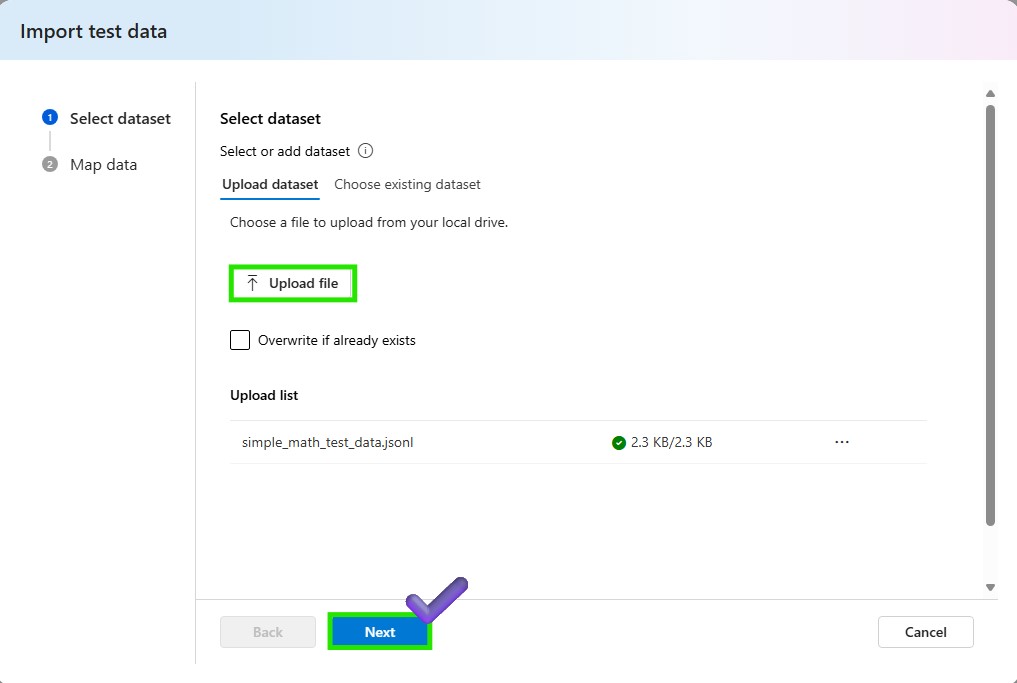

Click the import test data button to import the test data. You can add your data as well if you want to test the model with the context.

-

Select the dataset you want to test on the model.

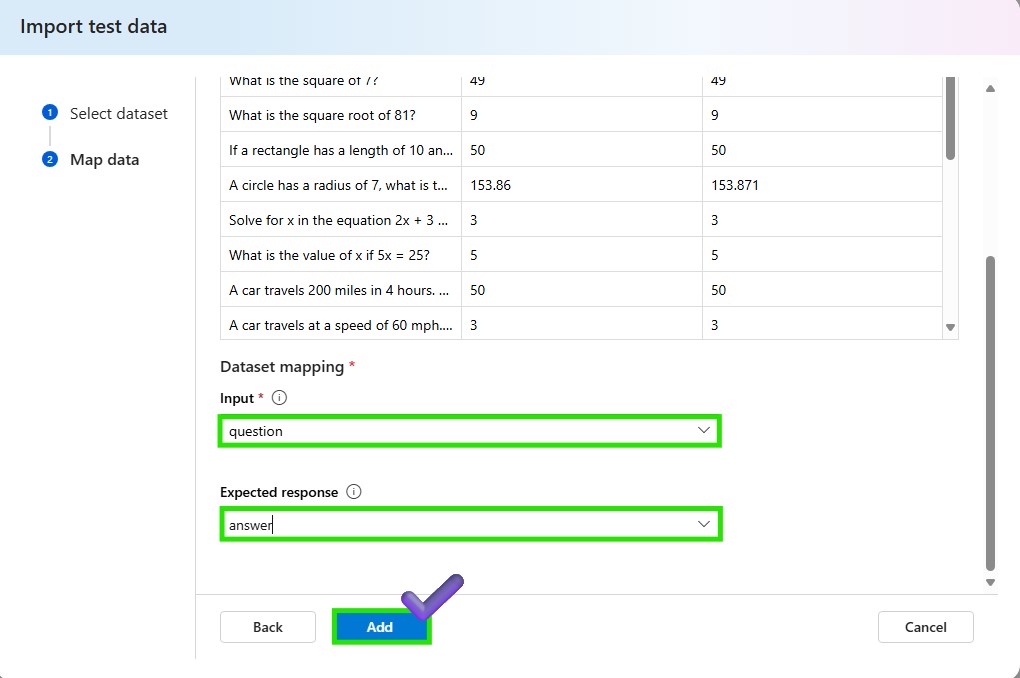

-

Map the test data. Select question as the input and answer as the output. Click the add button to import the test data.

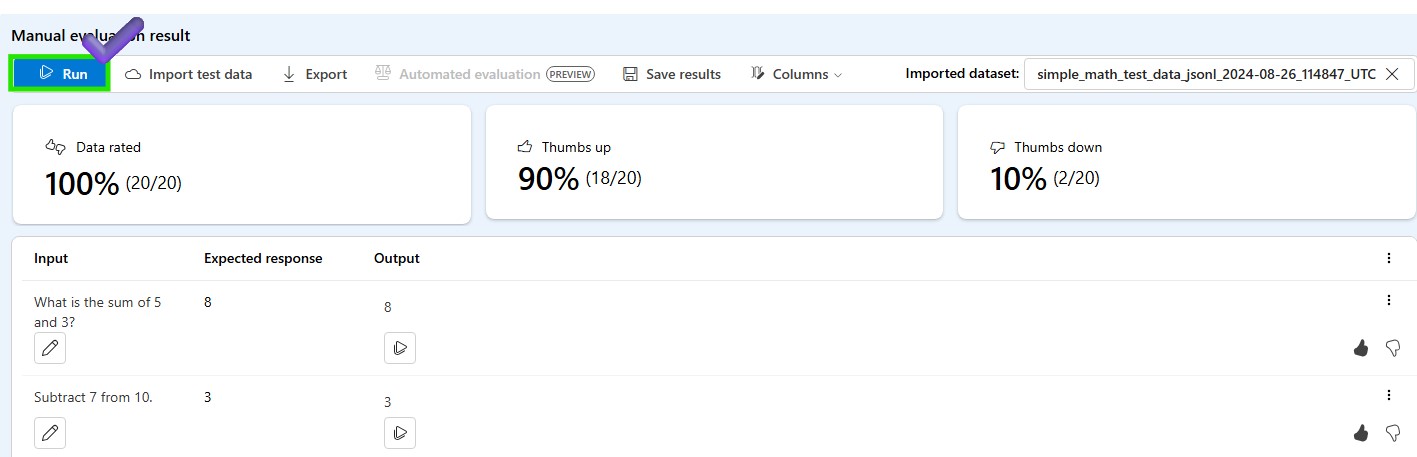

- Click the Run button to test the model with the test data. After the test is done, you can see and export the results, and you can also compare the results with the expected answers. Use thumbs up or down to evaluate the model’s performance. As this result is for the manual evaluation, you can handover the result dataset to automated evaluation to evaluate the model in bulk data.
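The comparison against expected answers described above can also be done offline on an exported result set. The following is a minimal sketch using only the Python standard library. The sample rows, the `outputs` list, and the helper names are hypothetical and not part of the lab's dataset; the only assumption taken from the steps above is that each row has a `question` and an `answer` column.

```python
import json

# Hypothetical test rows: each row has a question and the expected answer,
# matching the question/answer mapping used in the import step.
TEST_ROWS = [
    {"question": "What is 2 + 3?", "answer": "5"},
    {"question": "What is 10 / 4?", "answer": "2.5"},
    {"question": "What is 7 * 6?", "answer": "42"},
]


def to_jsonl(rows):
    """Serialize rows as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(row, ensure_ascii=False) for row in rows)


def is_match(expected, actual):
    """Compare numerically when both values parse as numbers, else as stripped text."""
    try:
        return float(expected) == float(actual)
    except ValueError:
        return expected.strip() == actual.strip()


def accuracy(rows, model_outputs):
    """Fraction of rows whose model output matches the expected answer."""
    hits = sum(is_match(row["answer"], out) for row, out in zip(rows, model_outputs))
    return hits / len(rows)


# Hypothetical model outputs, for example copied from an exported result file.
outputs = ["5", "2.50", "41"]

print(to_jsonl(TEST_ROWS))
print(f"accuracy = {accuracy(TEST_ROWS, outputs):.2f}")
```

Numeric comparison is used here because the system message asks the model to answer with numbers only, so `2.5` and `2.50` should count as the same answer.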

2️⃣ Conduct A/B testing with your LLM variants

Create a new chat flow with variants

-

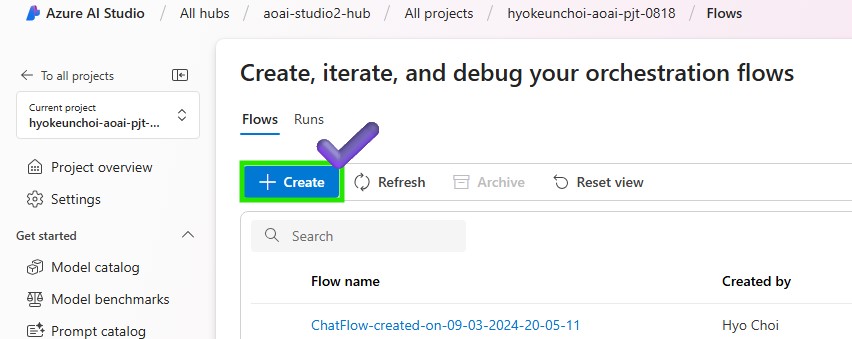

Azure AI Foundry > Prompt flow > Click +Create to create a new flow

-

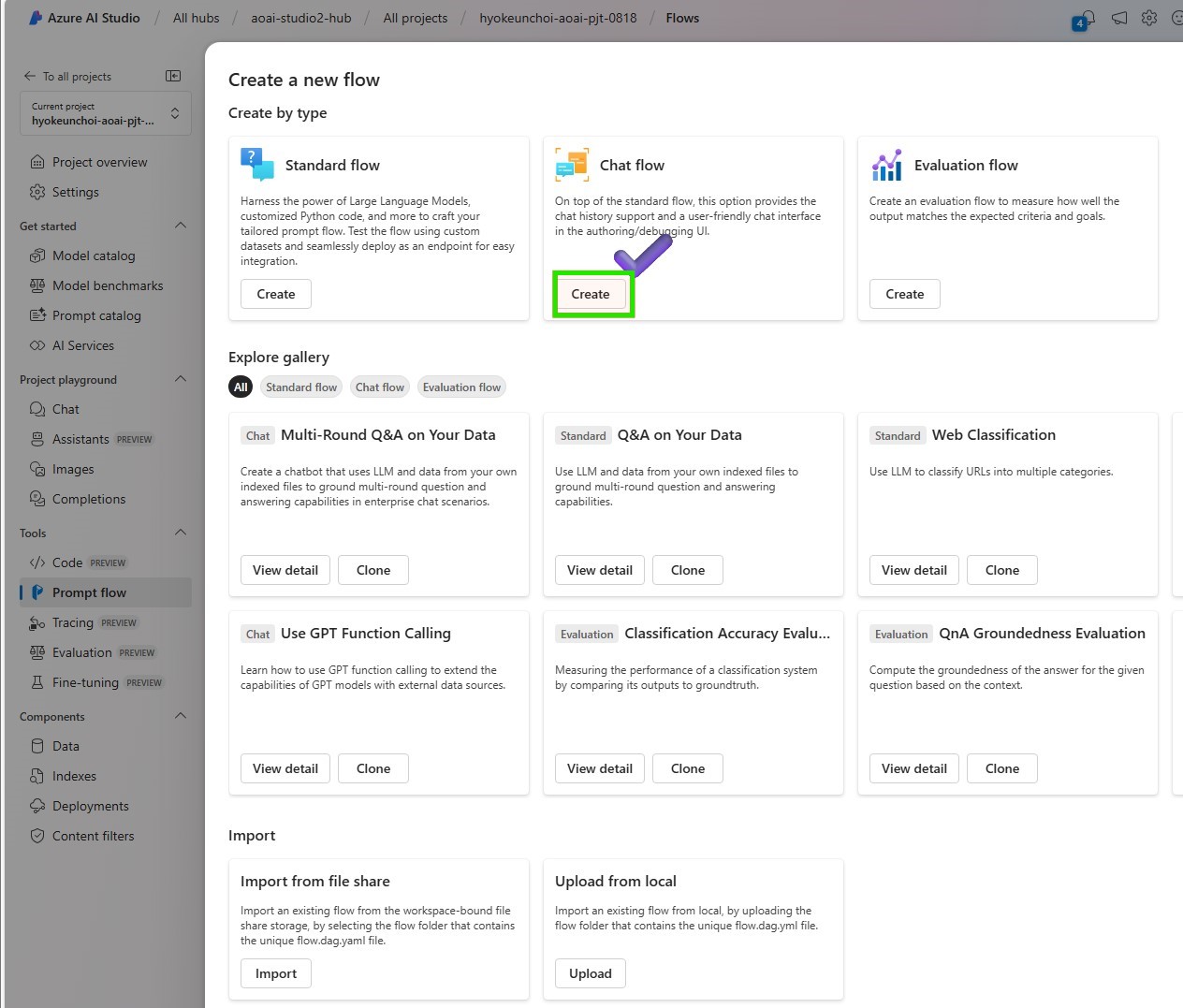

In order to get a user-friendly chat interface, select Chat flow

-

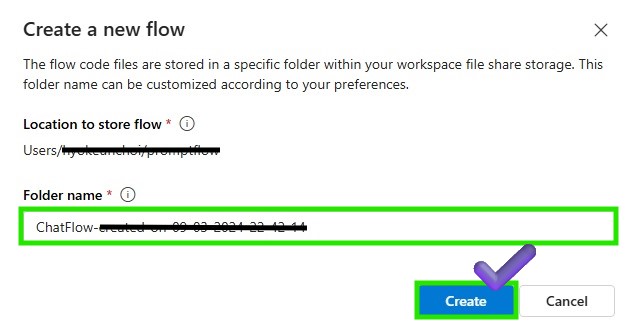

Put your folder name to store your Promptflow files and click the Create button

-

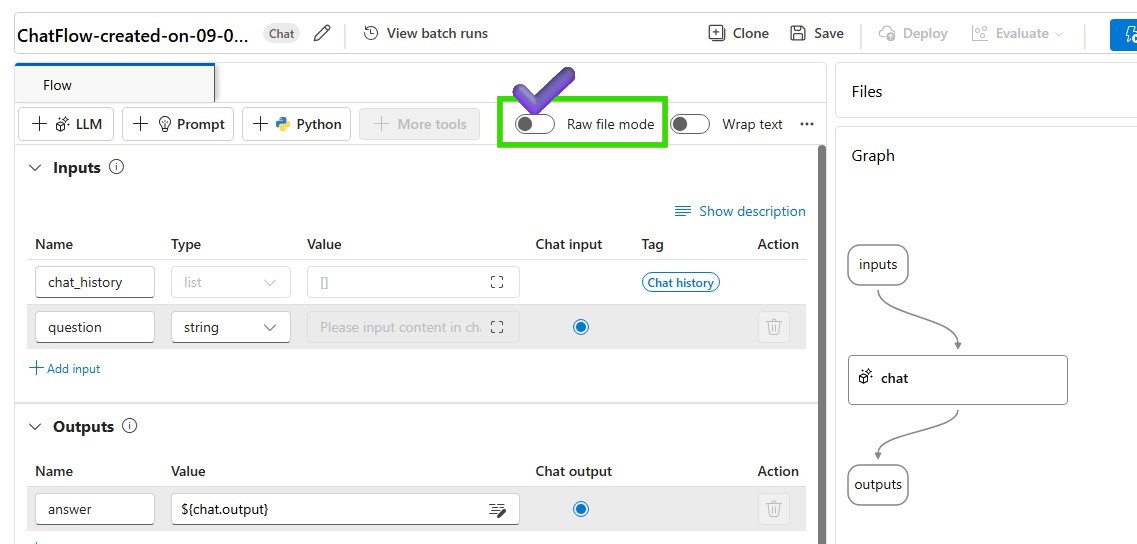

Change as raw file model to modify your basic chat flow

- Modify flow.dag.yaml attach the source code below.

id: chat_variant_flow name: Chat Variant Flow inputs: question: type: string is_chat_input: true context: type: string default: > The Alpine Explorer Tent boasts a detachable divider for privacy, numerous mesh windows and adjustable vents for ventilation, and a waterproof design. It even has a built-in gear loft for storing your outdoor essentials. In short, it's a blend of privacy, comfort, and convenience, making it your second home in the heart of nature! is_chat_input: false firstName: type: string default: "Jake" is_chat_input: false outputs: answer: type: string reference: ${chat_variants.output} is_chat_output: true nodes: - name: chat_variants type: llm source: type: code path: chat_variants.jinja2 inputs: deployment_name: gpt-4o temperature: 0.7 top_p: 1 max_tokens: 512 context: ${inputs.context} firstName: ${inputs.firstName} question: ${inputs.question} api: chat provider: AzureOpenAI connection: '' environment: python_requirements_txt: requirements.txt -

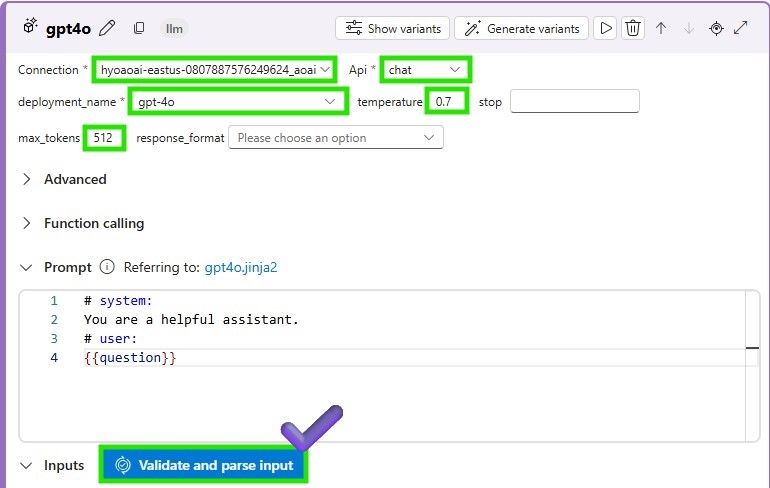

Change the Raw file mode again and Add the connection parameters of the LLM Node to call the deployed LLM model and Click Validate and parse input. Check inputs to the LLM Node in place.

- Paste the prompt below into your chat_variants node to send requests to the deployed model.

  ```jinja
  system:
  You are an AI assistant who helps people find information. As the assistant,
  you answer questions briefly, succinctly, and in a personable manner using
  markdown and even add some personal flair with appropriate emojis.

  Add a witty joke that begins with "By the way,".
  Don't mention the customer's name in the joke portion of your answer.
  The joke should be related to the specific question asked.
  For example, if the question is about tents, the joke should be specifically related to tents.

  Respond in your language with a JSON object like this.
  {
    "answer":
    "joke":
  }

  # Customer
  You are helping {{firstName}} to find answers to their questions.
  Use their name to address them in your responses.

  # Context
  Use the following context to provide a more personalized response to {{firstName}}:
  {{context}}

  user:
  {{question}}
  ```
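Before running the flow, it can help to confirm that the prompt and the node inputs agree, which is the kind of consistency the "Check inputs to the LLM Node" step is about. The sketch below is an illustration only: it assumes the prompt references the three node inputs from flow.dag.yaml (`context`, `firstName`, `question`) as `{{name}}` placeholders, and it uses a small regular expression as a stand-in for Jinja rather than the real template engine. The shortened `PROMPT` string and all helper names are hypothetical.

```python
import re

# Prompt-related inputs wired to the chat_variants node in flow.dag.yaml.
NODE_INPUTS = {"context", "firstName", "question"}

# Shortened stand-in for chat_variants.jinja2 (placeholder positions are assumed).
PROMPT = """system:
You are helping {{firstName}} to find answers to their questions.

# Context
Use the following context to provide a more personalized response to {{firstName}}:
{{context}}

user:
{{question}}
"""

# Matches simple {{ name }} placeholders only; loops and filters are not handled.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def template_variables(template):
    """Return the set of placeholder names used in the template."""
    return set(PLACEHOLDER.findall(template))


def check_inputs(template, node_inputs):
    """Report placeholders without a node input and node inputs the prompt never uses."""
    used = template_variables(template)
    return {
        "missing_in_node": sorted(used - node_inputs),
        "unused_by_prompt": sorted(node_inputs - used),
    }


def render(template, values):
    """Fill each placeholder with the matching value (simplified rendering)."""
    return PLACEHOLDER.sub(lambda m: str(values[m.group(1)]), template)


report = check_inputs(PROMPT, NODE_INPUTS)
print(report)

rendered = render(
    PROMPT,
    {
        "firstName": "Jake",
        "context": "The Alpine Explorer Tent has a waterproof design.",
        "question": "Is the tent waterproof?",
    },
)
print(rendered)
```

If either list in the report is non-empty, the prompt and the node definition have drifted apart, for example after editing a variant by hand.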

-

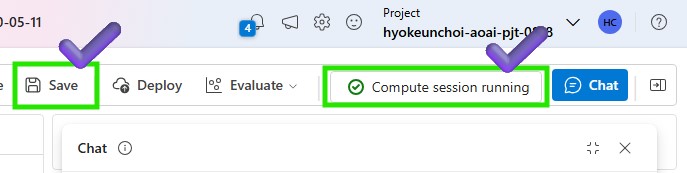

Save your modified flow. Make sure that your compute instance is running to execute the updated chat flow

-

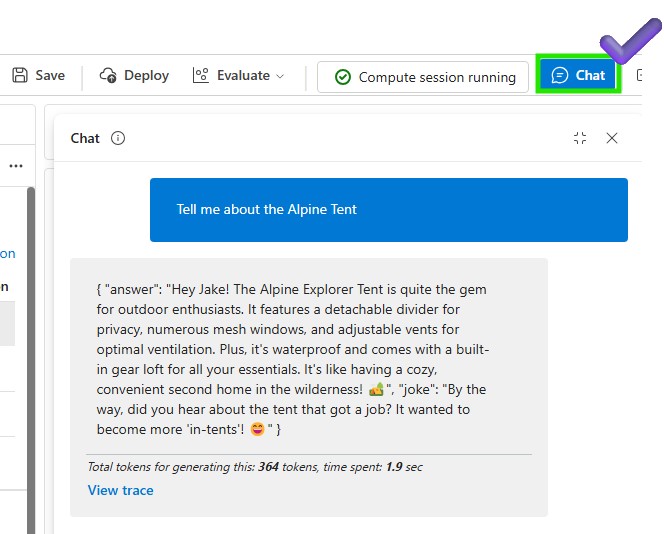

Let’s test the current flow on the chat window

-

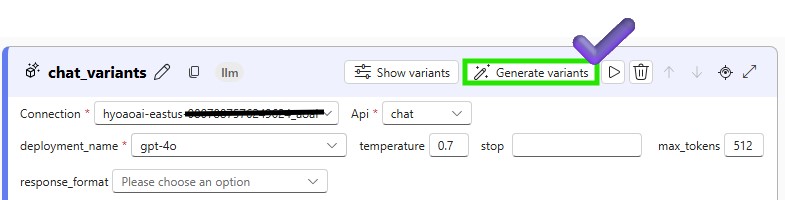

Now you can generate a variant and compare the results with the prompt written in English. Click the generate variant button to create a new variant.

- Add the variant name and the Korean prompt below. Click the save button to save the variant.

  ```jinja
  system:
  당신은 사람들이 정보를 찾을 수 있도록 도와주는 AI 어시스턴트입니다. 어시스턴트로서
  를 사용하여 질문에 간결하고 간결하게, 그리고 개성 있는 방식으로 답변하고
  마크다운을 사용하여 간단하고 간결하게 답변하고 적절한 이모티콘으로 개인적인 감각을 더할 수도 있습니다.

  "그런데, "로 시작하는 재치 있는 농담을 추가하세요. 답변의 농담 부분에서는 고객의 이름을 언급하지 마세요.
  농담은 질문한 특정 질문과 관련이 있어야 합니다.
  예를 들어 텐트에 대한 질문인 경우 농담은 텐트와 구체적으로 관련된 것이어야 합니다.

  다음과 같은 json 객체로 한국어로 응답합니다.
  {
    "answer":
    "joke":
  }

  # Customer
  당신은 {{firstName}} 님이 이 질문에 대한 답변을 찾도록 돕고 있습니다.
  답변에 상대방의 이름을 사용하여 상대방을 언급하세요.

  # Context
  다음 컨텍스트를 사용하여 {{firstName}}에게 보다 개인화된 응답을 제공하세요. 한국어로 답변 바랍니다:
  {{context}}

  user:
  {{question}}
  ```

- Add the variant name and the Japanese prompt below. Click the save button to save the variant.

  ```jinja
  system:
  あなたは、人々が情報を見つけるのを助けるAIアシスタントです。アシスタントとして
  を使用して質問に簡潔に、簡潔に、そして個性的な方法で答えたり
  マークダウンを使用してシンプルかつ簡潔に回答し、適切な絵文字で個人的な感覚を加えることもできます。

  「ところで、」で始まるウィットに富んだジョークを加えましょう。回答の冗談の部分では、顧客の名前に言及しないでください。
  ジョークは、質問された特定の質問に関連している必要があります。
  例えば、テントに関する質問の場合、冗談はテントと具体的に関連するものでなければなりません。

  次のようなjsonオブジェクトで日本語で回答します。
  {
    "answer":
    "joke":
  }

  # Customer
  あなたは {{firstName}} がこの質問に対する答えを見つけるのを手伝っています。
  回答に相手の名前を使用して、相手の名前を言及してください。

  # Context
  次のコンテキストを使用して {{firstName}} に対してよりパーソナライズされた回答を提供します。日本語で回答してください:
  {{context}}

  user:
  {{question}}
  ```

- Now you can test the variants in the chat window by setting one of the variants as the default. Click the Run button to test the variant.
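When comparing variants by hand, one quick objective check is whether each response follows the output contract stated in the prompts: a JSON object with `answer` and `joke` keys, with the joke starting with the language-specific opener. The sketch below shows one way to check that. The sample responses and the `check_response` helper are hypothetical, and the variant names are placeholders for whatever names you gave your variants.

```python
import json

REQUIRED_KEYS = ("answer", "joke")


def check_response(raw, joke_prefix):
    """Return a list of problems found in one raw model response (empty means OK)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return ["not valid JSON"]
    if not isinstance(data, dict):
        return ["not a JSON object"]
    problems = [f"missing key: {key}" for key in REQUIRED_KEYS if key not in data]
    if "joke" in data and not str(data["joke"]).startswith(joke_prefix):
        problems.append(f"joke does not start with {joke_prefix!r}")
    return problems


# Hypothetical responses copied from the chat window, paired with the joke
# opener each prompt asks for (English, Korean, Japanese).
SAMPLES = {
    "variant_0": (
        '{"answer": "Yes, Jake, it is waterproof.", '
        '"joke": "By the way, this tent handles rain better than my weekend plans."}',
        "By the way",
    ),
    "variant_1": ('{"answer": "네, 방수 설계입니다."}', "그런데"),
    "variant_2": ("はい、防水です。", "ところで"),
}

results = {name: check_response(raw, prefix) for name, (raw, prefix) in SAMPLES.items()}
for name, problems in results.items():
    print(name, "OK" if not problems else problems)
```

This only checks the response format. Judging whether the answer and joke are good still requires the manual or automated evaluation covered in the other sections.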

3️⃣ Create QnA Relevance Evaluation flow with variants

-

Go to the Azure AI Foundry > Tools > Evaluation

-

Click on the “+New Evaluation” on the Automated evaluations tab to create.

-

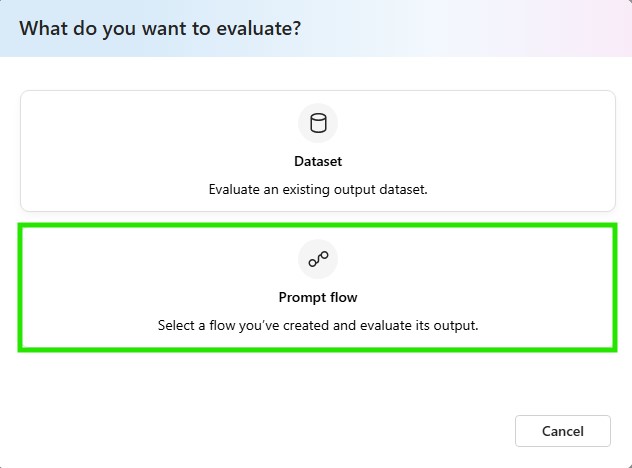

Click on the “Prompt flow” to select a flow to evaluaute its output

-

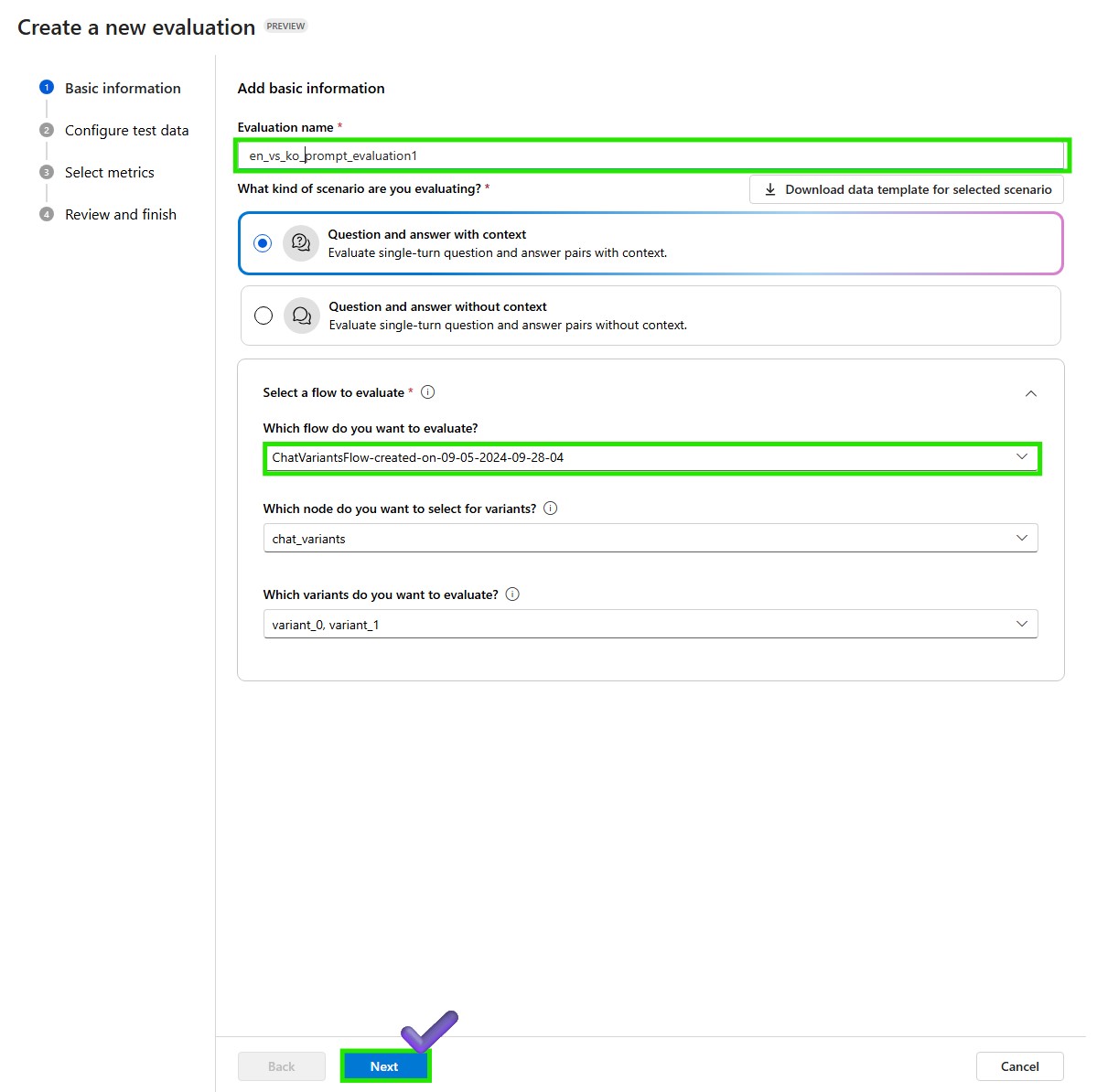

Add basic information for the evaluation. Put the name of the evaluation as ‘variant1_en’ and select the flow you want to evaluate. Select “Question and answer with context” as your evaluation scenario. Click the Next button to continue.

-

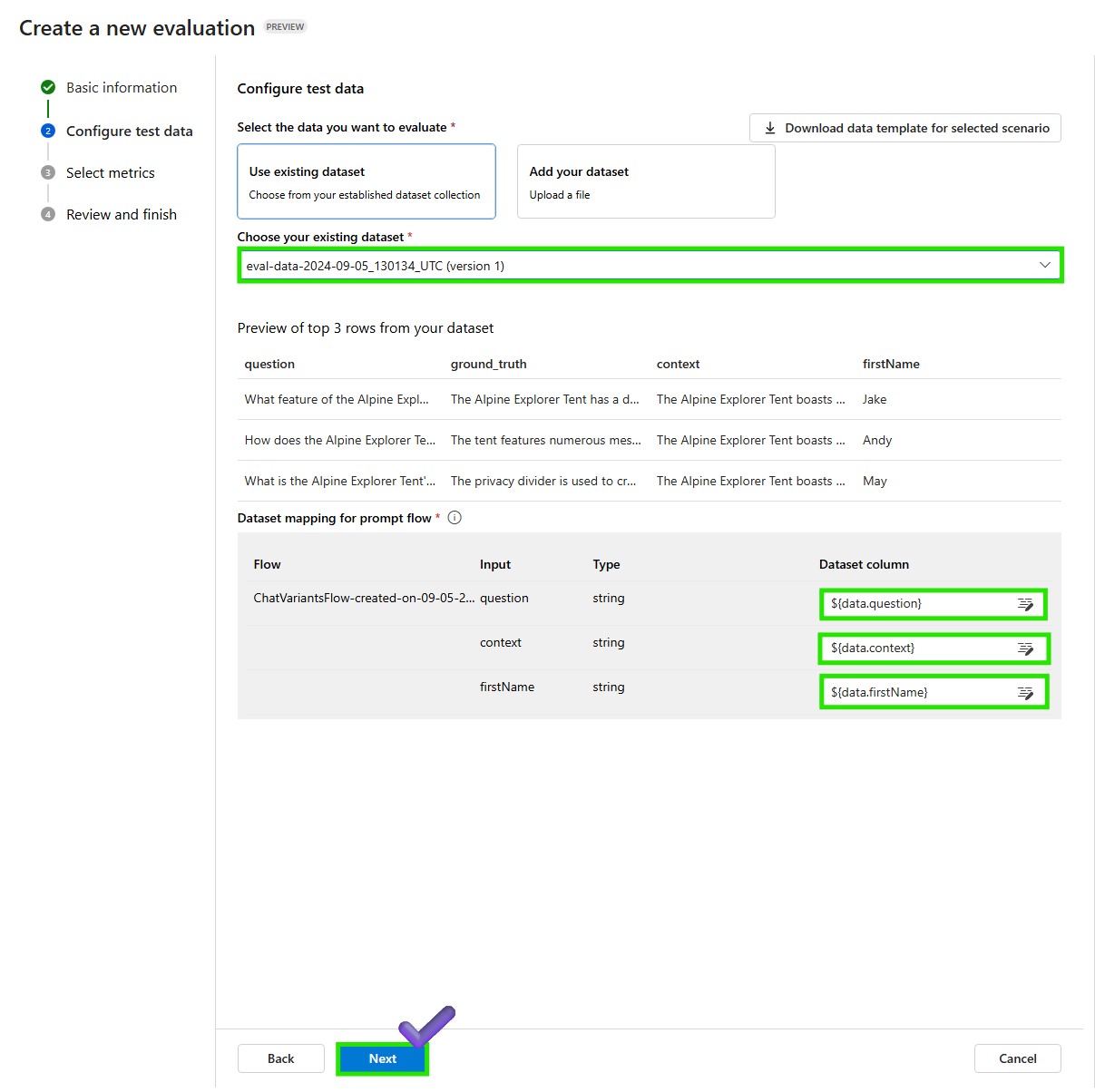

Add ‘simple_qna_data_en.jsonl’ as your dataset and map the question, firstName, context and dataset column. Click the Next button to continue.

-

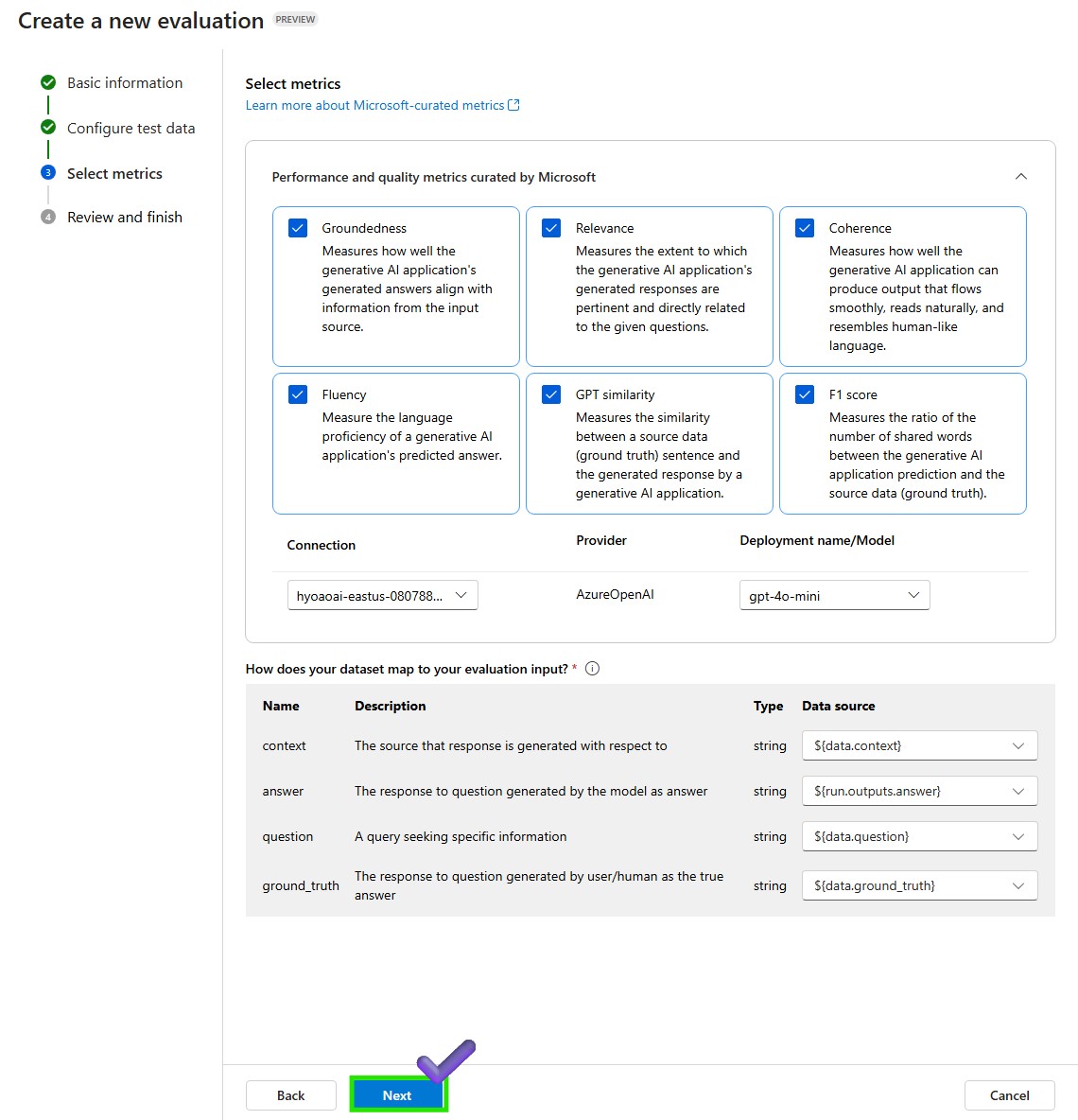

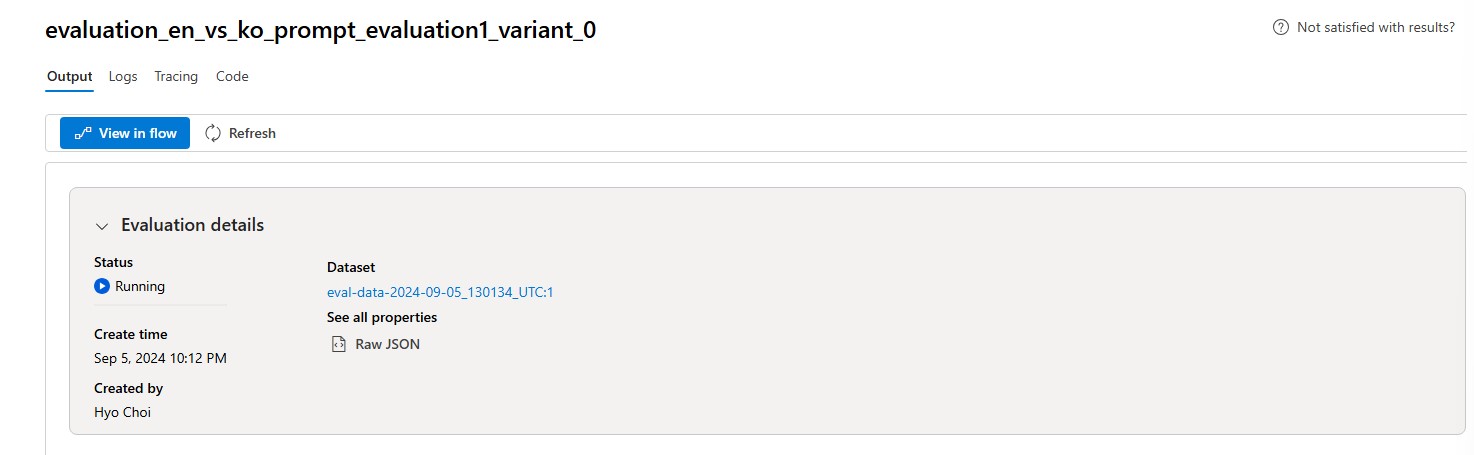

Select Evaluation Metrics. You can select the metrics against which you want to evaluate the model. Enter the connection and deployment model and click the Next button, then review the final configuration and click the Submit button to start/wait for the evaluation.
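The dataset mapping step above connects each flow input to a dataset column. The sketch below illustrates that idea in plain Python. It assumes the `${data.<column>}` reference style for column mappings; the sample rows are hypothetical stand-ins for the contents of `simple_qna_data_en.jsonl`, and `resolve_row` is an illustrative helper, not an SDK function.

```python
import json
import re

# Hypothetical rows in the shape the flow expects: question, firstName, context.
ROWS_JSONL = """\
{"question": "Is the tent waterproof?", "firstName": "Jake", "context": "The tent has a waterproof design."}
{"question": "Where can I store gear?", "firstName": "Mina", "context": "It has a built-in gear loft."}
"""

# Flow input name -> dataset column reference (assumed ${data.<column>} style).
COLUMN_MAPPING = {
    "question": "${data.question}",
    "firstName": "${data.firstName}",
    "context": "${data.context}",
}

DATA_REF = re.compile(r"^\$\{data\.(\w+)\}$")


def resolve_row(row, mapping):
    """Build the flow inputs for one dataset row according to the column mapping."""
    inputs = {}
    for flow_input, ref in mapping.items():
        match = DATA_REF.match(ref)
        if match is None:
            # Anything that is not a ${data.x} reference is treated as a literal.
            inputs[flow_input] = ref
            continue
        column = match.group(1)
        if column not in row:
            raise KeyError(f"dataset row has no column {column!r} for input {flow_input!r}")
        inputs[flow_input] = row[column]
    return inputs


rows = [json.loads(line) for line in ROWS_JSONL.splitlines() if line.strip()]
flow_inputs = [resolve_row(row, COLUMN_MAPPING) for row in rows]
for item in flow_inputs:
    print(item)
```

Running a check like this on a few rows before submitting the evaluation catches misspelled column names early, which would otherwise surface only after the run has started.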

🧪 +For Your Information

An evaluator is an asset that can be used to run an evaluation. You can define an evaluator in the SDK and run an evaluation to generate scores for one or more metrics. To use AI-assisted quality and safety evaluators with the prompt flow SDK, see “Evaluate with the prompt flow SDK”.
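To make the idea of an evaluator concrete, here is a minimal sketch in plain Python that does not import the SDK. It treats an evaluator as a callable that takes the fields of one row and returns a dictionary of metric scores. The two evaluator classes, the `run_evaluators` helper, and the sample rows are all hypothetical, and the word-overlap score is a crude stand-in rather than one of the AI-assisted metrics.

```python
from statistics import mean


class AnswerLengthEvaluator:
    """Hypothetical code-based evaluator: reports the answer length in characters."""

    def __call__(self, *, answer, **kwargs):
        return {"answer_length": len(answer)}


class ContextOverlapEvaluator:
    """Hypothetical evaluator: share of answer words that also appear in the context."""

    def __call__(self, *, answer, context, **kwargs):
        answer_words = answer.lower().split()
        context_words = set(context.lower().split())
        if not answer_words:
            return {"context_overlap": 0.0}
        hits = sum(word in context_words for word in answer_words)
        return {"context_overlap": hits / len(answer_words)}


def run_evaluators(rows, evaluators):
    """Apply every evaluator to every row and average each metric over all rows."""
    per_row = []
    for row in rows:
        scores = {}
        for evaluator in evaluators:
            scores.update(evaluator(**row))
        per_row.append(scores)
    metrics = {name: mean(scores[name] for scores in per_row) for name in per_row[0]}
    return per_row, metrics


# Hypothetical rows: question and context from the dataset, answer from the flow.
ROWS = [
    {
        "question": "Is the tent waterproof?",
        "context": "the tent has a waterproof design",
        "answer": "the tent is waterproof",
    },
    {
        "question": "Does it have storage?",
        "context": "it has a built-in gear loft",
        "answer": "yes",
    },
]

per_row, metrics = run_evaluators(ROWS, [AnswerLengthEvaluator(), ContextOverlapEvaluator()])
print(per_row)
print(metrics)
```

Keeping each evaluator as a small callable with keyword arguments means new metrics can be added without touching the loop that runs them.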