Get started with the Azure AI Vision Face UI SDK for iOS

In this sample, you will learn how to build and run the face liveness detection application.


API Reference Documentation

- Swift API reference: AzureAIVisionFaceUI

Prerequisites

- An Azure Face API resource subscription.

- A Mac with an iOS development environment (Xcode 13+) and an iPhone (iOS 14+).

- An Apple developer account to install and run development apps on the iPhone.

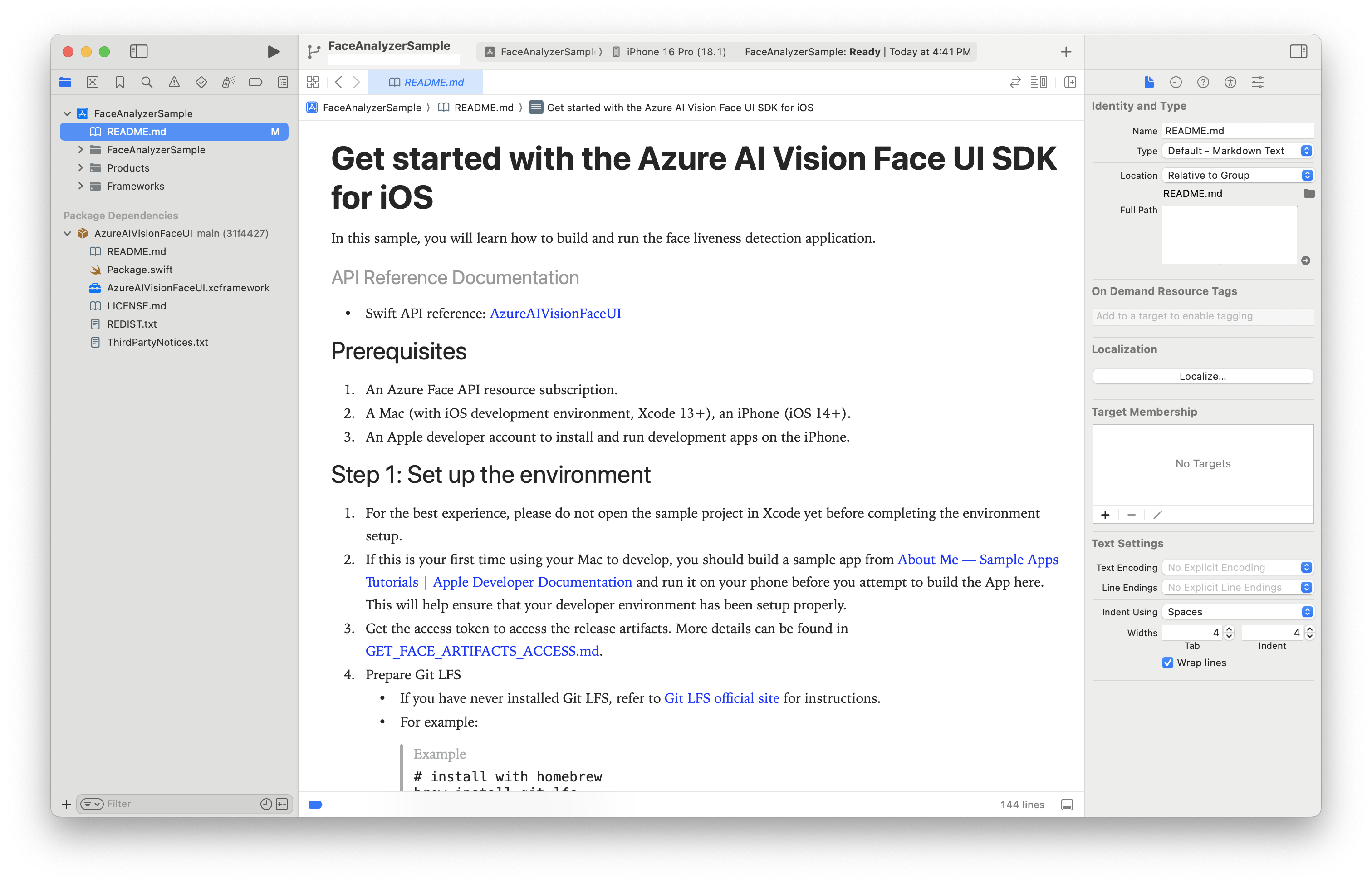

Step 1: Set up the environment

1. Prepare the Xcode environment: For the best experience, do not open the sample project in Xcode until you have completed the environment setup.

2. Verify your iOS development setup: If this is your first time developing on your Mac, build the "About Me" app from Apple's Sample Apps Tutorials (Apple Developer Documentation) and run it on your iPhone before you attempt to build this app. This helps ensure that your developer environment has been set up properly.

3. Get a PAT access token: Fetch the PAT access token required to download the release artifacts by calling the Get Client Assets Access Token API.

4. Install Git LFS:

- If you have never installed Git LFS, refer to the Git LFS official site for instructions.

- For example:

```sh
# install with Homebrew
brew install git-lfs

# verify and initialize
git lfs --version
git lfs install
```

5. Configure Git authentication: The sample app project has been preconfigured to reference the SDK through Swift Package Manager (SPM). Configure authorization for the Git repository from which SPM will pull the package:

5.1. Open your global Git config file.

```sh
# Print the location of the global config file (shown as "file:<path>"), then open that file in an editor
git config --global --show-origin --list | head -1

# Alternatively, open the global config file directly in Git's default editor
git config --global --edit
```
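If you would rather not copy the path out of the `--show-origin` output by hand, the first command can be wrapped in a small shell function. This is a minimal sketch: the function name `global_git_config_path` is hypothetical, and because the command prints nothing when the global config has no entries yet, the sketch assumes the usual default `~/.gitconfig` in that case.

```sh
# Hypothetical helper: print the path of the global Git config file.
global_git_config_path() {
  # --show-origin prefixes each entry with "file:<path>" followed by a tab;
  # keep only the first field of the first line.
  origin=$(git config --global --show-origin --list 2>/dev/null | head -n 1 | cut -f 1)
  if [ -n "$origin" ]; then
    # Strip the leading "file:" prefix to get a plain path.
    printf '%s\n' "${origin#file:}"
  else
    # No global entries yet: assume the usual default location.
    printf '%s\n' "$HOME/.gitconfig"
  fi
}

global_git_config_path
```

You can then open the reported file with any editor, for example `open -e "$(global_git_config_path)"` on macOS.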

5.2. Get the PAT access token from step 3 above, then add it to the config:

```config
[credential "https://msface.visualstudio.com"]
username = pat
helper =
helper = "!f() { test \"$1\" = get && echo \"password=INSERT_PAT_HERE\"; }; f"
# Get the PAT from the Get Client Assets Access Token REST API and paste it above in place of "INSERT_PAT_HERE".
# The username does not matter for a PAT, as long as it is not left blank.
# The first, empty helper line is intentional (not a typo): it clears any previously configured helpers.
```
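The `helper` value is a Git credential helper written as an inline shell function. Because it starts with `!`, Git runs it as a shell snippet, appends the action (`get`, `store`, or `erase`) as the first argument, and reads `key=value` lines from its output. The sketch below runs the same function body outside Git, with the placeholder token, to show that it answers only `get` requests; the `printf` input imitates the request Git sends for this host and is illustrative.

```sh
# Same function body as the helper in the config above (placeholder PAT).
f() { test "$1" = get && echo "password=INSERT_PAT_HERE"; }

# Simulate the "get" request Git sends when SPM fetches the package.
# The helper ignores the protocol/host lines on stdin and always returns the PAT.
printf 'protocol=https\nhost=msface.visualstudio.com\n\n' | f get
# prints: password=INSERT_PAT_HERE

# "store" and "erase" print nothing, so this helper never saves or deletes credentials.
printf 'protocol=https\nhost=msface.visualstudio.com\n\n' | f store || true
```

After saving your real config, `printf 'protocol=https\nhost=msface.visualstudio.com\n\n' | git credential fill` should print `username=pat` together with your PAT, which is a quick end-to-end check. Note that this echoes the token to the terminal.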

- For other dependency methods, such as CocoaPods, or other Git authentication methods, refer to the FAQ section of this document.

6. Install Xcode Command Line Tools: If the Xcode Command Line Tools have never been installed on your machine, install them first by following the instructions on the Apple Developer website.
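To check whether this step is needed: `xcode-select -p` prints the active developer directory when developer tools (the Command Line Tools or a full Xcode) are installed and exits with an error when they are not, and `xcode-select --install` opens Apple's installer. A minimal sketch that only reports the status and prints the install command rather than running it (the function name `clt_status` is hypothetical):

```sh
# Hypothetical helper: report whether developer tools are available.
clt_status() {
  # Guard with command -v so the check also behaves on machines without xcode-select.
  if command -v xcode-select >/dev/null 2>&1 && xcode-select -p >/dev/null 2>&1; then
    echo "installed"
  else
    echo "missing"
  fi
}

if [ "$(clt_status)" = "missing" ]; then
  # Print the install command instead of launching the installer automatically.
  echo "Run: xcode-select --install"
fi
```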

Step 2: Build and run sample app

Build the sample

- Download the sample app folder and extract it, but do not open it in Xcode yet.

Run the following command in Terminal from the directory that contains your project's .xcodeproj file. It resolves the package through your system Git, which should already have Git LFS configured, as described in Step 1.

```sh
xcodebuild -scmProvider system -resolvePackageDependencies
```

Open the .xcodeproj file.
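The dependency resolution command can also be scripted so it fails early with a clear message. This is a sketch under stated assumptions: the function name `resolve_sample_packages` is hypothetical, and it only adds two pre-checks (Git LFS is available, and the given directory contains an `.xcodeproj`) before running the same `xcodebuild` command from that directory.

```sh
# Hypothetical helper. Usage: resolve_sample_packages <directory containing the .xcodeproj>
resolve_sample_packages() {
  dir=${1:-.}
  # The system Git used by xcodebuild needs Git LFS (see Step 1).
  if ! git lfs version >/dev/null 2>&1; then
    echo "error: Git LFS is not installed or not on PATH" >&2
    return 1
  fi
  # If the glob matches nothing, it stays literal and the -d test fails.
  set -- "$dir"/*.xcodeproj
  if [ ! -d "$1" ]; then
    echo "error: no .xcodeproj found in $dir" >&2
    return 1
  fi
  # -scmProvider system resolves packages through the system Git and its credential helper.
  (cd "$dir" && xcodebuild -scmProvider system -resolvePackageDependencies)
}
```

For example, `resolve_sample_packages ~/Downloads/sample` (a hypothetical path) resolves the packages for the extracted sample before you open it.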

Verify that the package dependency has been added through Swift Package Manager, or through one of the other methods mentioned above.

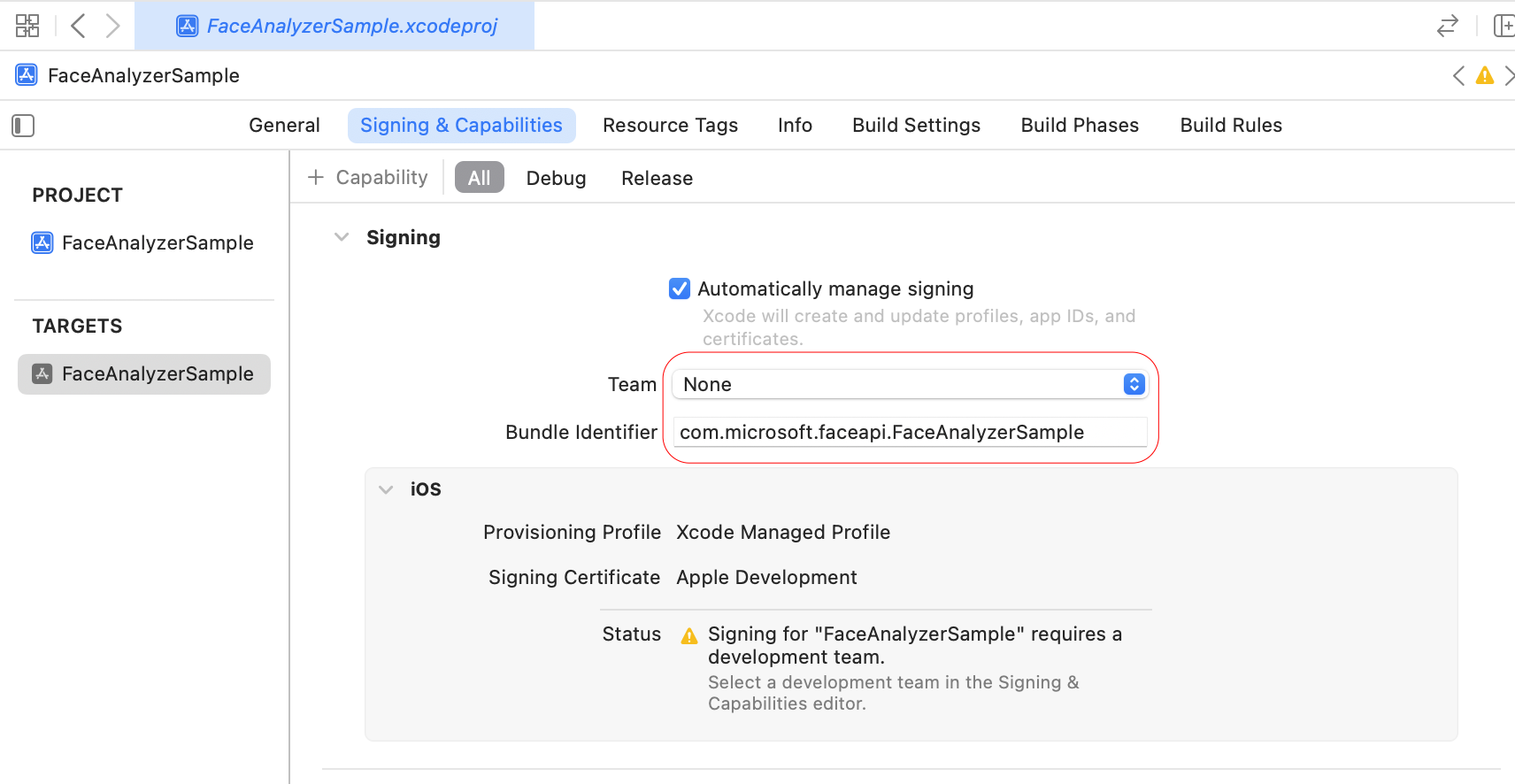

In Xcode → Targets → Signing & Capabilities, set the app bundle identifier and the developer team.

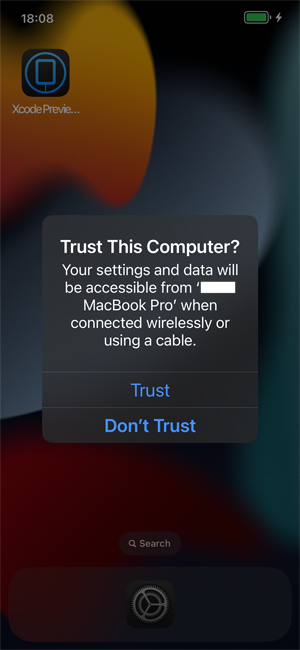

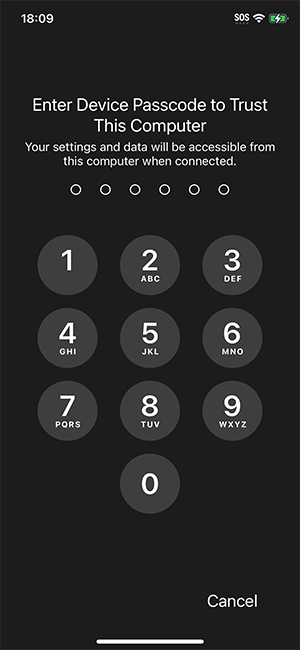

Connect your iPhone to the Mac, then trust the Mac when prompted.

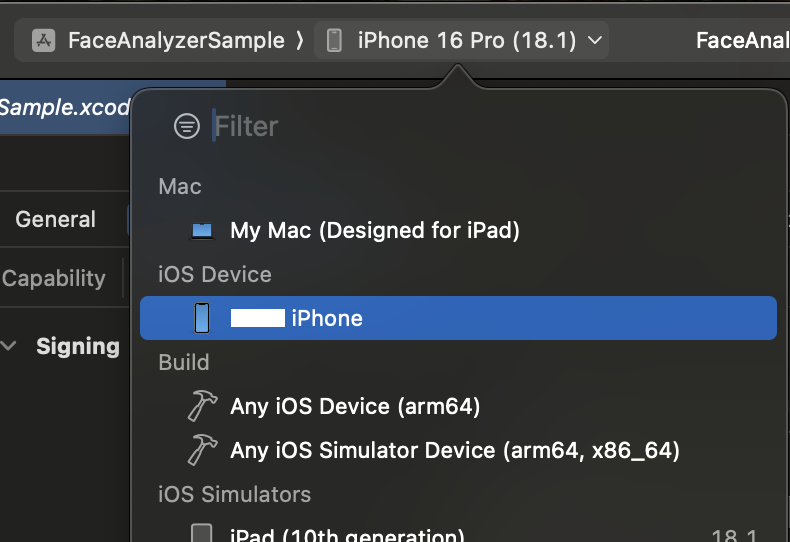

Select your iPhone in the Xcode top bar.

Build and run the app.

Run the sample

- Allow camera permission when prompted.

- This sample creates the token on the client, so it needs the API configuration. In a production scenario, this is not necessary, because the token would typically be created by your own server so that the subscription key never ships with the app. For now, go to the settings page and configure:

  - API endpoint

  - Subscription key

- Try one of the buttons (such as “Liveness”) to begin testing.
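A quick sanity check of the two settings values can save a failed first run. This is a hypothetical helper, not part of the sample: it assumes the endpoint is the `https://` resource endpoint shown for your Face resource in the Azure portal (for example `https://<resource-name>.cognitiveservices.azure.com/`), and it only checks the scheme and that the key is non-empty, not that the key is valid.

```sh
# Hypothetical helper. Usage: check_face_settings <endpoint> <subscription key>
check_face_settings() {
  endpoint=$1
  key=$2
  # The endpoint should be an HTTPS URL.
  case $endpoint in
    https://*) ;;
    *) echo "error: endpoint must start with https://" >&2; return 1 ;;
  esac
  # An empty key is the most common copy-and-paste mistake.
  if [ -z "$key" ]; then
    echo "error: subscription key is empty" >&2
    return 1
  fi
  echo "ok"
}
```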

Test out key scenarios

Liveness

- Tap “Liveness” then “Start” and show your face.

- The screen flashes for liveness analysis.

- Observe the Real/Spoof status.

LivenessWithVerify

- Tap “LivenessWithVerify” then select a reference face image.

- Show your face to the camera.

- Observe the Real/Spoof status, verification status, and confidence score.