Concepts

1 - Azure OpenAI

Azure OpenAI Service provides REST API access to OpenAI’s language models, including the GPT-4, GPT-35-Turbo, and Embeddings model series. The GPT-4 and GPT-35-Turbo model series have reached general availability. These models can be adapted to your specific task, including but not limited to content generation, summarization, semantic search, and natural language to code translation. You can access the service through REST APIs, the Python SDK, or the web-based interface in Azure OpenAI Studio.

Important concepts about Azure OpenAI:

Models available

- GPT-35-Turbo series: the typical “ChatGPT” model, recommended for most Azure OpenAI projects. When more capability is needed, GPT-4 can be considered (take into account that it implies higher latency and cost).

- GPT-4 series: the most advanced language models, available once you fill in the GPT-4 Request Form.

- Embeddings series: embeddings represent text as vectors that capture semantic similarity, which makes it easier to do machine learning on large inputs. You can therefore use embeddings to determine whether two text chunks are semantically related, and compute a score to assess their similarity.
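As a rough illustration of the similarity score mentioned for embeddings, the sketch below computes cosine similarity, a common choice for comparing embedding vectors. The three-dimensional vectors are made-up stand-ins (an assumption for readability); real embeddings returned by an Embeddings model have many more dimensions.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Toy vectors standing in for real embeddings (hypothetical values).
invoice = [0.9, 0.1, 0.0]
bill = [0.8, 0.2, 0.1]
weather = [0.0, 0.1, 0.9]

# Related texts should score higher than unrelated ones.
print(cosine_similarity(invoice, bill) > cosine_similarity(invoice, weather))  # prints True
```

A score close to 1 suggests the two chunks are semantically similar, while a score near 0 suggests they are unrelated.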

Take into account that not all models are available in all Azure regions. For regional availability, check the documentation: Model summary table and region availability

Deployment: once you instantiate a specific model, it becomes available as a deployment. You can create and delete deployments of available models as you wish. Deployments are managed through Azure OpenAI Studio.

Quotas: quotas in Azure are allocated per model and per region, within a subscription. Learn more about quotas. The documentation also describes best practices for managing your quota.

AI Hub uses an Azure OpenAI Embeddings model to vectorize the content and a ChatGPT model to converse with that content.

More information at the official documentation: What is Azure OpenAI

2 - Azure AI Content Safety

Azure AI Content Safety detects harmful user-generated and AI-generated content in applications and services. Azure AI Content Safety includes text and image APIs that allow you to detect material that is harmful. We also have an interactive Content Safety Studio that allows you to view, explore and try out sample code for detecting harmful content across different modalities.

Moderation works for both text and image content. It can be used to detect adult content, racy content, offensive content, and more. The service can be used to moderate content from a variety of sources, such as social media, public-facing communication tools, and enterprise applications.

- Language models analyze multilingual text, in both short and long form, with an understanding of context and semantics

- Vision models perform image recognition and detect objects in images using state-of-the-art Florence technology

- AI content classifiers identify sexual, violent, hate, and self-harm content with high levels of granularity

- Content moderation severity scores indicate the level of content risk on a scale of low to high

Azure AI Content Safety Studio is an online tool designed to handle potentially offensive, risky, or undesirable content using cutting-edge content moderation ML models. It provides templates and customized workflows, enabling users to choose and build their own content moderation system. Users can upload their own content or try it out with provided sample content.

AI Hub uses Azure AI Content Safety to moderate the content of the user’s query, and to moderate the content of the response generated by our LLM (ChatGPT).
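The two moderation points described above (the user’s query and the generated response) could be wired together roughly as follows. This is a minimal sketch, not AI Hub’s actual implementation: `analyze` and `generate` are hypothetical callables standing in for the Content Safety call and the LLM call, and the category names, severity values, and limits are illustrative assumptions.

```python
from typing import Callable, Dict

# Assumed result shape: category name -> severity (higher means more harmful).
Severities = Dict[str, int]

# Hypothetical per-category limits; not values taken from AI Hub.
MAX_ALLOWED: Severities = {"hate": 2, "self_harm": 0, "sexual": 2, "violence": 2}

REFUSAL = "Sorry, I can't help with that request."


def is_allowed(severities: Severities, limits: Severities = MAX_ALLOWED) -> bool:
    """Return True only if every category is at or below its limit."""
    return all(severities.get(cat, 0) <= limit for cat, limit in limits.items())


def moderated_chat(
    query: str,
    analyze: Callable[[str], Severities],
    generate: Callable[[str], str],
) -> str:
    """Moderate the user's query first, then the model's response."""
    if not is_allowed(analyze(query)):
        return REFUSAL
    answer = generate(query)
    if not is_allowed(analyze(answer)):
        return REFUSAL
    return answer


# Stand-ins for the real services, for demonstration only.
def fake_analyze(text: str) -> Severities:
    return {"violence": 6} if "attack" in text.lower() else {}


def fake_generate(query: str) -> str:
    return f"Here is what the documents say about: {query}"


print(moderated_chat("What is our refund policy?", fake_analyze, fake_generate))
print(moderated_chat("How do I attack someone?", fake_analyze, fake_generate))
```

Passing the analyzer and generator in as parameters keeps the gate easy to test without calling any cloud service.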

Learn more at the official documentation: What is Azure AI Content Safety?

3 - Azure Cognitive Search

Azure Cognitive Search (formerly known as “Azure Search”) is a cloud search service that gives developers infrastructure, APIs, and tools for building a rich search experience over private, heterogeneous content in web, mobile, and enterprise applications.

Search is foundational to any app that surfaces text to users, where common scenarios include catalog or document search, online retail apps, or data exploration over proprietary content. When you create a search service, you’ll work with the following capabilities:

- A search engine for full text and vector search over a search index containing user-owned content

- Rich indexing, with lexical analysis and optional AI enrichment for content extraction and transformation

- Rich query syntax for vector queries, text search, fuzzy search, autocomplete, geo-search and more

- Programmability through REST APIs and client libraries in Azure SDKs

- Azure integration at the data layer, machine learning layer, and AI (Azure AI services)

AI Hub uses Azure Cognitive Search to serve an index of vectorized content that our LLM (ChatGPT) uses to respond to the user’s query.
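To make the flow concrete, here is a small in-memory sketch of the idea: find the indexed passages closest to the query vector, then place them in the prompt sent to the LLM. In practice Azure Cognitive Search performs the ranking on the service side; the `top_k` and `build_prompt` helpers, the toy vectors, and the prompt wording are all assumptions made for illustration.

```python
import math
from typing import List, Sequence, Tuple

IndexEntry = Tuple[str, Sequence[float]]  # (passage text, passage vector)


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero, equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_k(query_vec: Sequence[float], index: List[IndexEntry], k: int = 2) -> List[str]:
    """Return the k passages whose vectors are most similar to the query."""
    ranked = sorted(index, key=lambda entry: cosine(query_vec, entry[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, passages: List[str]) -> str:
    """Ground the question in the retrieved passages."""
    sources = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the sources below.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}"
    )


# Toy index with hypothetical 2-dimensional vectors.
index: List[IndexEntry] = [
    ("Refunds take 5 days.", [1.0, 0.0]),
    ("Offices close at 6pm.", [0.0, 1.0]),
    ("Refunds need a receipt.", [0.9, 0.1]),
]

passages = top_k([1.0, 0.05], index, k=2)
print(build_prompt("How do refunds work?", passages))
```

Only the retrieved passages reach the model, which keeps the prompt short and ties the answer to the indexed content.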

Learn more at the official documentation: What is Azure Cognitive Search?

Learning Path: Implement knowledge mining with Azure Cognitive Search

4 - Azure Container Apps

Azure Container Apps is a managed serverless container service that enables executing code in containers without the overhead of managing virtual machines, orchestrators, or adopting a highly opinionated application framework.

Common uses of Azure Container Apps include:

- Deploying API endpoints

- Hosting background processing jobs

- Handling event-driven processing

- Running microservices

AI Hub uses Azure Container Apps to deploy the chat user interface that will answer user queries based on the company’s documents.

Learn more about Azure Container Apps: Azure Container Apps documentation

5 - Azure Functions

Azure Functions is a serverless solution that allows you to write less code, maintain less infrastructure, and save on costs. Instead of worrying about deploying and maintaining servers, the cloud infrastructure provides all the up-to-date resources needed to keep your applications running.

Functions provides a comprehensive set of event-driven triggers and bindings that connect your functions to other services without having to write extra code. You focus on the code that matters most to you, in the most productive language for you, and Azure Functions handles the rest.

AI Hub uses Azure Functions to split the documents’ text into chunks and create embeddings that are added to the Azure Cognitive Search index.
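The chunking step can be pictured with the sketch below, which splits text into fixed-size, overlapping character windows. The source does not state which chunking strategy AI Hub uses, so the function name, the character-based splitting, and the default sizes are assumptions; real pipelines often split by tokens, sentences, or pages instead.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> List[str]:
    """Split text into windows of chunk_size characters that share `overlap` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a window has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, overlap=2))
# prints ['abcd', 'cdef', 'efgh', 'ghij']
```

The overlap keeps a sentence that straddles a boundary visible in both neighboring chunks; each chunk would then be sent to the Embeddings model and stored in the index.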

Learn more about Azure Functions: What is Azure Functions? For the best experience with the Functions documentation, choose your preferred development language from the list of native Functions languages at the top of the article.

6 - Azure Storage

Azure Blob Storage is Microsoft’s object storage solution for the cloud. Blob Storage is optimized for storing massive amounts of unstructured data. Unstructured data is data that doesn’t adhere to a particular data model or definition, such as text or binary data.

Blob Storage is designed for:

- Serving images or documents directly to a browser.

- Storing files for distributed access.

- Streaming video and audio.

- Writing to log files.

- Storing data for backup and restore, disaster recovery, and archiving.

- Storing data for analysis by an on-premises or Azure-hosted service.

AI Hub uses Blob Storage to store the documents (PDFs) that are then vectorized, indexed, or analyzed.

Learn more about Azure Blob Storage: What is Azure Blob Storage?

7 - Semantic Kernel

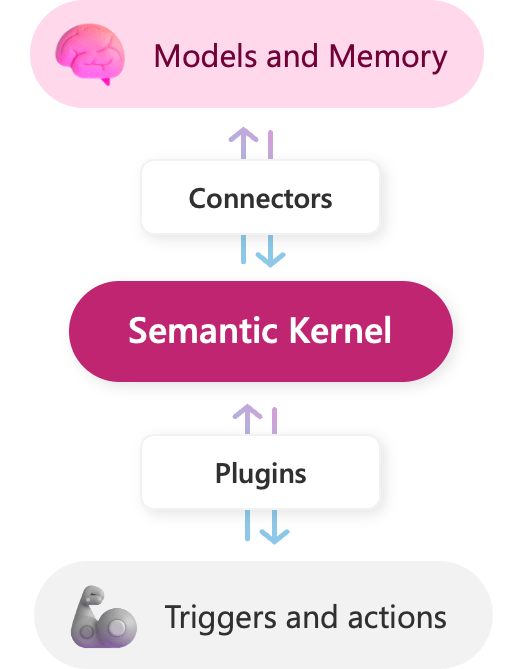

Semantic Kernel is an open-source SDK that lets you easily combine AI services like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C# and Python. By doing so, you can create AI apps that combine the best of both worlds.

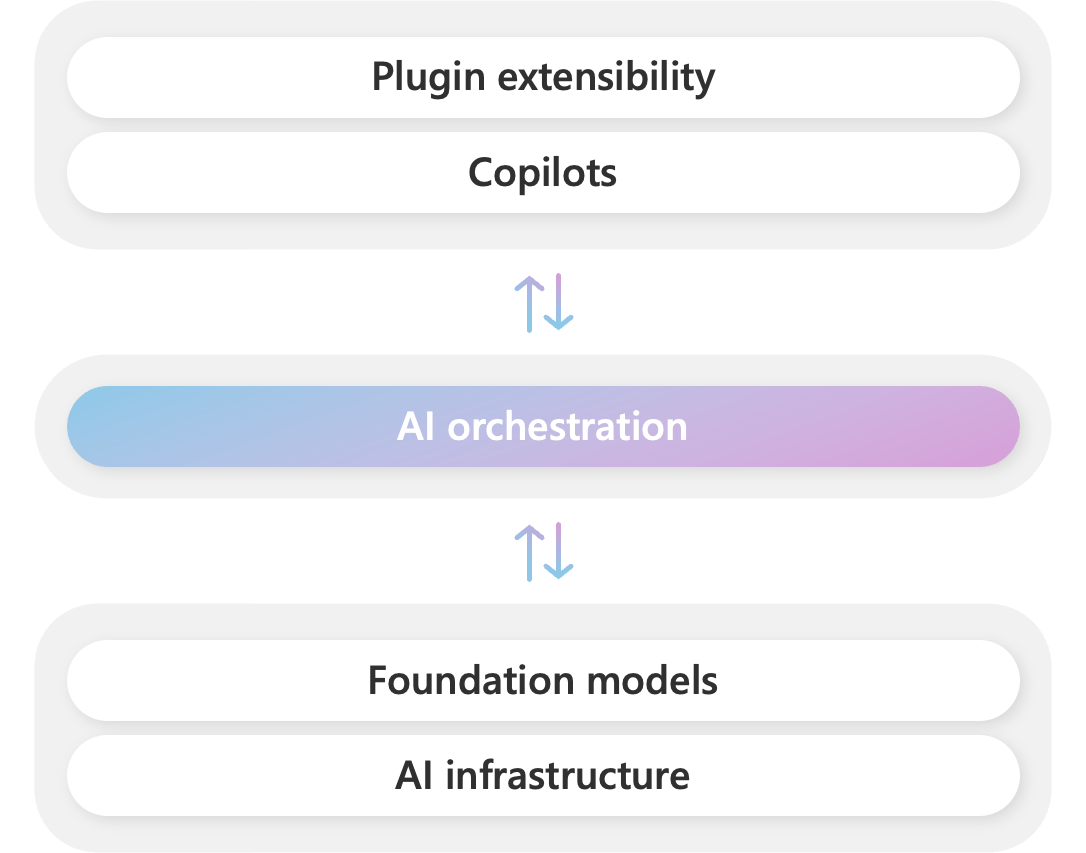

Microsoft powers its Copilot system with a stack of AI models and plugins. At the center of this stack is an AI orchestration layer that allows us to combine AI models and plugins together to create brand new experiences for users.

To help developers build their own Copilot experiences on top of AI plugins, we have released Semantic Kernel, a lightweight open-source SDK that allows you to orchestrate AI plugins. With Semantic Kernel, you can leverage the same AI orchestration patterns that power Microsoft 365 Copilot and Bing in your own apps, while still leveraging your existing development skills and investments.

Semantic Kernel and the AI Hub

At present, the AI Hub does not use Semantic Kernel directly in its use case examples. However, it demonstrates its usage through two examples that show how easy it is to create OpenAI plugins using Semantic Kernel as an SDK.

Here are the available plugin examples:

Call Analysis: This plugin uses Semantic Kernel to implement features similar to those demonstrated in the Call Center Analytics use case.

Financial Product Comparison: This plugin employs Semantic Kernel to combine native and prompt functions to compare a specific financial product with others in the market. It combines prompts with the Web Search Engine Plugin, which is further integrated with the Bing Search connector.
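The idea of combining native and prompt functions can be pictured in plain Python. The sketch below deliberately does not use the Semantic Kernel API: `web_search`, `compare_product`, the prompt template, and the `complete` callable are hypothetical names chosen for illustration, with a stub in place of the search connector and the LLM.

```python
from typing import Callable, List

# Prompt function: a template whose rendered text would be sent to an LLM.
PROMPT_TEMPLATE = "Compare {product} with these market alternatives:\n{findings}"


def web_search(query: str) -> List[str]:
    """Native function: ordinary code. A stub standing in for a search connector."""
    return [f"Result about {query}"]


def compare_product(
    product: str,
    search: Callable[[str], List[str]],
    complete: Callable[[str], str],
) -> str:
    """Feed the native function's output into the prompt, then call the model."""
    findings = "\n".join(search(f"{product} alternatives"))
    prompt = PROMPT_TEMPLATE.format(product=product, findings=findings)
    return complete(prompt)


# An echo function replaces the LLM so the rendered prompt is visible.
print(compare_product("Fixed-rate mortgage", web_search, lambda prompt: prompt))
```

In Semantic Kernel the orchestration layer takes care of this wiring; the sketch only shows how data flows from the native function into the prompt.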

For more information, please refer to the OpenAI Plugins section. You can also learn more about Semantic Kernel in its official documentation.