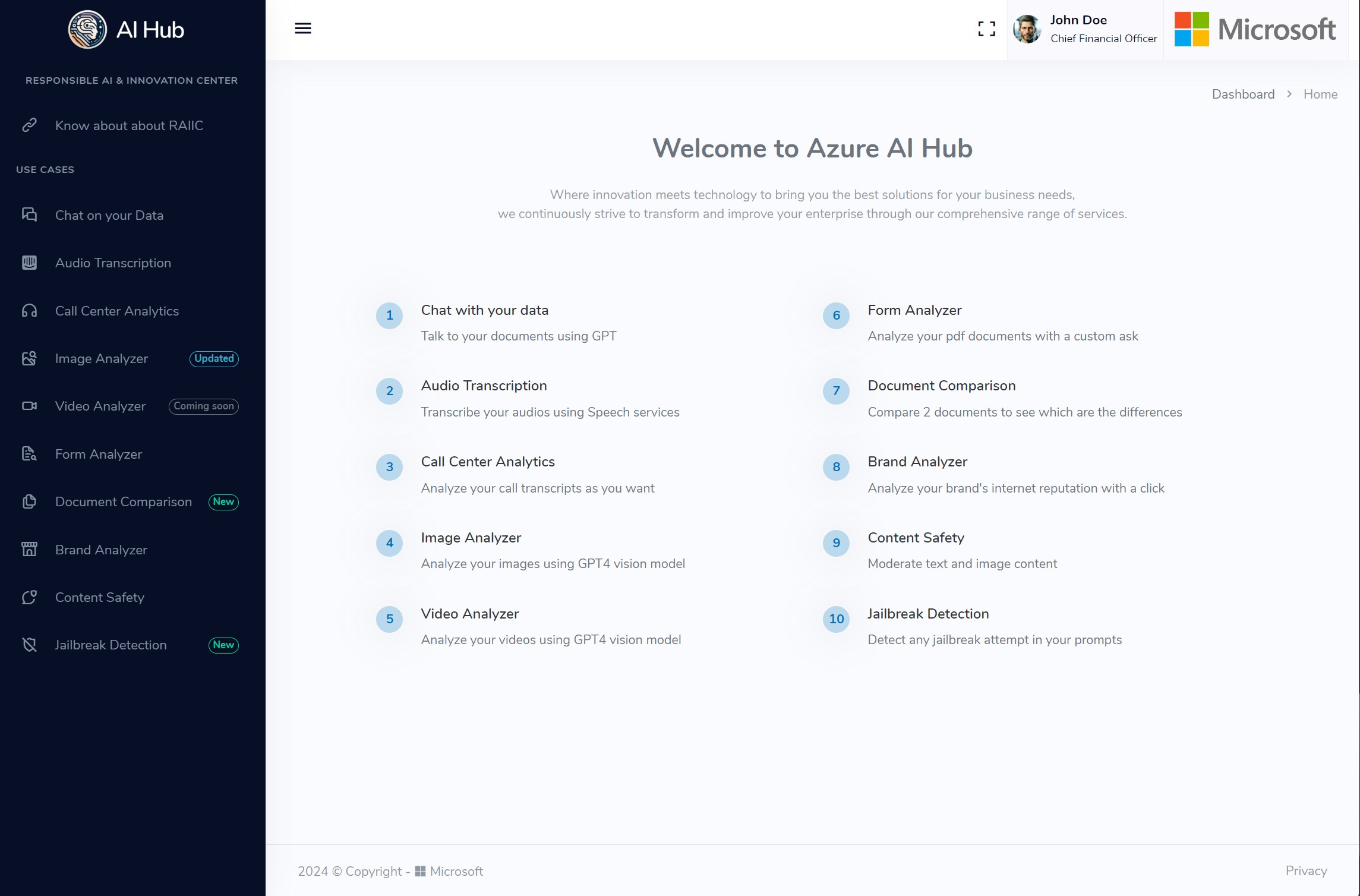

AI Hub! — To show in a simple way different Azure OpenAI features and services.

This delivery guide walks you through some of the most common scenarios and use cases for Azure OpenAI.

The AI Hub solution is a new offering with different delivery modes. You can use it as a presales activity to demonstrate to customers the various Azure OpenAI features that are accessible with minimal coding effort, or you can deliver it as a non-technical hands-on session. The latter is intended for executives or other non-technical leads. The goal is to show different solutions that customers can test before proceeding with further steps such as VBD engagements or new AOAI opportunities.

To use this solution, you will need the following:

By the end of this delivery, you will be able to:

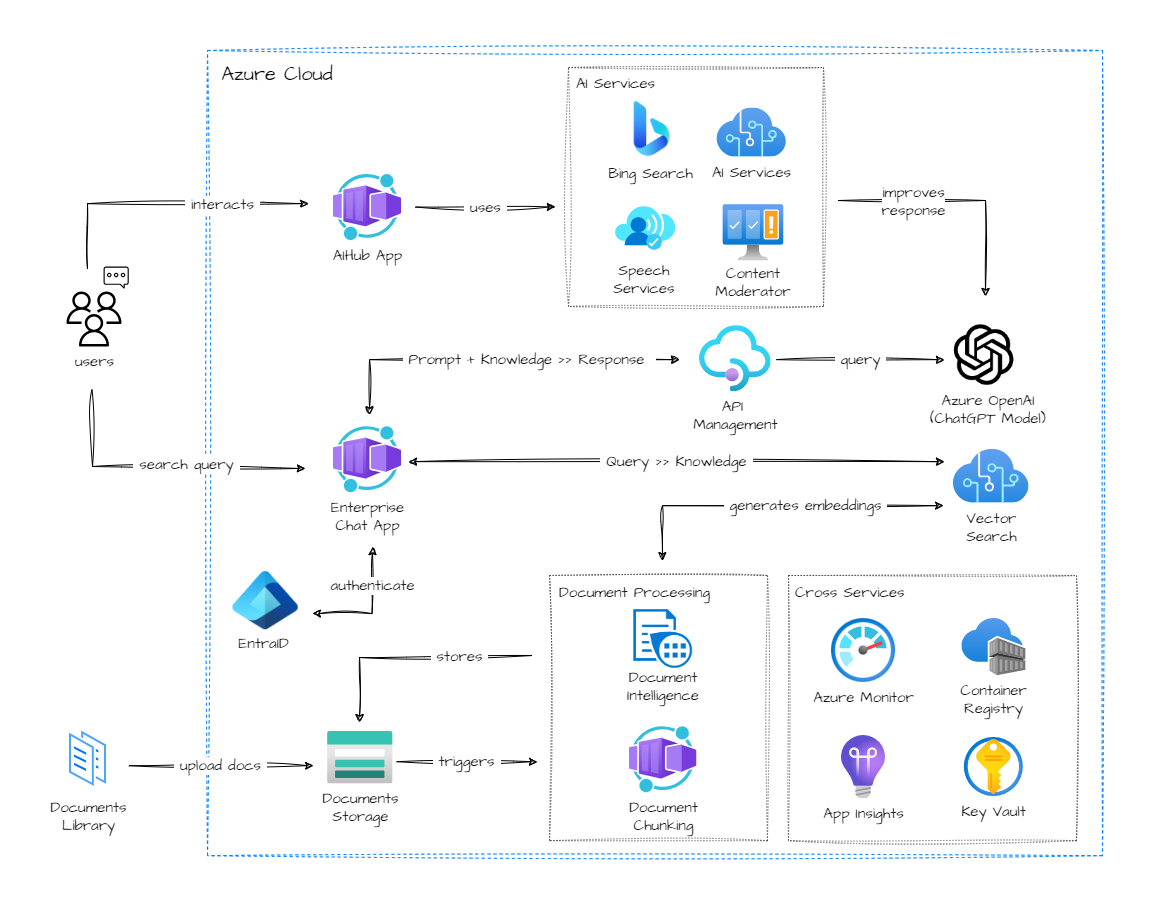

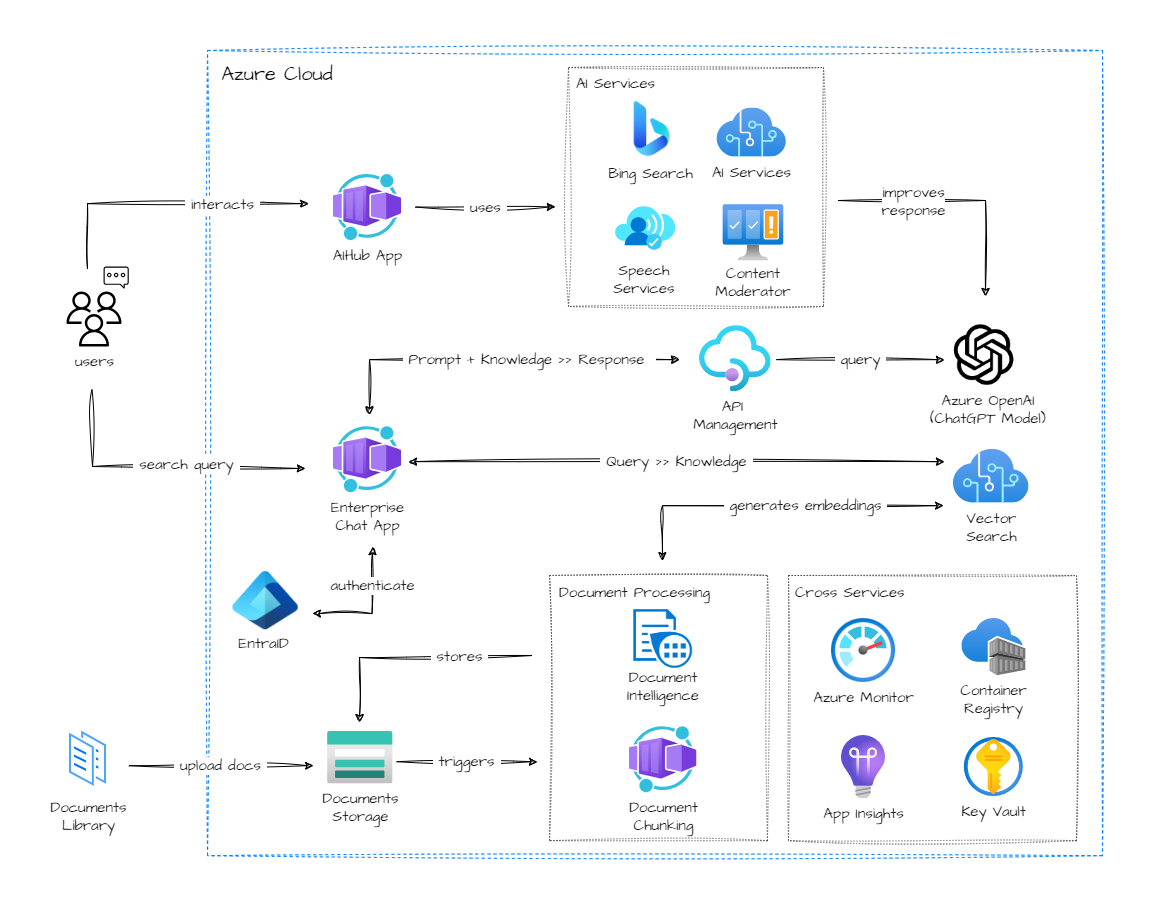

The following diagram shows the high-level architecture of the AI Hub solution:

This delivery involves the following steps:

By the end of the AI Hub delivery, stakeholders will have a strong and innovative perspective of some AOAI services which can be used separately or together to improve their document retrieval efficiency, enhance their user experience, increase their productivity, share their knowledge, gain data insights and analytics, and comply with security standards.

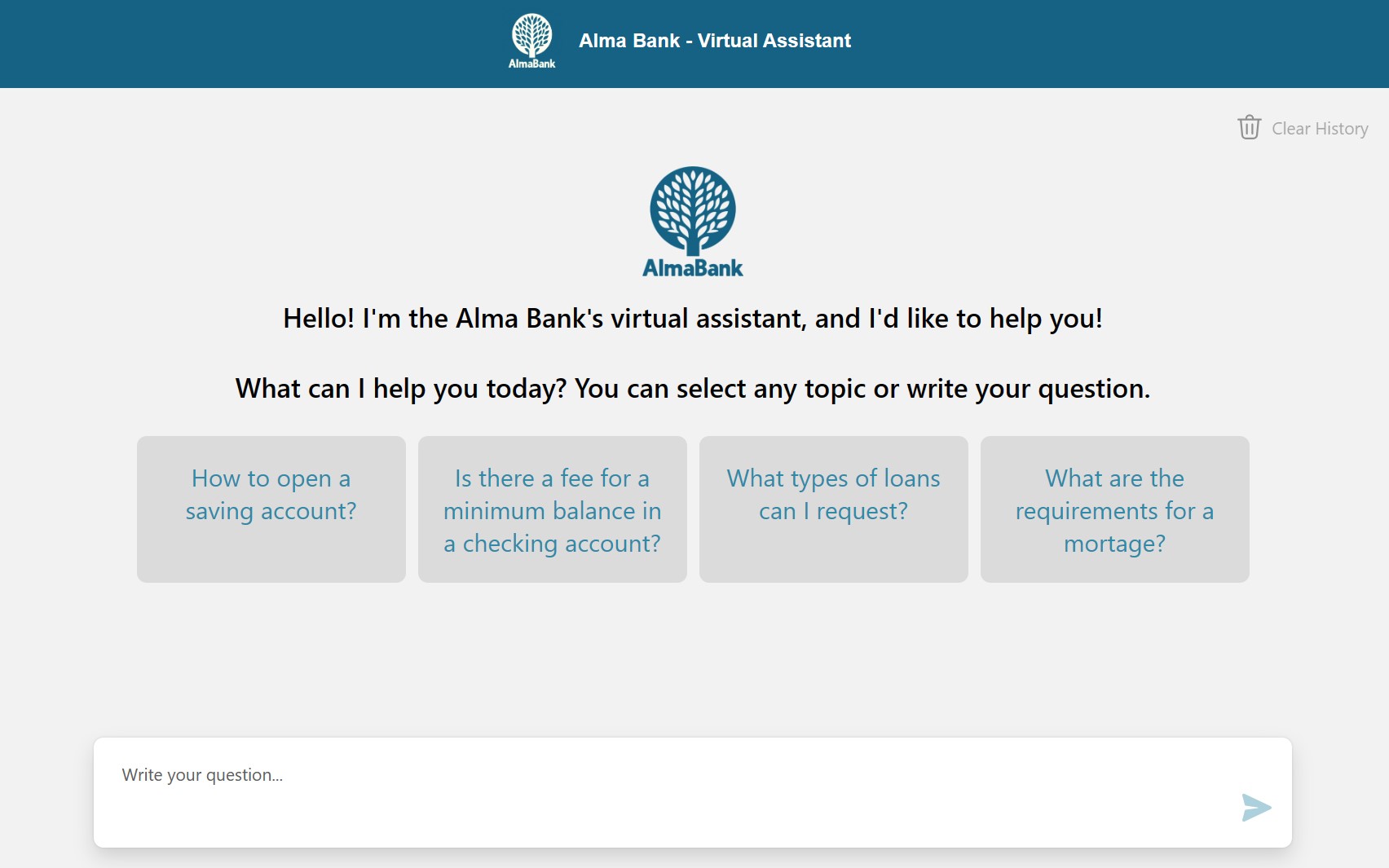

Feel the power of artificial intelligence and cloud computing to provide a smart and scalable document search and retrieval solution. The solution uses Azure OpenAI, Cognitive Search, Container Apps, Application Insights, and Azure API Management to create a chat interface that can answer user queries with relevant documents, suggested follow-up questions, and citations. The solution also allows users to upload custom data files and perform vector search using semantic or hybrid methods. Additionally, the solution supports extensibility through plugins, charge back functionality, security features such as authentication and authorization, monitoring capabilities, and scalability options.

AI Hub uses Azure Cognitive Search to serve an index of vectorized content that our LLM (ChatGPT) uses to respond to the user’s query.

Learn more at the official documentation: What is Azure Cognitive Search?
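To make the idea of searching vectorized content more concrete, here is a minimal sketch of vector ranking by cosine similarity. It does not call Azure Cognitive Search; the file names, the three-dimensional vectors, and the helper names `cosine_similarity` and `top_k` are all illustrative assumptions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vector, index, k=2):
    """Return the k document ids whose vectors are most similar to the query."""
    scored = [(cosine_similarity(query_vector, vec), doc_id)
              for doc_id, vec in index.items()]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


# Toy 3-dimensional "embeddings" (hypothetical values); real embedding
# vectors have hundreds or thousands of dimensions.
toy_index = {
    "vacation-policy.pdf": [0.9, 0.1, 0.0],
    "expense-report.pdf": [0.1, 0.9, 0.1],
    "holiday-calendar.pdf": [0.8, 0.2, 0.1],
}
query = [1.0, 0.0, 0.0]
print(top_k(query, toy_index))
```

In a real deployment the search service stores the vectors and performs this ranking at scale; the sketch only shows why documents with similar embeddings are returned first.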

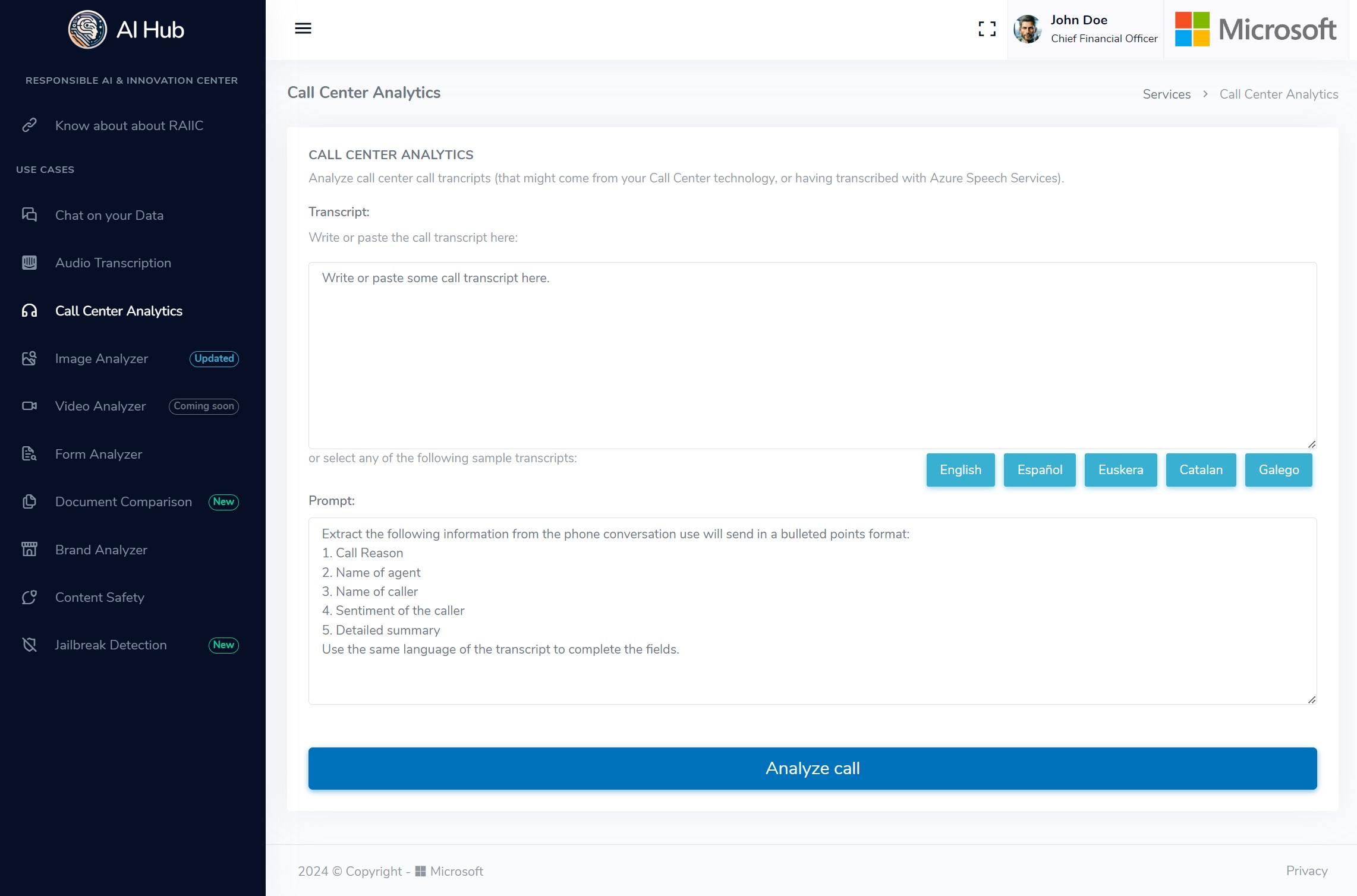

Analyze call center call transcripts (which might come from your call center technology or be transcribed with Azure Speech Services).

Use the predefined template to analyze the call center call transcript, generate a new one, and customize the query to analyze the transcript of your call center.

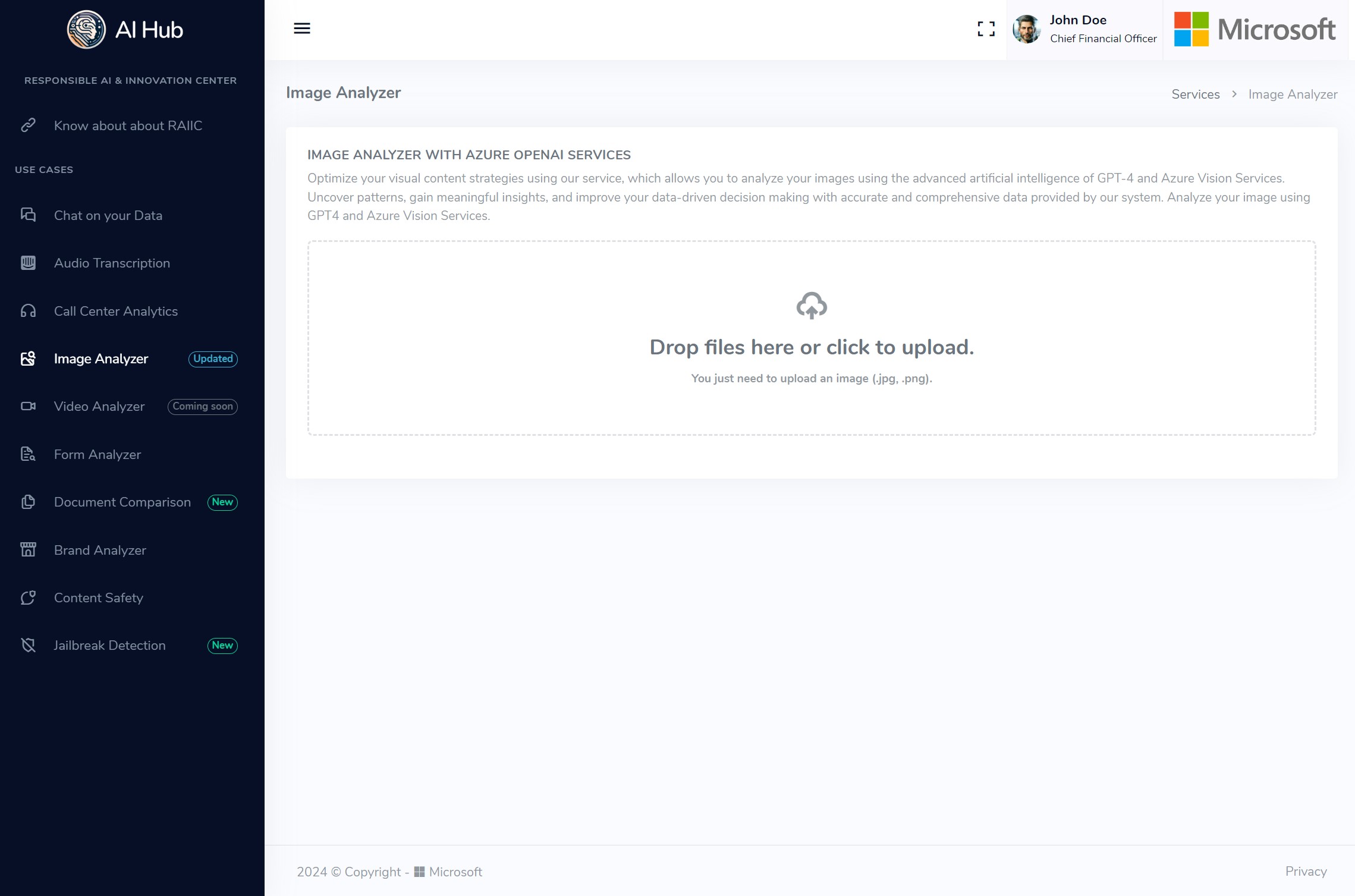

Analyze your images using GPT-4 and Azure Vision Services. Upload an image and the Image Analyzer will analyze it using Azure Vision Services. Supported formats: .jpg, .png.

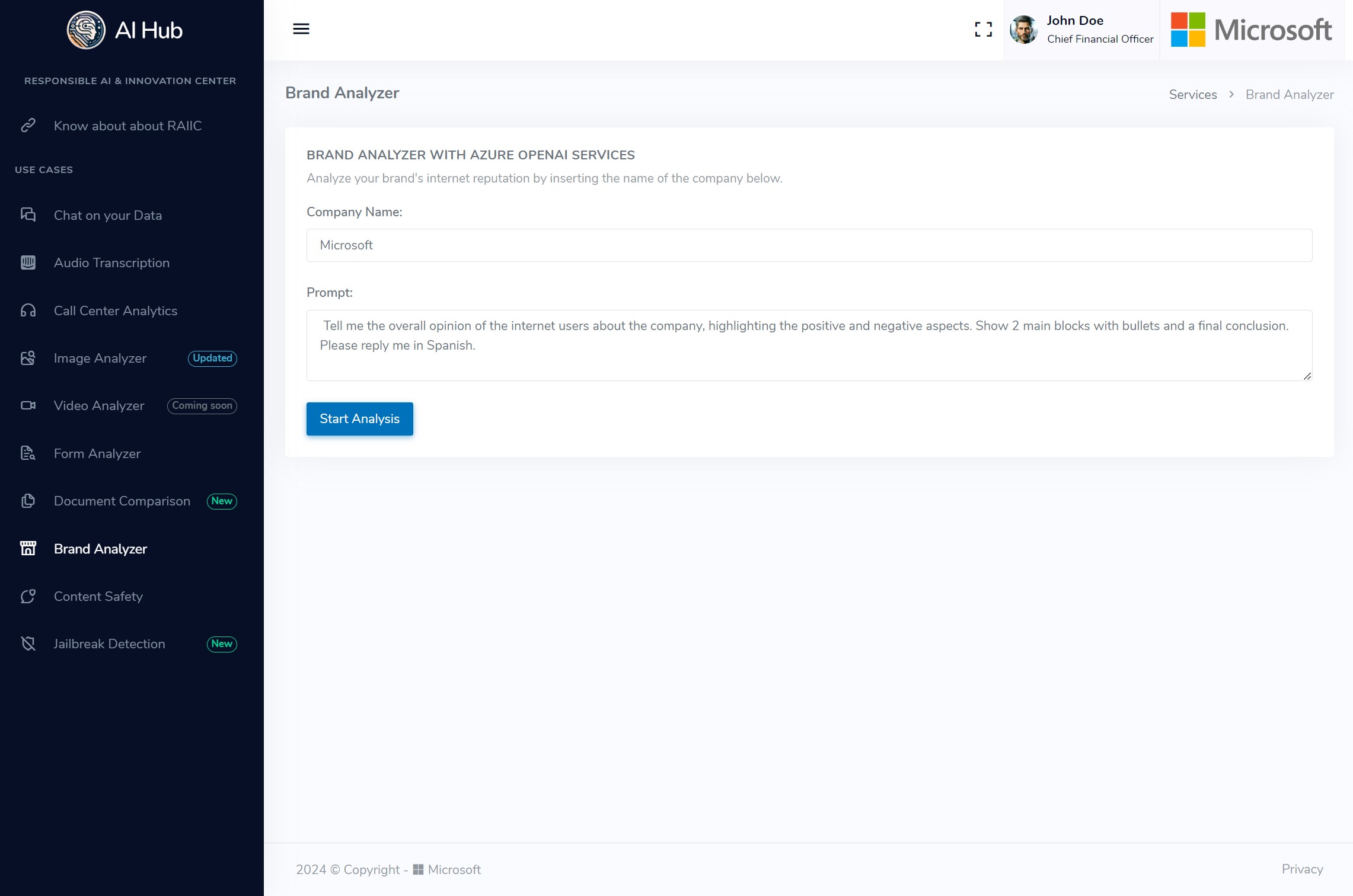

Analyze your brand’s internet reputation by inserting the name of the company.

Just enter the name of the company and the Brand Analyzer will search Bing for mentions of the company and analyze their sentiment. You can also search for a specific product or service of the company by modifying the prompt to customize the query.

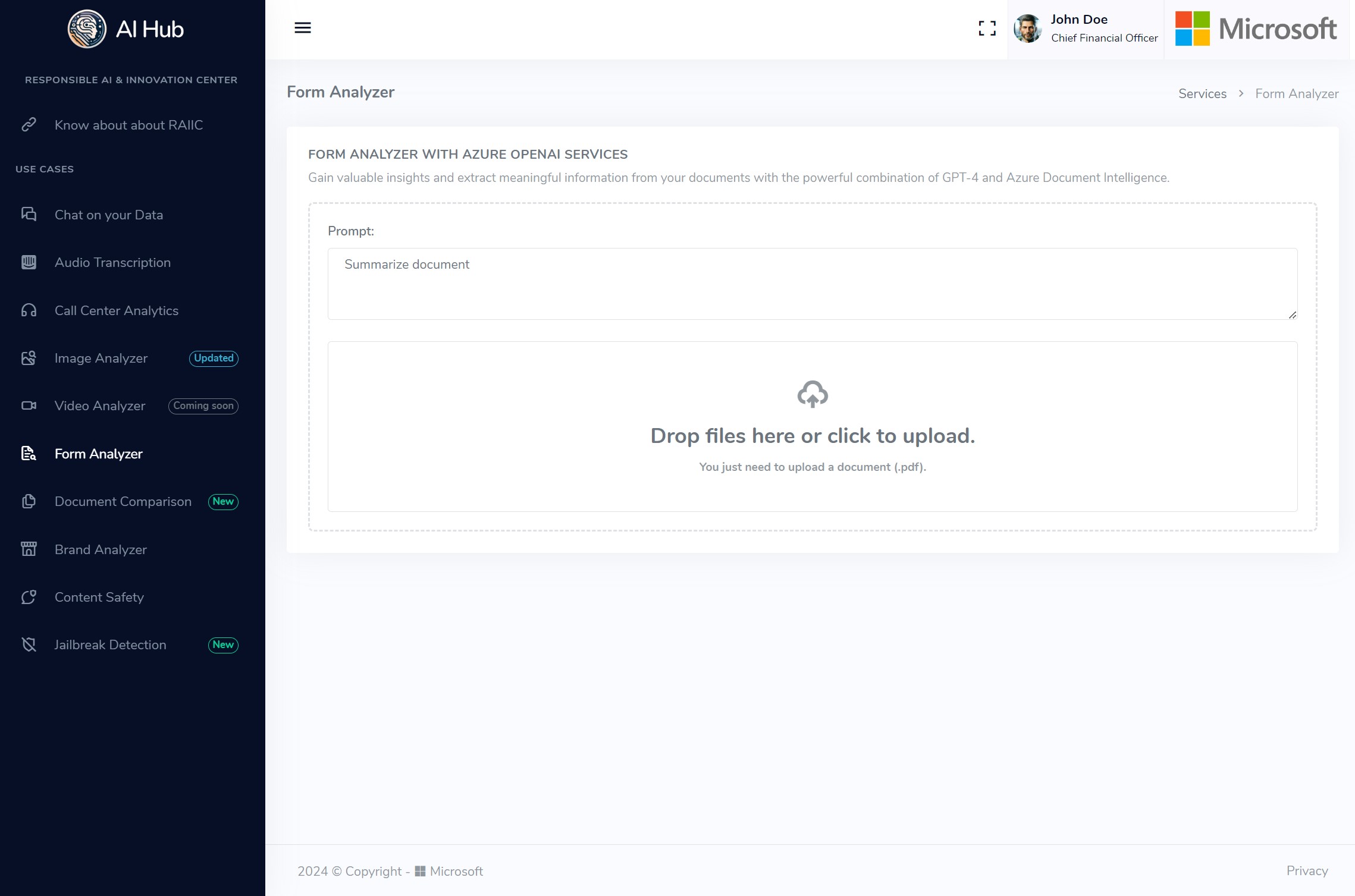

Analyze and chat with your documents using GPT-4 and Azure Document Intelligence.

Just upload a .pdf document and the Form Analyzer will extract the text and analyze it with Azure Document Intelligence, and then you can chat with the document using GPT-4. You can also modify the prompt to extract custom information from the document.

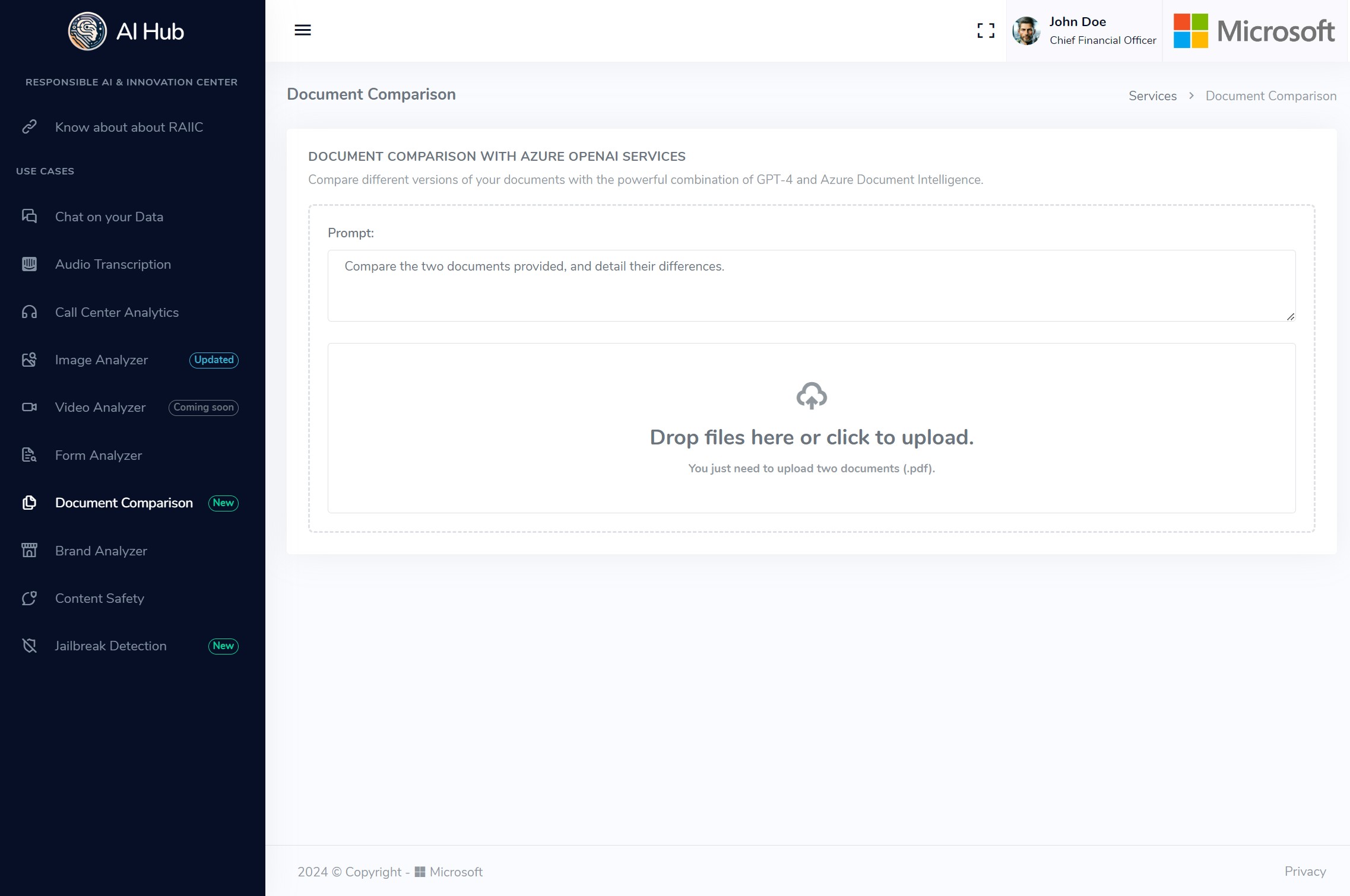

Compare different versions of your documents with the powerful combination of GPT-4 and Azure Document Intelligence.

Just upload two .pdf documents to extract their content using the OCR capabilities of Azure Document Intelligence, and then you can ask about the differences between the two documents with the power of GPT-4.

In today’s digital age, online platforms are increasingly becoming hubs for user-generated content, ranging from text and images to videos. While this surge in content creation fosters a vibrant online community, it also brings forth challenges related to content moderation and ensuring a safe environment for users. Azure AI Content Safety offers a robust solution to address these concerns, providing a comprehensive set of tools to analyze and filter content for potential safety risks.

Use Case Scenario: Social Media Platform Moderation

Consider a popular social media platform with millions of users actively sharing diverse content daily. To maintain a positive user experience and adhere to community guidelines, the platform employs Azure AI Content Safety to automatically moderate and filter user-generated content.

Image Moderation: Azure AI Content Safety capabilities are leveraged to analyze images uploaded by users. The system can detect and filter out content that violates community standards, such as explicit or violent imagery. This helps prevent the dissemination of inappropriate content and ensures a safer environment for users of all ages.

Text Moderation: The Text Moderator is employed to analyze textual content, including comments, captions, and messages. The platform can set up filters to identify and block content containing hate speech, harassment, or other forms of harmful language. This not only protects users from offensive content but also contributes to fostering a positive online community.

Customization and Adaptability: Azure AI Content Safety allows platform administrators to customize the moderation rules based on specific community guidelines and evolving content standards. This adaptability ensures that the moderation system remains effective and relevant over time, even as online trends and user behaviors change.

Real-time Moderation: The integration of Azure AI services enables real-time content moderation. As users upload content, the system quickly assesses and filters it before making it publicly available. This swift response time is crucial in preventing the rapid spread of inappropriate or harmful content.

User Reporting and Feedback Loop: Azure AI Content Safety facilitates a user reporting and feedback loop. If a user comes across potentially harmful content that was not automatically detected, they can report it. This feedback helps improve the system’s accuracy and adaptability, creating a collaborative approach to content safety.

By implementing Azure AI Content Safety, the social media platform can significantly enhance its content moderation efforts, providing users with a safer and more enjoyable online experience while upholding community standards.

AI Hub uses Azure AI Content Safety to moderate the content of the user’s query, and to moderate the content of the response generated by our LLM (ChatGPT).

Learn more at the official documentation: What is Azure AI Content Safety?

Start Right now: Azure AI Content Safety Studio

OpenAI Plugins are powerful tools that connect systems (like ChatGPT) to third-party applications. Think of a plugin as a new skill you teach your phone or computer: a mini-program that lets your software do something new, such as asking a chatbot questions. These plugins enable AI applications powered by LLMs to interact with APIs defined by developers, enhancing their capabilities and allowing them to perform a wide range of actions beyond what the underlying AI model was trained to do.

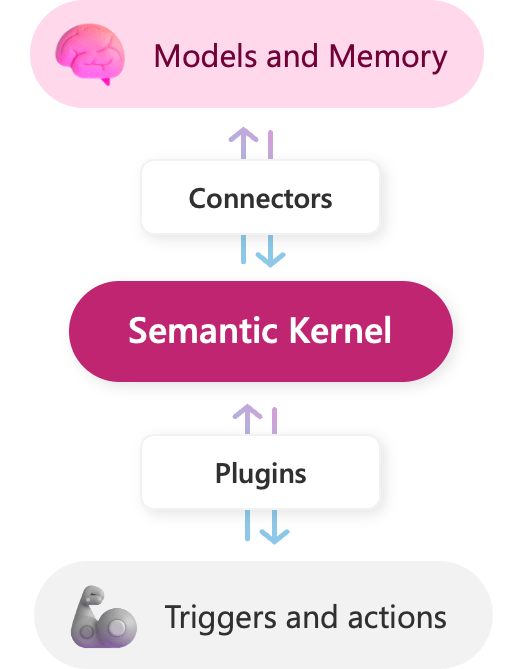

On the other hand, Microsoft’s Semantic Kernel is an open-source SDK that lets you easily build AI-related projects, especially OpenAI plugins. It provides the following features:

Interoperability: Semantic Kernel has adopted the OpenAI plugin specification as the standard for plugins. This creates an ecosystem of interoperable plugins that can be used across all major AI apps and services like ChatGPT, Bing, and Microsoft 365. Any plugins you build with Semantic Kernel can be exported, so they are usable in these platforms.

Importing Plugins: Semantic Kernel makes it easy to import plugins defined with an OpenAI specification. Your code can use Semantic Kernel to leverage these plugins into your application.

Native and Prompt Functions: In any plugin, you can create two types of functions: prompts and native functions. With native functions, you can use C# or Python code to build features that manipulate data or perform other operations beyond the capabilities of an LLM. With prompts, you can create reusable semantic instructions for a wide variety of LLMs, not just OpenAI, with templates that handle variables, conditionals, and loops.
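The difference between the two function types can be sketched in plain Python. This is only an illustration and does not use the Semantic Kernel SDK: the `{{$name}}` placeholder style mirrors Semantic Kernel's prompt template syntax, but `render_prompt`, `word_count`, and `SUMMARIZE_PROMPT` are hypothetical names written for this example.

```python
import re


def word_count(text: str) -> int:
    """Native function: ordinary code, no model involved."""
    return len(text.split())


# Prompt function: a reusable instruction with variables to fill in.
SUMMARIZE_PROMPT = (
    "Summarize the following text in {{$max_words}} words or fewer:\n{{$input}}"
)


def render_prompt(template: str, variables: dict) -> str:
    """Replace each {{$name}} placeholder with its value (hand-written stand-in)."""
    return re.sub(
        r"\{\{\$(\w+)\}\}",
        lambda match: str(variables[match.group(1)]),
        template,
    )


text = "Semantic Kernel combines prompts with native code."
# The native function computes a value that is fed into the prompt.
prompt = render_prompt(
    SUMMARIZE_PROMPT, {"input": text, "max_words": word_count(text)}
)
print(prompt)
```

The rendered prompt would then be sent to an LLM; combining both kinds of function in this way is the pattern the plugin examples rely on.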

Use this plugin to analyze a call using the provided transcript. It offers functionality that is quite similar to the use case example of Call Center Analytics.

To test this plugin, send a POST request to its REST API at /plugins/transcript with the following request body:

transcript: the text with the call transcript.

Upon successful execution, OpenAI will return a response with the following information extracted and formatted as bullet points:

Please note that the information provided may vary depending on the content of the supplied call transcript.
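As a rough sketch, the request described above could be built with Python's standard library as follows. The base URL is a placeholder, and the JSON encoding of the body is an assumption; only the `/plugins/transcript` path, the POST method, and the `transcript` field come from this guide.

```python
import json
import urllib.request

# Hypothetical base URL; replace it with the address of your own AI Hub deployment.
BASE_URL = "https://my-aihub.example.com"


def build_transcript_request(transcript: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for the transcript plugin."""
    # Assumption: the plugin accepts a JSON body with a single "transcript" field.
    body = json.dumps({"transcript": transcript}).encode("utf-8")
    return urllib.request.Request(
        url=f"{BASE_URL}/plugins/transcript",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_transcript_request("Agent: Hello, how can I help you today?")
print(request.get_method(), request.full_url)

# To actually send it (requires a running deployment):
# with urllib.request.urlopen(request) as response:
#     print(response.read().decode("utf-8"))
```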

The following diagram shows the high-level architecture of the AI Hub solution:

To deploy the AI Hub on your own Azure subscription, please follow these instructions.

Before deploying the Azure AI Hub, please ensure the following prerequisites are met:

variables.tf file to create the appropriate .tfvars file with the values you prefer. Note that some elements in the architecture are optional; for example, private endpoints are not deployed by default unless the use_private_endpoints variable is set to true.

2.56.0 or higher installed, or using the Azure Cloud Shell.

Run the following command to deploy the infrastructure:

az login

az account set -s <target subscription_id or subscription_name>

powershell -Command "iwr -useb https://raw.githubusercontent.com/azure/aihub/master/install/install.ps1 | iex"

For installation on Linux, we recommend using Ubuntu 22.04 or a newer version. Before executing the installation script, ensure that the following applications are installed and up-to-date:

curl, version 7.x or higher.

jq, version 1.6 or higher.

unzip, version 6.x or higher.

To deploy the infrastructure, execute the following command:

az login

az account set -s <target subscription_id or subscription_name>

bash -c "$(curl -fsSL https://raw.githubusercontent.com/azure/aihub/master/install/install_linux.sh)"

Azure OpenAI Service provides REST API access to OpenAI’s powerful language models, including the GPT-4, GPT-35-Turbo, and Embeddings model series. In addition, the new GPT-4 and GPT-35-Turbo model series have now reached general availability. These models can be easily adapted to your specific task, including but not limited to content generation, summarization, semantic search, and natural language to code translation. Users can access the service through REST APIs, the Python SDK, or the web-based interface in Azure OpenAI Studio.

Important concepts about Azure OpenAI:

Models available

Note that not all models are available in all Azure regions. For regional availability, check the documentation: Model summary table and region availability

Deployment: once you instantiate a specific model, it becomes available as a deployment. You can create and delete deployments of available models as you wish. This is managed through the AOAI Studio.

Quotas: the quotas available in Azure are allocated per model and per region, within a subscription. Learn more about quotas. In the documentation you can find best practices to manage your quota.

AI Hub uses an Azure OpenAI Embeddings model to vectorize the content and a ChatGPT model to converse with that content.

More information at the official documentation: What is Azure OpenAI
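Because requests go to a deployment rather than to a model name, the deployment name appears in the REST URL. The sketch below builds such a chat completions request without sending it. The resource name, deployment name, and key are placeholders, and the `api-version` value is one published version used here as an assumption; check the official documentation for the version you should target.

```python
import json


def build_chat_request(resource_name, deployment_name, api_key, user_message,
                       api_version="2024-02-01"):
    """Return the URL, headers, and JSON body for a chat completions call."""
    url = (
        f"https://{resource_name}.openai.azure.com/openai/deployments/"
        f"{deployment_name}/chat/completions?api-version={api_version}"
    )
    headers = {"Content-Type": "application/json", "api-key": api_key}
    body = json.dumps({"messages": [{"role": "user", "content": user_message}]})
    return url, headers, body


# Placeholder values for illustration only.
url, headers, body = build_chat_request(
    resource_name="my-resource",
    deployment_name="my-gpt-deployment",
    api_key="<your-api-key>",
    user_message="Summarize our vacation policy.",
)
print(url)
```

Deleting and recreating a deployment under a different name changes this URL, which is why applications usually keep the deployment name in configuration.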

Azure AI Content Safety detects harmful user-generated and AI-generated content in applications and services. Azure AI Content Safety includes text and image APIs that allow you to detect material that is harmful. We also have an interactive Content Safety Studio that allows you to view, explore and try out sample code for detecting harmful content across different modalities.

Moderation works for both text and image content. It can be used to detect adult content, racy content, offensive content, and more. The service can be used to moderate content from a variety of sources, such as social media, public-facing communication tools, and enterprise applications.

Azure AI Content Safety Studio is an online tool designed to handle potentially offensive, risky, or undesirable content using cutting-edge content moderation ML models. It provides templates and customized workflows, enabling users to choose and build their own content moderation system. Users can upload their own content or try it out with provided sample content.

AI Hub uses Azure AI Content Safety to moderate the content of the user’s query, and to moderate the content of the response generated by our LLM (ChatGPT).

Learn more at the official documentation: What is Azure AI Content Safety?
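Moderating both the query and the response can be sketched as a simple gate around the model call. This is not AI Hub's actual implementation: `fake_analyze` and `fake_generate` are stand-ins for the Content Safety and LLM calls, the severity threshold is an illustrative assumption, and the `categoriesAnalysis` shape follows the text analysis response format as an assumption about the API version in use.

```python
def is_allowed(analysis: dict, max_severity: int = 2) -> bool:
    """Allow content only if every category is at or below the threshold."""
    return all(
        item["severity"] <= max_severity
        for item in analysis.get("categoriesAnalysis", [])
    )


def moderated_chat(user_query, analyze, generate):
    """Check the query, call the model, then check the response."""
    if not is_allowed(analyze(user_query)):
        return "Your question was blocked by content moderation."
    answer = generate(user_query)
    if not is_allowed(analyze(answer)):
        return "The generated answer was blocked by content moderation."
    return answer


def fake_analyze(text):
    """Stand-in for the moderation service (hypothetical keyword rule)."""
    severity = 6 if "attack" in text.lower() else 0
    return {"categoriesAnalysis": [{"category": "Violence", "severity": severity}]}


def fake_generate(query):
    """Stand-in for the LLM call."""
    return f"Here is what the documents say about: {query}"


print(moderated_chat("vacation policy", fake_analyze, fake_generate))
print(moderated_chat("how to attack someone", fake_analyze, fake_generate))
```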

Azure Cognitive Search (formerly known as “Azure Search”) is a cloud search service that gives developers infrastructure, APIs, and tools for building a rich search experience over private, heterogeneous content in web, mobile, and enterprise applications.

Search is foundational to any app that surfaces text to users, where common scenarios include catalog or document search, online retail apps, or data exploration over proprietary content. When you create a search service, you’ll work with the following capabilities:

AI Hub uses Azure Cognitive Search to serve an index of vectorized content that our LLM (ChatGPT) uses to respond to the user’s query.

Learn more at the official documentation: What is Azure Cognitive Search?

Learning Path: Implement knowledge mining with Azure Cognitive Search
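Hybrid search combines a keyword ranking and a vector ranking into a single result list. One commonly used way to merge such rankings is Reciprocal Rank Fusion (RRF); the sketch below illustrates the technique in plain Python. The document names and the constant `k=60` are assumptions for illustration, and the sketch makes no claim about how the service is configured in AI Hub.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists: each document scores sum(1 / (k + rank))."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken alphabetically for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from a keyword query and a vector query.
keyword_results = ["policy.pdf", "faq.pdf", "handbook.pdf"]
vector_results = ["faq.pdf", "benefits.pdf", "policy.pdf"]

print(reciprocal_rank_fusion([keyword_results, vector_results]))
```

Documents that appear near the top of both lists rise to the top of the fused list, even if neither ranking placed them first.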

Azure Container Apps is a managed serverless container service that enables executing code in containers without the overhead of managing virtual machines, orchestrators, or adopting a highly opinionated application framework.

Common uses of Azure Container Apps include:

AI Hub uses Azure Container Apps to deploy the chat user interface that will answer user queries based on the company’s documents.

Learn more about Azure Container Apps: Azure Container Apps documentation

Azure Functions is a serverless solution that allows you to write less code, maintain less infrastructure, and save on costs. Instead of worrying about deploying and maintaining servers, the cloud infrastructure provides all the up-to-date resources needed to keep your applications running.

Functions provides a comprehensive set of event-driven triggers and bindings that connect your functions to other services without having to write extra code. You focus on the code that matters most to you, in the most productive language for you, and Azure Functions handles the rest.

AI Hub uses Azure Functions to split the documents’ text into chunks and create embeddings that are added to the Azure Cognitive Search index.

Learn more about Azure Functions: What is Azure Functions? For the best experience with the Functions documentation, choose your preferred development language from the list of native Functions languages at the top of the article.
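The chunking step mentioned above can be sketched as a word-based splitter with overlap between consecutive chunks. This is a generic illustration, not the code AI Hub runs in its function: the `chunk_text` name, the word-based splitting, and the default sizes are all assumptions.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into chunks of chunk_size words that overlap by overlap words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current chunk reaches the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_text(sample, chunk_size=4, overlap=1):
    print(chunk)
```

Each chunk would then be sent to the embeddings model and stored, together with its vector, in the search index. The overlap keeps sentences that straddle a chunk boundary retrievable from either side.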

Azure Blob Storage is Microsoft’s object storage solution for the cloud. Blob Storage is optimized for storing massive amounts of unstructured data. Unstructured data is data that doesn’t adhere to a particular data model or definition, such as text or binary data.

Blob Storage is designed for:

AI Hub uses Blob Storage to store the documents (PDFs) that will be then vectorized, indexed or analyzed.

Learn more about Azure Blob Storage: What is Azure Blob Storage?

Semantic Kernel is an open-source SDK that lets you easily combine AI services like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C# and Python. By doing so, you can create AI apps that combine the best of both worlds.

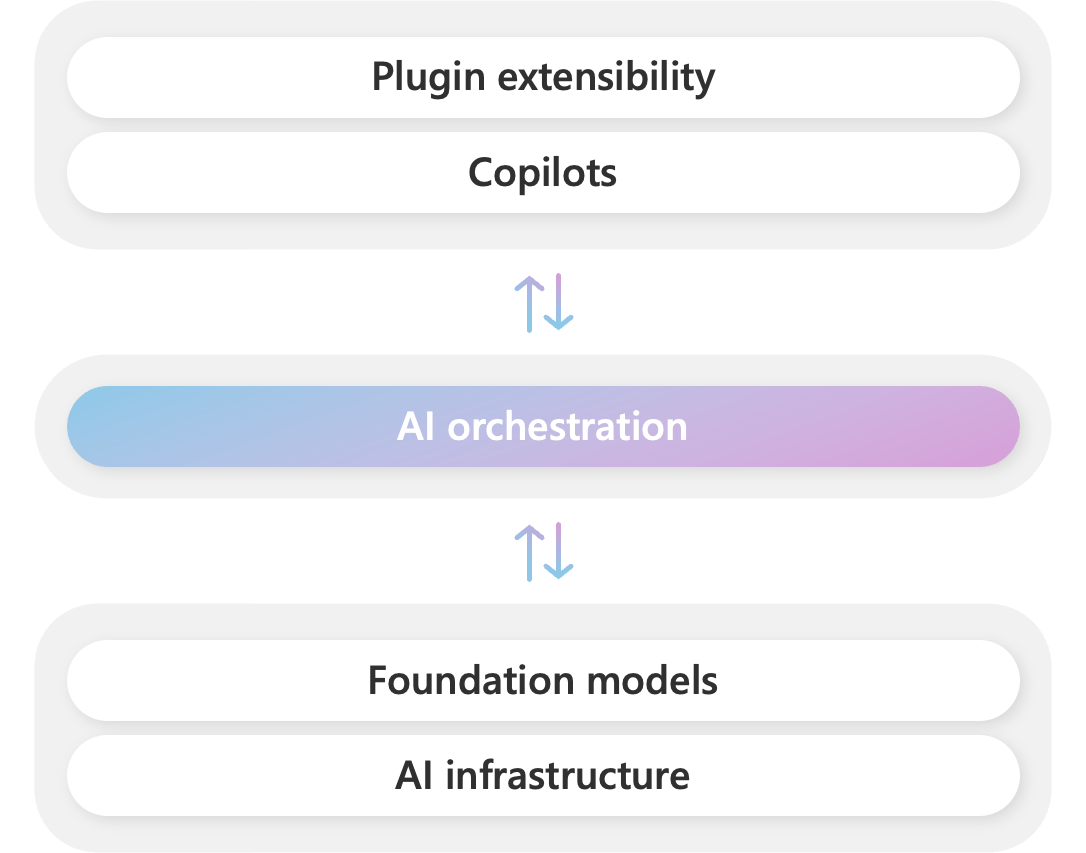

Microsoft powers its Copilot system with a stack of AI models and plugins. At the center of this stack is an AI orchestration layer that allows us to combine AI models and plugins together to create brand new experiences for users.

To help developers build their own Copilot experiences on top of AI plugins, we have released Semantic Kernel, a lightweight open-source SDK that allows you to orchestrate AI plugins. With Semantic Kernel, you can leverage the same AI orchestration patterns that power Microsoft 365 Copilot and Bing in your own apps, while still leveraging your existing development skills and investments.

At present, AI Hub does not use Semantic Kernel directly in its use case examples. However, it demonstrates its usage through two specific examples that show how easy it is to create OpenAI plugins using Semantic Kernel as an SDK.

Here are the available plugin examples:

Call Analysis: This plugin uses Semantic Kernel to implement features similar to those demonstrated in the Call Center Analytics use case.

Financial Product Comparison: This plugin employs Semantic Kernel to combine native and prompt functions to compare a specific financial product with others in the market. It combines prompts with the Web Search Engine Plugin, which is further integrated with the Bing Search connector.

For more information, please refer to the OpenAI Plugins section. You can also learn more about Semantic Kernel in its official documentation.

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.microsoft.com.

When you submit a pull request, a CLA-bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., label, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repositories using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.