Activate GenAI with Azure

Activate GenAI with Azure! — Talk with your Enterprise data with Azure OpenAI and Cognitive Search.

This delivery guide will help you build ChatGPT-like experiences over your enterprise data using the Retrieval Augmented Generation (RAG) pattern. Under the hood, the solution uses Azure OpenAI Service to access the ChatGPT model (gpt-35-turbo), and Azure Cognitive Search for data indexing and retrieval.
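As a rough illustration of the Retrieval Augmented Generation flow described above, the sketch below vectorizes the question, retrieves matching chunks, and asks the chat model to answer only from them. The `embed`, `search`, and `chat` callables and the `sourcefile`/`content` field names are hypothetical stand-ins for the Azure OpenAI and Azure Cognitive Search calls. This is not the accelerator's actual code.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Answer:
    text: str
    citations: list[str]


def answer_question(
    question: str,
    embed: Callable[[str], list[float]],               # hypothetical: wraps the embeddings deployment
    search: Callable[[str, list[float]], list[dict]],  # hypothetical: wraps a Cognitive Search query
    chat: Callable[[list[dict]], str],                 # hypothetical: wraps the chat (gpt-35-turbo) deployment
    top_k: int = 3,
) -> Answer:
    """Retrieve relevant chunks, then generate an answer grounded in them."""
    # 1. Retrieval: vectorize the question and fetch the most relevant chunks.
    hits = search(question, embed(question))[:top_k]
    # 2. Augmentation: put the chunks, labelled by source file, into the prompt.
    sources = "\n".join(f"[{h['sourcefile']}]: {h['content']}" for h in hits)
    messages = [
        {"role": "system",
         "content": "Answer using only the sources below and cite them as [file].\n" + sources},
        {"role": "user", "content": question},
    ]
    # 3. Generation: the chat model answers from the supplied sources.
    reply = chat(messages)
    # Keep only the sources the model actually cited.
    cited = [h["sourcefile"] for h in hits if f"[{h['sourcefile']}]" in reply]
    return Answer(reply, cited)


# Usage with fake services, so the flow can be run without Azure:
result = answer_question(
    "How many vacation days do I get?",
    embed=lambda text: [0.1, 0.2, 0.3],
    search=lambda text, vector: [
        {"sourcefile": "handbook.pdf", "content": "Employees get 20 vacation days."},
        {"sourcefile": "benefits.pdf", "content": "Health insurance starts on day one."},
    ],
    chat=lambda messages: "You get 20 vacation days [handbook.pdf].",
)
print(result.citations)  # ['handbook.pdf']
```

Passing the services in as plain callables keeps the retrieve, augment, and generate steps visible and lets each step be tested with fakes.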

1 - Overview

Activate GenAI with Azure — A smart and scalable document retrieval solution

Description

Activate GenAI with Azure is a new delivery that leverages the power of artificial intelligence and cloud computing to provide a smart and scalable document search and retrieval solution. The solution uses Azure OpenAI, Cognitive Search, Container Apps, Application Insights, and Azure API Management to create a chat interface that can answer user queries with relevant documents, suggested follow-up questions, and citations. The solution also allows users to upload custom data files and perform vector search using semantic or hybrid methods. Additionally, the solution supports extensibility through plugins, chargeback functionality, security features such as authentication and authorization, monitoring capabilities, and scalability options.
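Hybrid queries in Azure Cognitive Search merge the full-text (keyword) results and the vector results using Reciprocal Rank Fusion (RRF). The plain-Python sketch below shows the idea: each document earns 1/(k + rank) from every result list it appears in, and the sums decide the final order. The constant k=60 is the value commonly used with RRF and the document IDs are made up; the service's internal scoring details may differ.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse several ranked lists of document IDs into one ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. Higher is better.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by descending score; ties are broken by document ID for determinism.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


keyword_hits = ["doc3", "doc1", "doc7"]  # e.g. full-text (BM25) results
vector_hits = ["doc1", "doc5", "doc3"]   # e.g. nearest neighbors by embedding
fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print([doc_id for doc_id, _ in fused])  # ['doc1', 'doc3', 'doc5', 'doc7']
```

Documents found by both methods (`doc1`, `doc3`) rise to the top even if neither method ranked them first, which is why hybrid search tends to be more robust than either method alone.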

Pre-requisites

To use this solution, you will need the following:

- An Azure subscription

- A user, service principal, or managed identity with the following permissions:

  - Contributor role on the Azure subscription

- Azure CLI v2.53.0 or later

- Terraform v1.6.0 or later
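To confirm the tool prerequisites before deploying, you can compare the installed versions against the minimums listed above. The sketch below assumes `az` and `terraform` are on your `PATH` (on Windows, `az` is a `.cmd` wrapper that may need `shell=True`) and reads the JSON printed by `az version` and `terraform version -json`.

```python
import json
import subprocess

# Minimum versions from the prerequisites list.
MINIMUMS = {"azure-cli": "2.53.0", "terraform": "1.6.0"}


def parse_version(version: str) -> tuple[int, ...]:
    """Turn '2.53.0' (or 'v1.6.0') into (2, 53, 0).

    Pre-release/build suffixes such as '-beta1' are ignored, so '1.6.0-beta1'
    is treated as 1.6.0.
    """
    core = version.strip().lstrip("v").split("-")[0].split("+")[0]
    parts = [int(p) for p in core.split(".")]
    return tuple((parts + [0, 0, 0])[:3])


def meets_minimum(installed: str, minimum: str) -> bool:
    # Compare numerically, so 2.9.0 < 2.53.0 (a string comparison would get this wrong).
    return parse_version(installed) >= parse_version(minimum)


def _run_json(cmd: list[str]) -> dict:
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return json.loads(result.stdout)


def check_prerequisites() -> dict[str, bool]:
    """Return {tool: True/False} for the locally installed CLIs."""
    installed = {
        "azure-cli": _run_json(["az", "version", "--output", "json"])["azure-cli"],
        "terraform": _run_json(["terraform", "version", "-json"])["terraform_version"],
    }
    return {tool: meets_minimum(v, MINIMUMS[tool]) for tool, v in installed.items()}
```

Calling `check_prerequisites()` runs both CLIs and reports, per tool, whether the installed version is recent enough.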

High-level Architecture

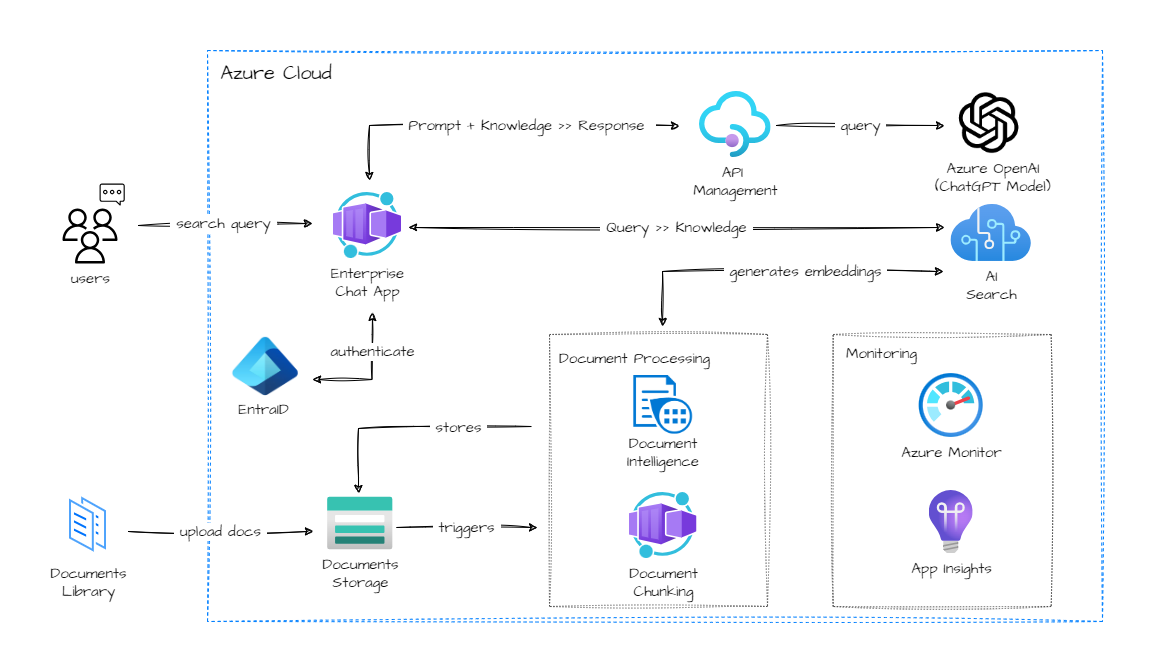

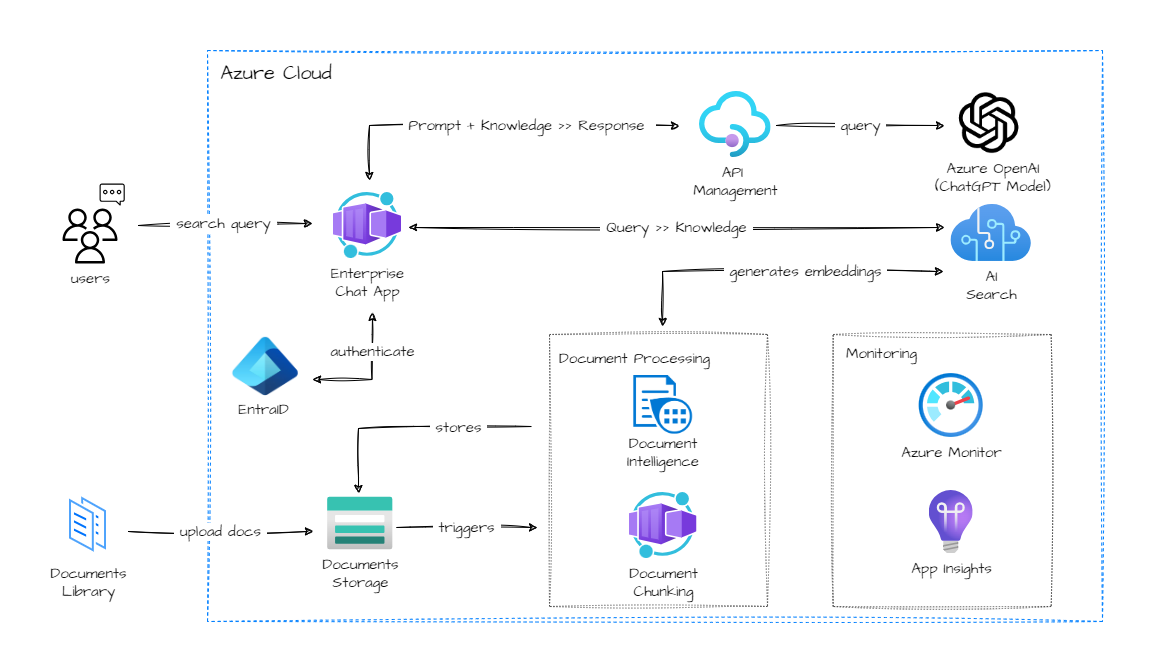

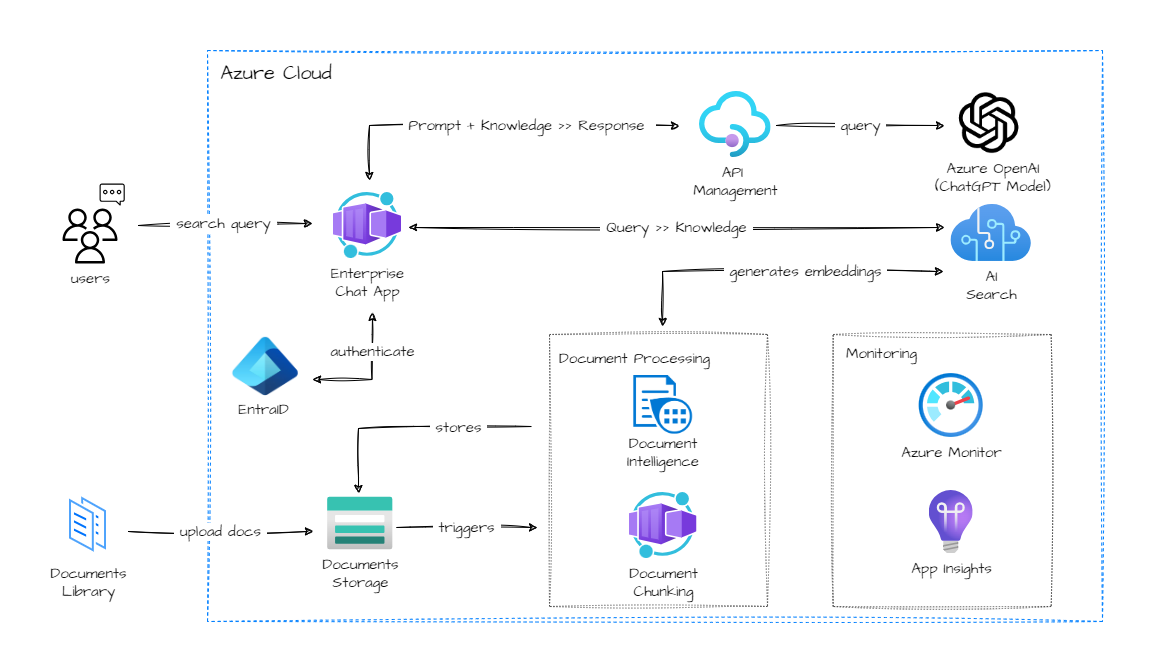

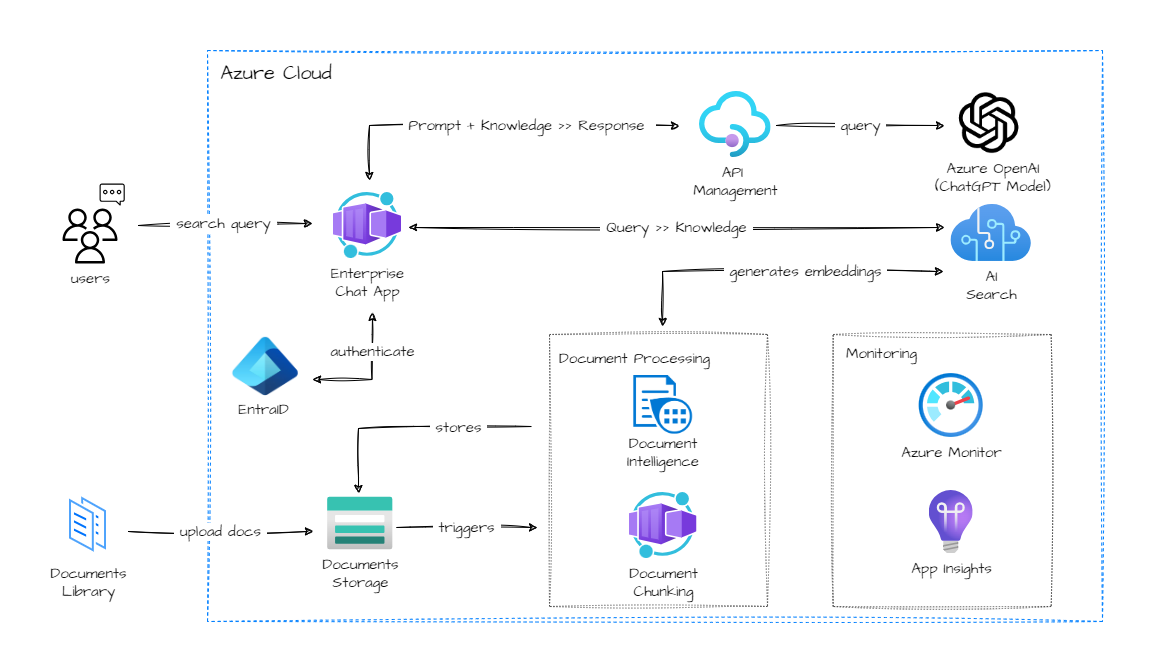

The following diagram shows the high-level architecture of the Activate GenAI with Azure solution:

Objectives

By the end of this delivery, you will be able to:

- Configure and deploy the Activate GenAI with Azure solution

- Use the chat interface to query documents and get relevant results

- Upload custom data files and perform vector search

- Extend the solution with plugins

- Manage the chargeback functionality

- Secure and monitor the solution

- Scale and integrate the solution with other services

Delivery Guidance

This delivery consists of the following steps:

Day 1:

- Scoping and planning.

- Prepare the environment.

- Adapt deployment scripts according to the customer’s requirements.

Day 2:

- Deploy the Activate GenAI with Azure solution: Create and configure the Azure resources.

- Test and use the chat interface to query documents.

- Upload custom data files and perform vector search.

Day 3:

- Customize the solution’s look and feel.

- Extend the solution with prompt engineering and plugins.

- Show how the solution scales and integrates with other services.

- Show how security and monitoring work.

- Plan the next steps.

Closing

By the end of the Activate GenAI with Azure delivery, stakeholders will have a powerful and innovative solution that can help them improve their document retrieval efficiency, enhance their user experience, increase their productivity, share their knowledge, gain data insights and analytics, and comply with security standards.

2 - Supported Scenarios

Understand the supported scenarios.

Business Scenarios

Document Retrieval

Users ask questions in natural language through a chat interface and receive answers grounded in the organization's documents, together with citations and suggested follow-up questions. Users can also upload their own data files so that they are indexed and searchable with vector, semantic, or hybrid search.

Technical Scenarios

Azure OpenAI enhanced Monitoring

Ensure models are being used responsibly within the corporate environment and within the approved use cases of the service.


Load Balance Azure OpenAI instances

Enable high availability of the model APIs so that user requests are still served even when traffic exceeds the limits of a single Azure OpenAI instance.

References:

Load Balancing in Azure OpenAI Service
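In this solution, Azure API Management sits in front of the Azure OpenAI instances to provide load balancing. To illustrate only the underlying idea, the client-side sketch below rotates requests across several endpoints and temporarily skips any endpoint that answered HTTP 429 (Too Many Requests), honoring its `Retry-After` value. The endpoint URLs are hypothetical, and this is not an APIM policy.

```python
import itertools
import time
from typing import Callable, Optional


class ThrottleAwareBalancer:
    """Round-robin over Azure OpenAI endpoints, skipping recently throttled ones."""

    def __init__(self, endpoints: list[str],
                 clock: Callable[[], float] = time.monotonic) -> None:
        if not endpoints:
            raise ValueError("at least one endpoint is required")
        self._count = len(endpoints)
        self._cycle = itertools.cycle(endpoints)
        self._blocked_until: dict[str, float] = {}  # endpoint -> time it may be used again
        self._clock = clock

    def next_endpoint(self) -> Optional[str]:
        """Return the next endpoint that is not throttled, or None if all are."""
        now = self._clock()
        for _ in range(self._count):
            candidate = next(self._cycle)
            until = self._blocked_until.get(candidate)
            if until is None or until <= now:
                return candidate
        return None

    def mark_throttled(self, endpoint: str, retry_after_seconds: float) -> None:
        """Call after an HTTP 429, passing the response's Retry-After value."""
        self._blocked_until[endpoint] = self._clock() + retry_after_seconds


now = [0.0]  # fake clock so the example is deterministic
balancer = ThrottleAwareBalancer(
    ["https://aoai-eastus.openai.azure.com", "https://aoai-westeurope.openai.azure.com"],
    clock=lambda: now[0],
)
first = balancer.next_endpoint()    # eastus
balancer.mark_throttled(first, retry_after_seconds=10)
second = balancer.next_endpoint()   # westeurope
third = balancer.next_endpoint()    # westeurope again: eastus is still throttled
```

When every endpoint is throttled, `next_endpoint()` returns `None`, and the caller can wait or fail fast instead of hammering an overloaded instance.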

Serverless Document Batch Processing

Use Azure Functions to process documents, create embeddings and store them in the specified memory store.

References:

Azure OpenAI Embeddings QnA
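Before embedding, document text is typically split into chunks small enough to embed and to fit several into a prompt. The minimal sketch below splits text into fixed-size, overlapping pieces. It counts characters rather than tokens, and its default sizes are arbitrary assumptions. Production pipelines, including possibly this accelerator's, often split on token counts or sentence boundaries instead.

```python
def chunk_text(text: str, max_chars: int = 1000, overlap: int = 100) -> list[str]:
    """Split text into chunks of at most max_chars characters.

    Consecutive chunks share `overlap` characters, so content near a chunk
    boundary appears in both neighboring chunks.
    """
    if max_chars <= 0:
        raise ValueError("max_chars must be positive")
    if not 0 <= overlap < max_chars:
        raise ValueError("overlap must be >= 0 and smaller than max_chars")
    step = max_chars - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + max_chars])
        if start + max_chars >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


print(chunk_text("abcdefghijklmnopqrstuvwxy", max_chars=10, overlap=2))
# ['abcdefghij', 'ijklmnopqr', 'qrstuvwxy']
```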

3 - Deploy the Architecture

This guide provides details and instructions to help you deploy the Activate GenAI with Azure Accelerator for your customer.

High-level Architecture

The following diagram shows the high-level architecture of the Activate GenAI with Azure Accelerator:

Deployment

Work in progress. There are still some manual steps to be automated. Check here for the latest updates.

Run the following commands to deploy the Activate GenAI with Azure Accelerator:

cd infra

terraform init

terraform apply

4 - Concepts

Understand the core components of the solution: Azure OpenAI, Azure Cognitive Search, Azure APIM, and more.

4.1 - Azure OpenAI

The core of the Generative AI solution.

Azure OpenAI Service provides REST API access to OpenAI’s powerful language models, including the GPT-4, GPT-35-Turbo, and Embeddings model series. The GPT-4 and GPT-35-Turbo model series have reached general availability. These models can be easily adapted to your specific task, including but not limited to content generation, summarization, semantic search, and natural language to code translation. Users can access the service through REST APIs, the Python SDK, or the web-based interface in Azure OpenAI Studio.
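To show what REST API access looks like, the sketch below builds a chat-completions request with only the standard library. The resource and deployment names are hypothetical, and `2023-05-15` is assumed as the API version (one generally available version; newer ones exist). Authentication here uses the `api-key` header.

```python
import json
import urllib.request


def build_chat_request(resource: str, deployment: str, api_key: str,
                       messages: list[dict], api_version: str = "2023-05-15",
                       temperature: float = 0.0) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request for an Azure OpenAI deployment."""
    # The deployment name (chosen when you deploy the model) is part of the URL path.
    url = (f"https://{resource}.openai.azure.com/openai/deployments/"
           f"{deployment}/chat/completions?api-version={api_version}")
    body = json.dumps({"messages": messages, "temperature": temperature}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "api-key": api_key},
    )


def send_chat(request: urllib.request.Request) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=60) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


# Hypothetical names; read the key from a secret store, not from source code.
request = build_chat_request(
    "my-aoai-resource", "gpt-35-turbo", api_key="<your-key>",
    messages=[{"role": "system", "content": "You are a helpful assistant."},
              {"role": "user", "content": "Summarize our travel policy."}],
)
# reply = send_chat(request)  # performs the network call
```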

Important concepts about Azure OpenAI:

Azure OpenAI Studio

Models available

- GPT-35-Turbo series: the typical “ChatGPT” model, recommended for most Azure OpenAI projects. When more capability is needed, GPT-4 can be considered (take into account that it implies more latency and cost).

- GPT-4 series: the most advanced language models, available once you fill in the GPT-4 Request Form.

- Embeddings series: embeddings make it easier to do machine learning on large inputs representing words by capturing the semantic similarities in a vector space. Therefore, you can use embeddings to determine if two text chunks are semantically related or similar, and provide a score to assess similarity.
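To make the similarity score concrete, cosine similarity between two embedding vectors can be computed as below. The 3-dimensional vectors are toy values (text-embedding-ada-002, for example, returns 1,536-dimensional vectors). OpenAI's documentation states that its embeddings are normalized to length 1, in which case the dot product alone gives the same score.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


# Toy 3-dimensional "embeddings" (real ones have far more dimensions).
invoice = [0.9, 0.1, 0.0]
receipt = [0.8, 0.2, 0.1]
holiday = [0.0, 0.2, 0.9]
print(cosine_similarity(invoice, receipt) > cosine_similarity(invoice, holiday))  # True
```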

Not all models are available in all Azure regions. For regional availability, check the documentation: Model summary table and region availability.

Deployment: once you instantiate a specific model, it is available as a deployment. You can create and delete deployments of available models as needed. Deployments are managed through Azure OpenAI Studio.

Quotas: the quotas available in Azure are allocated per model and per region, within a subscription. Learn more about quotas. In the documentation you can find best practices to manage your quota.
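Quotas are expressed in tokens per minute (TPM). Azure also enforces a requests-per-minute (RPM) limit derived from the TPM assignment, and at the time of writing the documented ratio is 6 RPM per 1,000 TPM. The sketch below uses that ratio to estimate which limit a deployment hits first. Treat the ratio as an assumption and re-check it against the current quota documentation.

```python
def requests_per_minute(tpm_quota: int, tokens_per_request: int) -> int:
    """Estimate sustainable requests per minute for one model deployment.

    tokens_per_request is the average number of tokens each call counts
    against the quota.
    """
    if tokens_per_request <= 0:
        raise ValueError("tokens_per_request must be positive")
    rpm_cap = tpm_quota * 6 // 1000            # assumed ratio: 6 RPM per 1,000 TPM
    token_cap = tpm_quota // tokens_per_request
    return min(rpm_cap, token_cap)


# A 120K TPM deployment allows 720 RPM, but with ~1,500-token RAG prompts
# the token budget runs out first:
print(requests_per_minute(120_000, 1_500))  # 80
```

RAG prompts carry retrieved passages, so they are usually token-heavy; this is why the token budget, not the request cap, tends to be the binding limit.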

Activate GenAI with Azure uses an Azure OpenAI embeddings model to vectorize the content and the ChatGPT model to converse over that content.

For more information check: What is Azure OpenAI

4.2 - Azure Cognitive Search

Used to build a rich search experience over private, heterogeneous content in web, mobile, and enterprise applications.

Azure Cognitive Search (formerly known as “Azure Search”) is a cloud search service that gives developers infrastructure, APIs, and tools for building a rich search experience over private, heterogeneous content in web, mobile, and enterprise applications.

Search is foundational to any app that surfaces text to users, where common scenarios include catalog or document search, online retail apps, or data exploration over proprietary content. When you create a search service, you’ll work with the following capabilities:

- A search engine for full text and vector search over a search index containing user-owned content

- Rich indexing, with lexical analysis and optional AI enrichment for content extraction and transformation

- Rich query syntax for vector queries, text search, fuzzy search, autocomplete, geo-search and more

- Programmability through REST APIs and client libraries in Azure SDKs

- Azure integration at the data layer, machine learning layer, and AI (Azure AI services)
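A combined (hybrid) query against the index can be expressed through the Search REST API. The sketch below only builds the HTTP request. It assumes the `2023-11-01` API version, whose request body uses `vectorQueries` (earlier preview versions used a different shape), and the index field names `contentVector`, `content`, and `sourcefile` are hypothetical. Omitting `query_text` gives a pure vector query.

```python
import json
import urllib.request
from typing import Optional


def build_search_request(
    service: str,
    index: str,
    api_key: str,
    query_vector: list[float],
    query_text: Optional[str] = None,
    k: int = 3,
    vector_field: str = "contentVector",    # hypothetical field name
    select: str = "id,content,sourcefile",  # hypothetical field names
    api_version: str = "2023-11-01",
) -> urllib.request.Request:
    """Build (but do not send) a vector or hybrid search request."""
    body: dict = {
        # Nearest-neighbor search over the embedding field.
        "vectorQueries": [
            {"kind": "vector", "vector": query_vector, "fields": vector_field, "k": k}
        ],
        "select": select,
        "top": k,
    }
    if query_text:
        # Adding a full-text query turns this into a hybrid query; the service
        # merges both result sets using Reciprocal Rank Fusion.
        body["search"] = query_text
    url = (f"https://{service}.search.windows.net/indexes/{index}"
           f"/docs/search?api-version={api_version}")
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json", "api-key": api_key},
    )


# Hypothetical service and index names; the vector would come from the embeddings model.
hybrid = build_search_request("my-search", "docs", "<your-key>", [0.1, 0.2],
                              query_text="vacation policy")
```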

Activate GenAI with Azure uses Azure Cognitive Search to serve an index of vectorized content that the LLM (ChatGPT) uses to respond to users’ queries.

For more information check: What is Azure Cognitive Search?

Learning Path: Implement knowledge mining with Azure Cognitive Search

4.3 - Semantic Kernel

An open-source SDK that lets you easily combine AI services like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C# and Python.

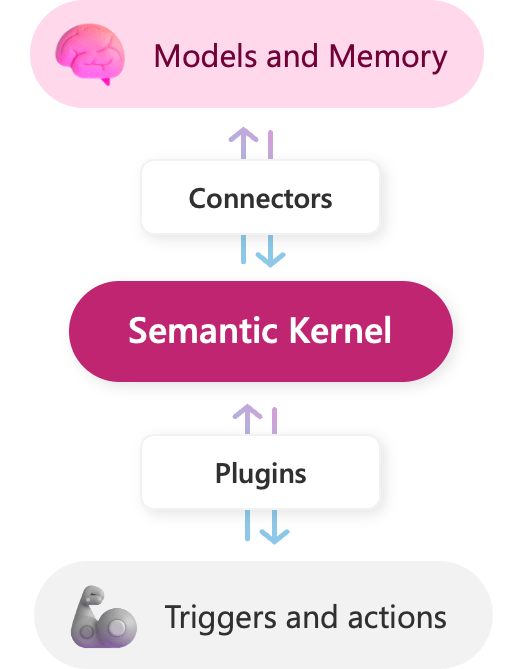

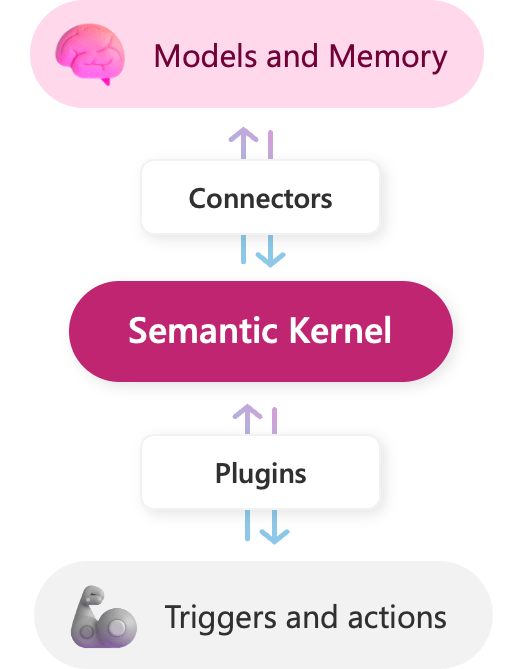

Semantic Kernel is an open-source SDK that lets you easily combine AI services like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C# and Python. By doing so, you can create AI apps that combine the best of both worlds.

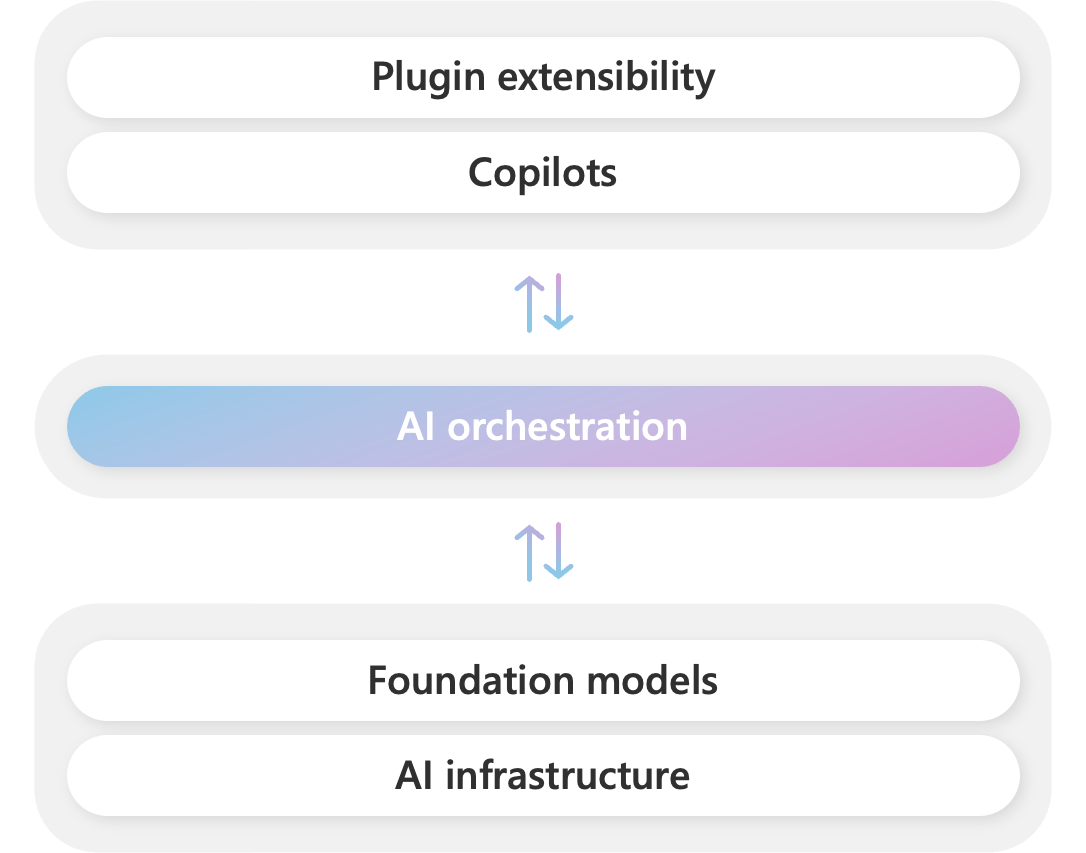

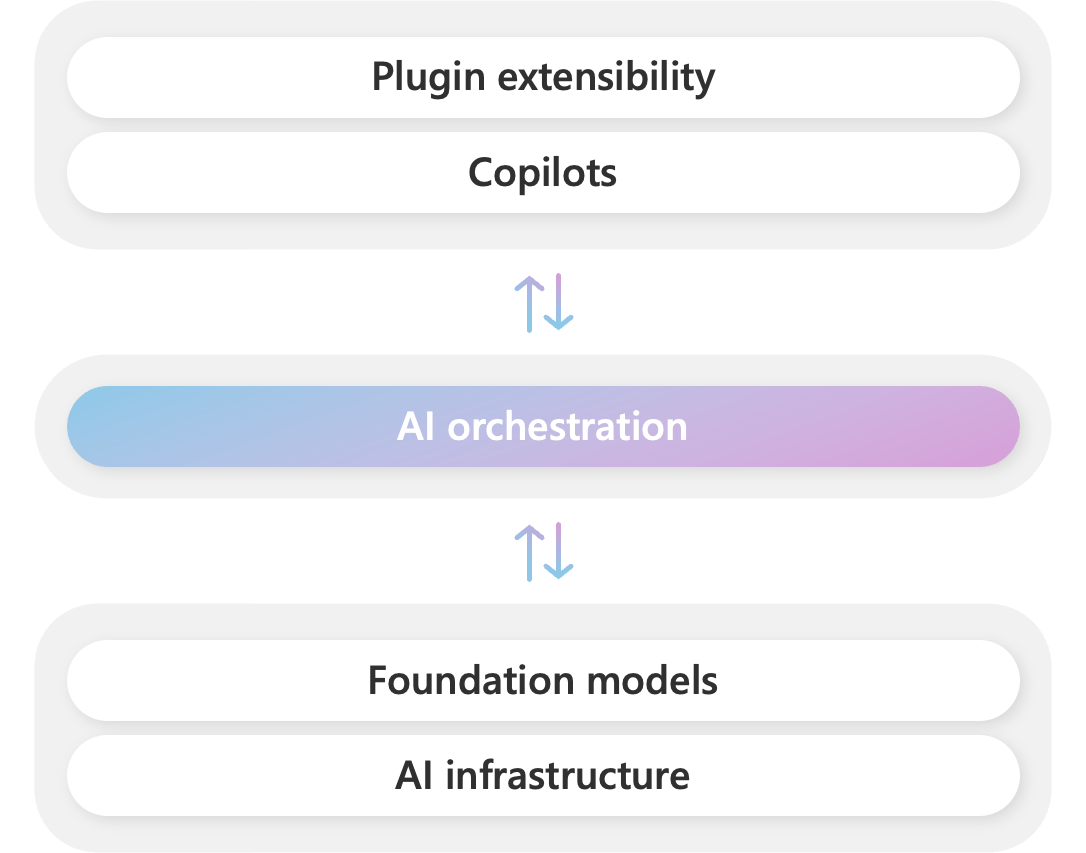

Microsoft powers its Copilot system with a stack of AI models and plugins. At the center of this stack is an AI orchestration layer that allows us to combine AI models and plugins together to create brand new experiences for users.

To help developers build their own Copilot experiences on top of AI plugins, we have released Semantic Kernel, a lightweight open-source SDK that allows you to orchestrate AI plugins. With Semantic Kernel, you can leverage the same AI orchestration patterns that power Microsoft 365 Copilot and Bing in your own apps, while still leveraging your existing development skills and investments.

Activate GenAI with Azure uses Semantic Kernel to orchestrate the prompts, Azure OpenAI calls and Azure Cognitive Search queries and results.
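Semantic Kernel prompt templates reference variables with the `{{$name}}` syntax (for example `{{$input}}`). The stand-alone sketch below mimics only that variable substitution to make the idea visible. It is not Semantic Kernel's template engine, which also supports calling functions from templates, and the prompt text and the `sources` variable are hypothetical.

```python
import re

# Matches {{$name}}, allowing optional spaces inside the braces.
_VARIABLE = re.compile(r"\{\{\s*\$(\w+)\s*\}\}")


def render_prompt(template: str, variables: dict[str, str]) -> str:
    """Replace each {{$name}} placeholder with variables[name]."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            # Fail loudly rather than sending a half-filled prompt to the model.
            raise KeyError(f"missing prompt variable: {name}")
        return variables[name]

    return _VARIABLE.sub(substitute, template)


# Hypothetical grounding prompt written in Semantic Kernel's template style.
PROMPT = """Answer the question using only the sources below.
Sources:
{{$sources}}

Question: {{$input}}"""

print(render_prompt(PROMPT, {"sources": "[handbook.pdf]: 20 vacation days.",
                             "input": "How many vacation days do I get?"}))
```

Keeping the prompt as a template, separate from the code that fills it, is what lets prompt engineering happen without touching the orchestration logic.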

For more information check: Semantic Kernel

4.4 - Azure Container Apps

Host the application in Azure Container Apps.

Azure Container Apps is a managed serverless container service that enables executing code in containers without the overhead of managing virtual machines, orchestrators, or adopting a highly opinionated application framework.

Common uses of Azure Container Apps include:

- Deploying API endpoints

- Hosting background processing jobs

- Handling event-driven processing

- Running microservices

Activate GenAI with Azure uses Azure Container Apps to deploy the chat user interface that will answer user queries based on the company’s documents.

For more information check: Azure Container Apps documentation

4.5 - Azure APIM

Use Azure APIM to enable chargeback, monitoring, and load balancing for Azure OpenAI instances.

Azure API Management is a platform-as-a-service that provides a hybrid, multicloud management platform for APIs across all environments. It supports the complete API lifecycle and helps customers manage APIs as first-class assets throughout their lifecycle.

Activate GenAI with Azure uses API Management to enable chargeback, monitoring, and load balancing for Azure OpenAI instances.
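Every Azure OpenAI completion response includes a `usage` object (`prompt_tokens`, `completion_tokens`, `total_tokens`), which a gateway such as API Management can record per caller. The sketch below shows only the chargeback arithmetic over such records. The consumer names and per-1K-token prices are placeholders, not real Azure prices, and it does not show how this solution's APIM policies capture the usage data.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class UsageRecord:
    consumer: str            # e.g. an APIM subscription or team name
    prompt_tokens: int
    completion_tokens: int


def record_from_response(consumer: str, response: dict) -> UsageRecord:
    """Extract token usage from a parsed chat-completions response body."""
    usage = response["usage"]
    return UsageRecord(consumer, usage["prompt_tokens"], usage["completion_tokens"])


def chargeback(records: list[UsageRecord], price_per_1k_prompt: float,
               price_per_1k_completion: float) -> dict[str, float]:
    """Total cost per consumer; prompt and completion tokens are priced separately."""
    totals: dict[str, float] = defaultdict(float)
    for r in records:
        totals[r.consumer] += (r.prompt_tokens / 1000 * price_per_1k_prompt
                               + r.completion_tokens / 1000 * price_per_1k_completion)
    return dict(totals)


records = [
    record_from_response("team-a", {"usage": {"prompt_tokens": 1000,
                                              "completion_tokens": 500,
                                              "total_tokens": 1500}}),
    UsageRecord("team-b", 2000, 0),
]
costs = chargeback(records, price_per_1k_prompt=0.0015,
                   price_per_1k_completion=0.002)  # placeholder prices
```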

For more information check: What is APIM?

4.6 - Azure Functions

Serverless application used to batch-process documents and create embeddings.

Azure Functions is a serverless solution that allows you to write less code, maintain less infrastructure, and save on costs. Instead of worrying about deploying and maintaining servers, the cloud infrastructure provides all the up-to-date resources needed to keep your applications running.

Functions provides a comprehensive set of event-driven triggers and bindings that connect your functions to other services without having to write extra code. You focus on the code that matters most to you, in the most productive language for you, and Azure Functions handles the rest.

Activate GenAI with Azure uses Azure Functions to split the documents’ text into chunks and create embeddings that are added to the Azure Cognitive Search index.

For more information check: What is Azure Functions? For the best experience with the Functions documentation, choose your preferred development language from the list of native Functions languages at the top of the article.
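The indexing step can be sketched as building an "index documents" payload, which is POSTed to `https://<service>.search.windows.net/indexes/<index>/docs/index?api-version=2023-11-01`. The field names (`id`, `content`, `contentVector`, `sourcefile`) and the `embed` callable are hypothetical; `embed` stands in for a call to the Azure OpenAI embeddings deployment.

```python
import base64
from typing import Callable


def build_index_batch(source_file: str, chunks: list[str],
                      embed: Callable[[str], list[float]]) -> dict:
    """Build an 'index documents' request body for one source file's chunks."""
    actions = []
    for i, chunk in enumerate(chunks):
        # Document keys may contain only letters, digits, '-', '_' and '=';
        # URL-safe base64 of "<file>-<chunk number>" always satisfies that.
        key = base64.urlsafe_b64encode(f"{source_file}-{i}".encode("utf-8")).decode("ascii")
        actions.append({
            "@search.action": "mergeOrUpload",  # insert, or merge into the existing document
            "id": key,
            "content": chunk,
            "contentVector": embed(chunk),      # embedding of this chunk's text
            "sourcefile": source_file,          # kept so answers can cite the file
        })
    return {"value": actions}


# Fake embedding function so the example runs without calling Azure OpenAI.
batch = build_index_batch("handbook.pdf", ["Vacation: 20 days.", "Sick leave: 10 days."],
                          embed=lambda text: [float(len(text)), 0.0])
```

Deriving the key from the file name and chunk number makes re-processing the same file idempotent: `mergeOrUpload` updates the existing chunks instead of creating duplicates.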

4.7 - Azure Storage

Store the documents in Azure Blob Storage.

Azure Blob Storage is Microsoft’s object storage solution for the cloud. Blob Storage is optimized for storing massive amounts of unstructured data. Unstructured data is data that doesn’t adhere to a particular data model or definition, such as text or binary data.

Blob Storage is designed for:

- Serving images or documents directly to a browser.

- Storing files for distributed access.

- Streaming video and audio.

- Writing to log files.

- Storing data for backup and restore, disaster recovery, and archiving.

- Storing data for analysis by an on-premises or Azure-hosted service.

Activate GenAI with Azure uses Blob Storage to store the documents (PDFs) that are then vectorized and indexed.

For more information check: What is Azure Blob Storage?

4.8 - Azure Application Insights

Monitor the application using Application Insights.

Application Insights is an extension of Azure Monitor and provides application performance monitoring (APM) features. APM tools are useful to monitor applications from development, through test, and into production in the following ways:

- Proactively understand how an application is performing.

- Reactively review application execution data to determine the cause of an incident.

Along with collecting metrics and application telemetry data, which describe application activities and health, you can use Application Insights to collect and store application trace logging data.

The log trace is associated with other telemetry to give a detailed view of the activity. Adding trace logging to existing apps only requires providing a destination for the logs. You rarely need to change the logging framework.

Application Insights provides other features including, but not limited to:

- Live Metrics: Observe activity from your deployed application in real time with no effect on the host environment.

- Availability: Also known as synthetic transaction monitoring. Probe the external endpoints of your applications to test the overall availability and responsiveness over time.

- GitHub or Azure DevOps integration: Create GitHub or Azure DevOps work items in the context of Application Insights data.

- Usage: Understand which features are popular with users and how users interact and use your application.

- Smart detection: Detect failures and anomalies automatically through proactive telemetry analysis.

Application Insights supports distributed tracing, which is also known as distributed component correlation. This feature allows searching for and visualizing an end-to-end flow of a specific execution or transaction. The ability to trace activity from end to end is important for applications that were built as distributed components or microservices.

Activate GenAI with Azure uses Application Insights to monitor application logs.

For more information check: What is Application Insights?

5 - Resources & References

Check out other resources and references

Sample Repos:

Azure APIM & Azure OpenAI

Azure Services

6 - Contribution Guidelines

How to contribute to the project

Contributing

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.microsoft.com.

When you submit a pull request, a CLA-bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., label, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repositories using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.